-

Blog Post How integrated thinking can help boost the energy transition

-

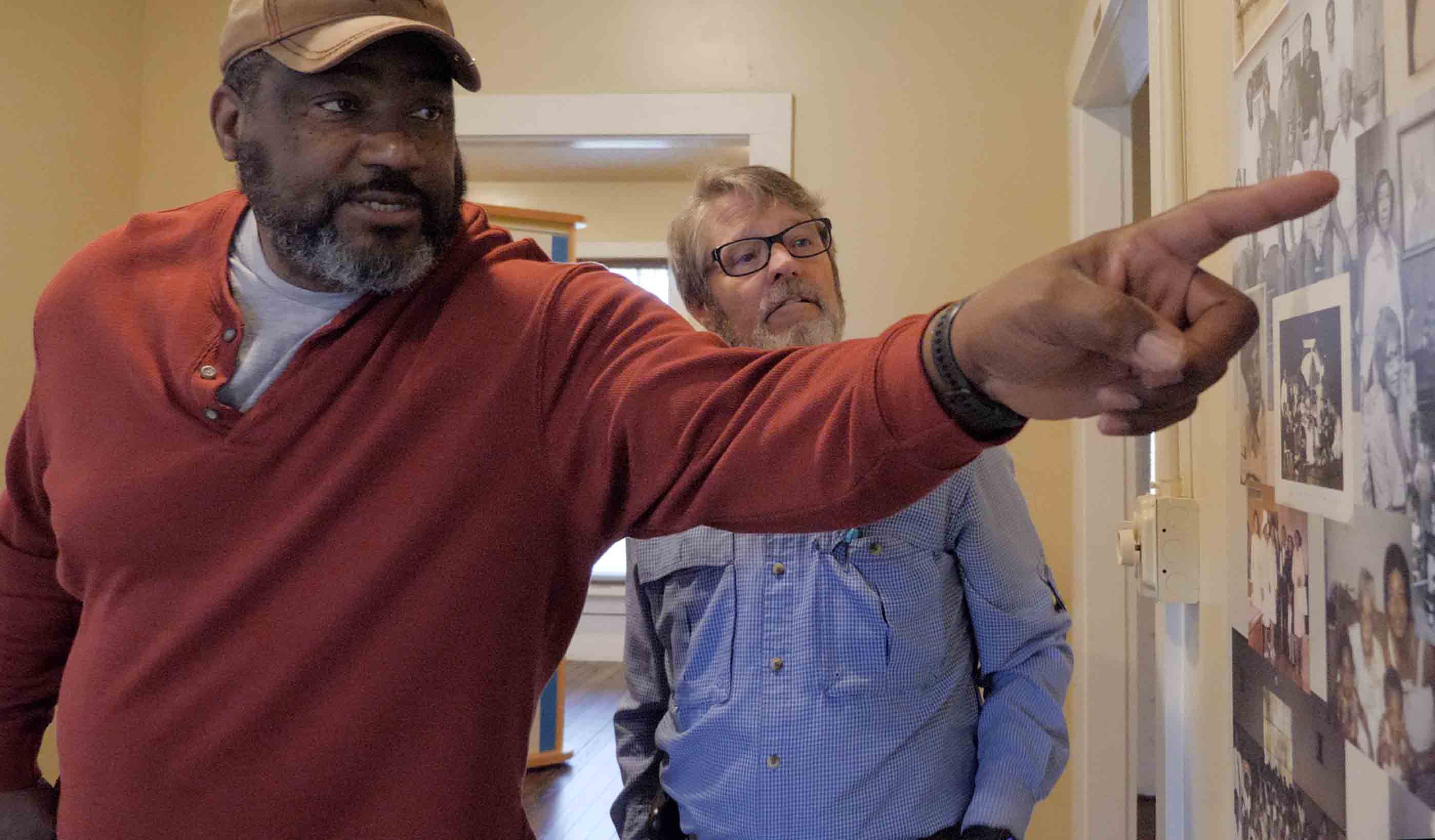

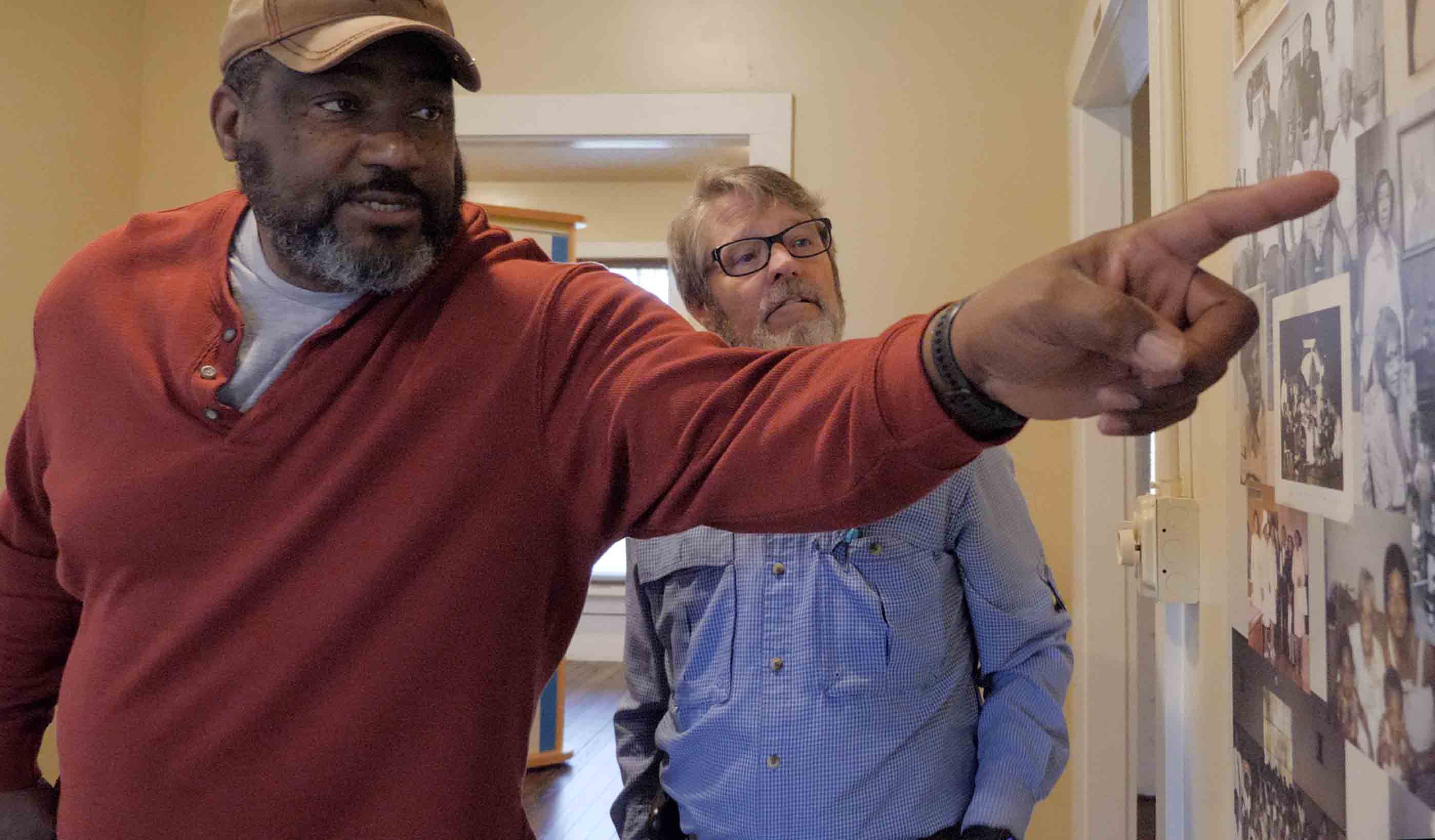

Video A road project uncovered a historical archaeology site and one family’s connection to it

-

Blog Post Flash floods: Predicting the most difficult type of flood risk

-

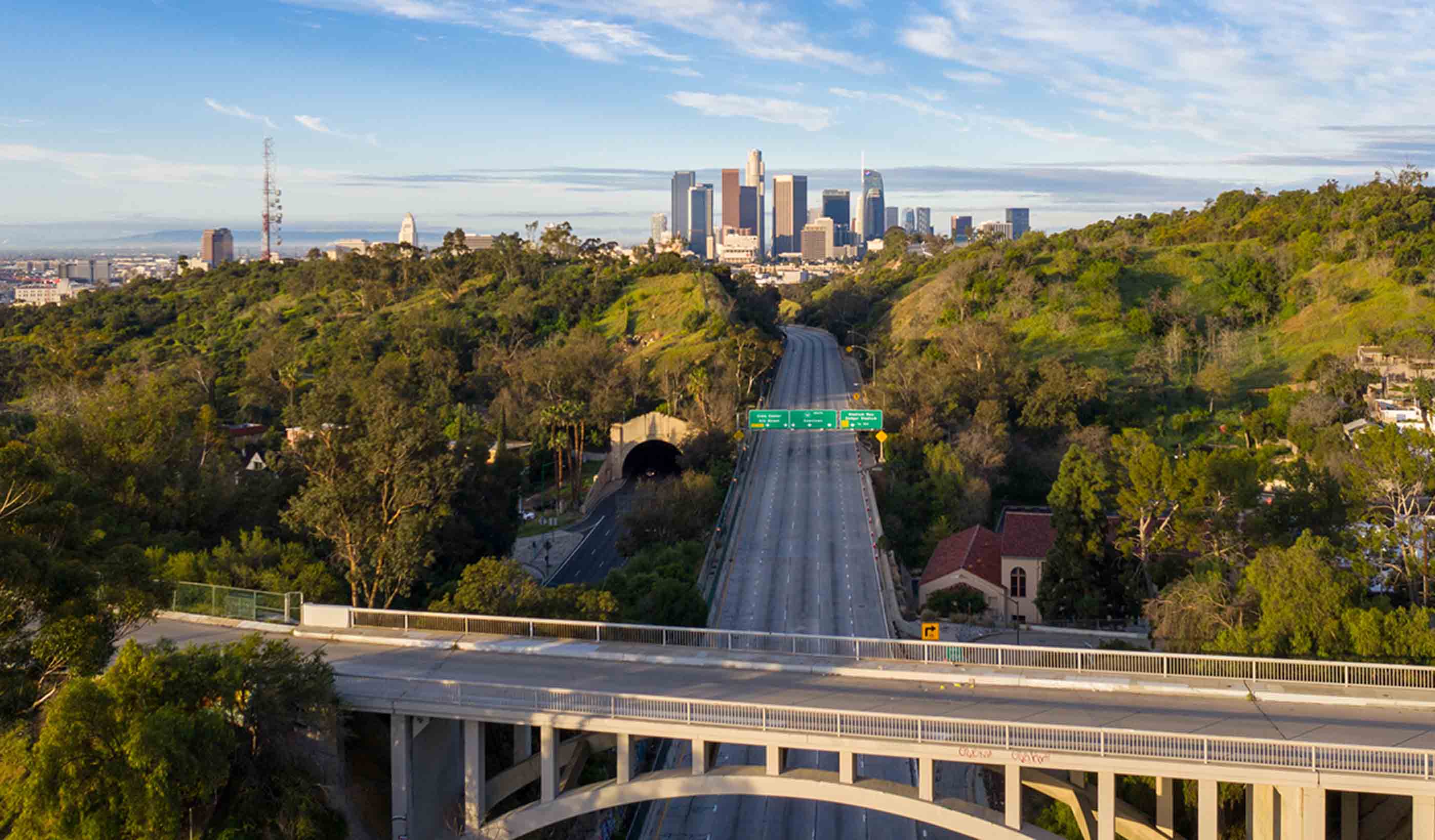

Blog Post Transportation planning strategies for the FIFA World Cup and major events

Ideas

Explore the trends, innovations, and challenges impacting the built and natural environments.

Publications

Trending Topics

-

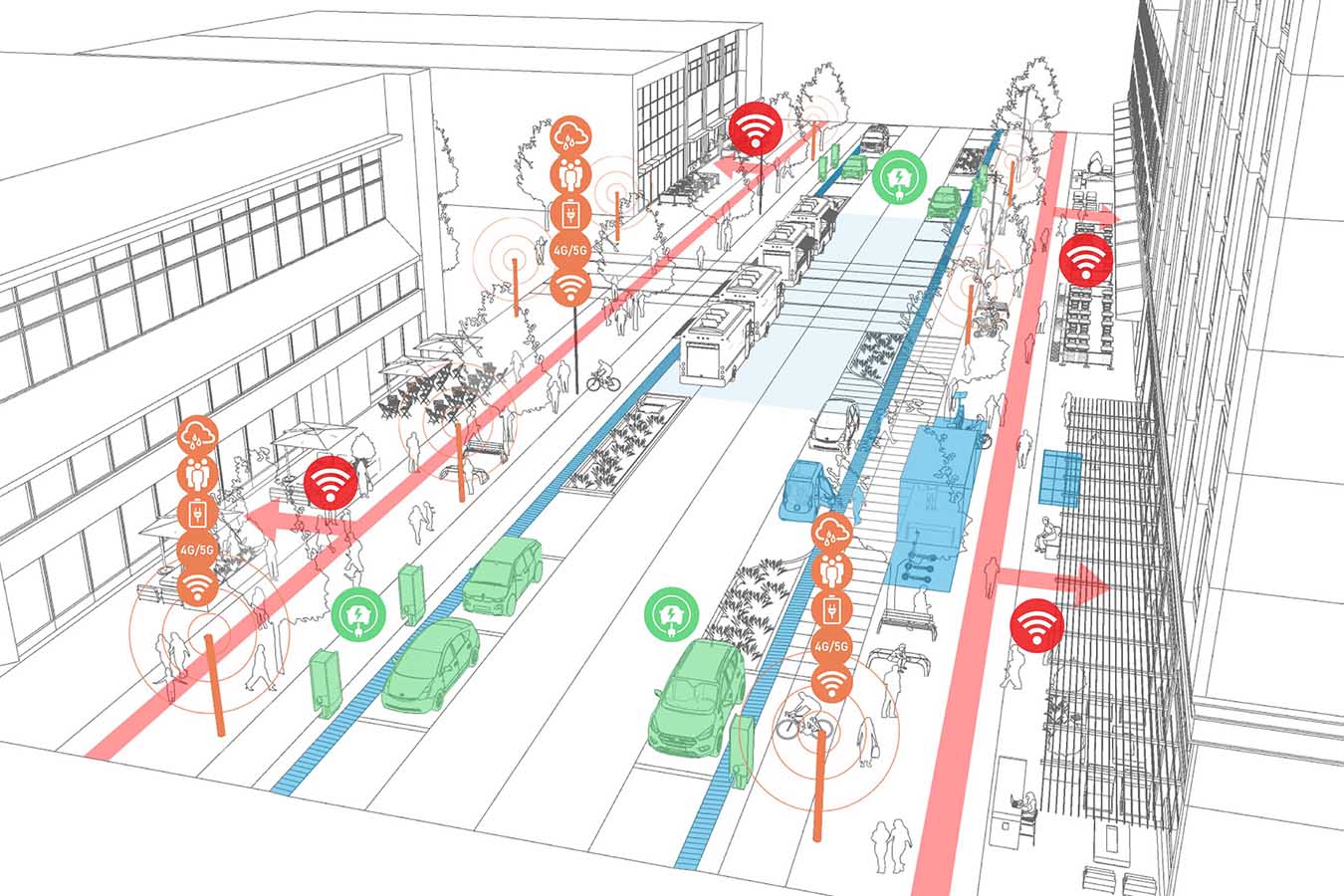

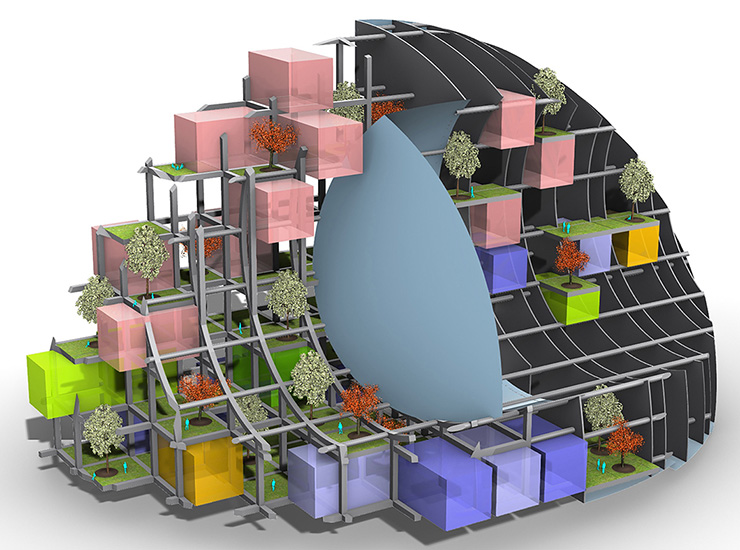

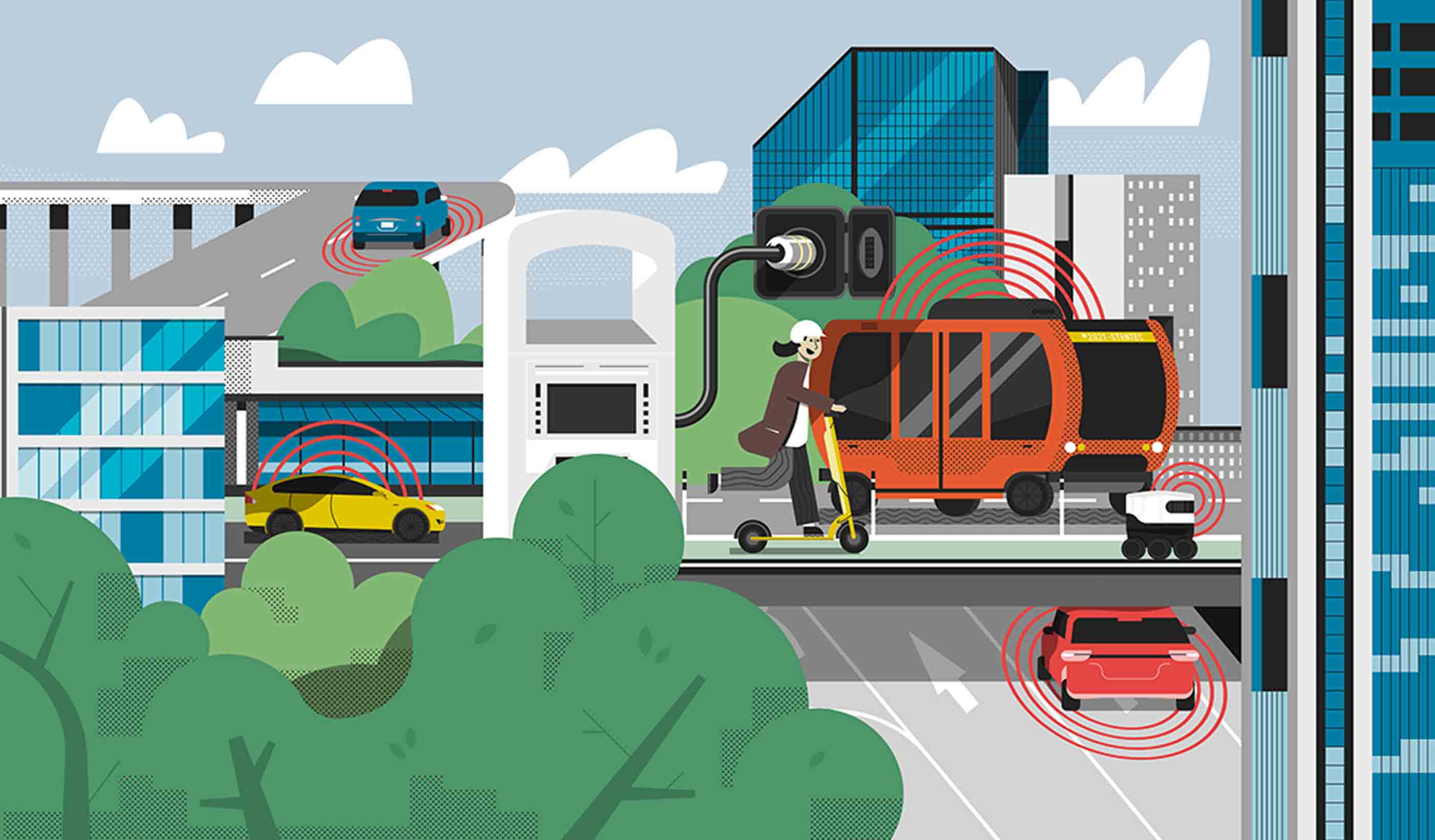

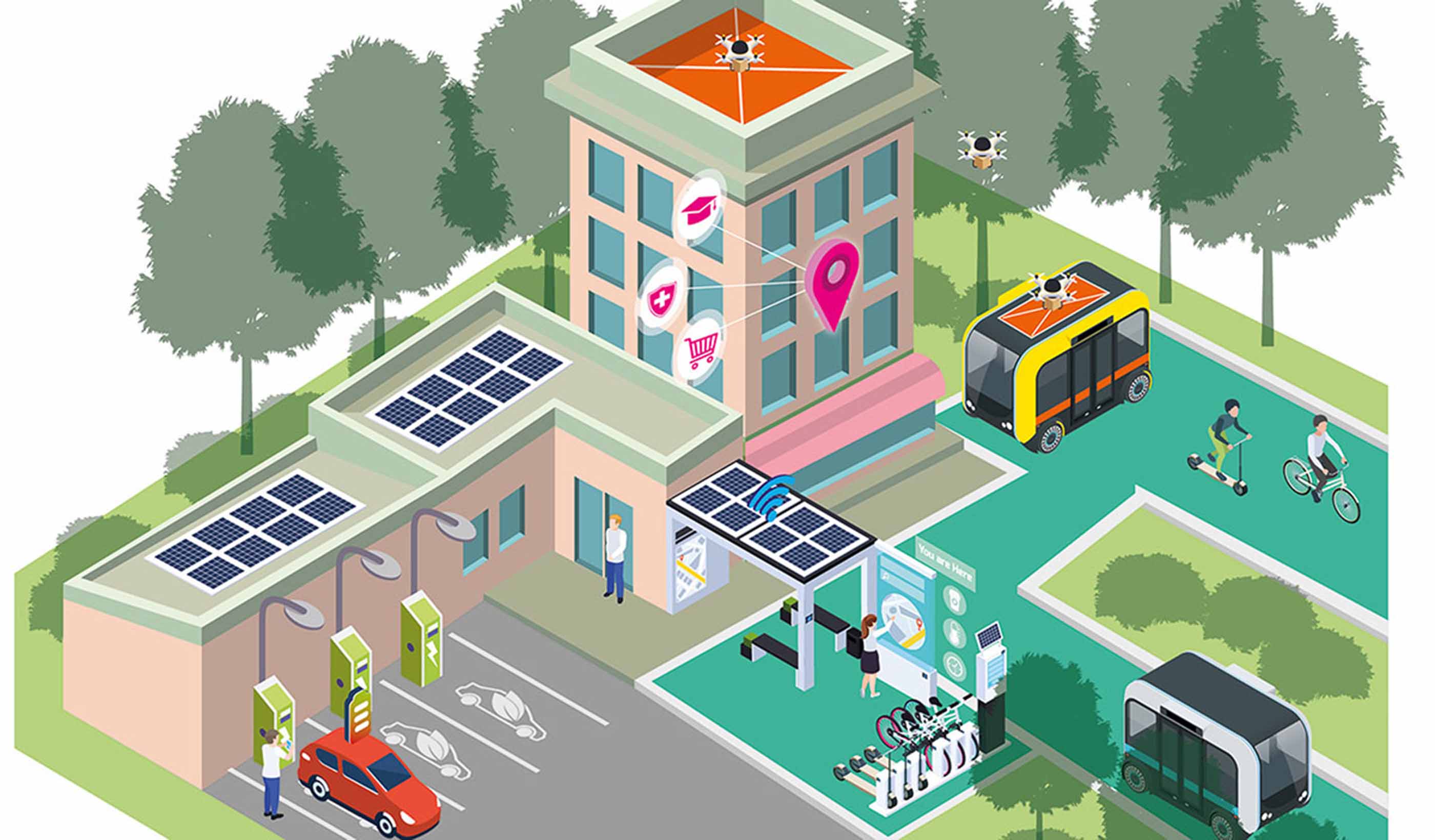

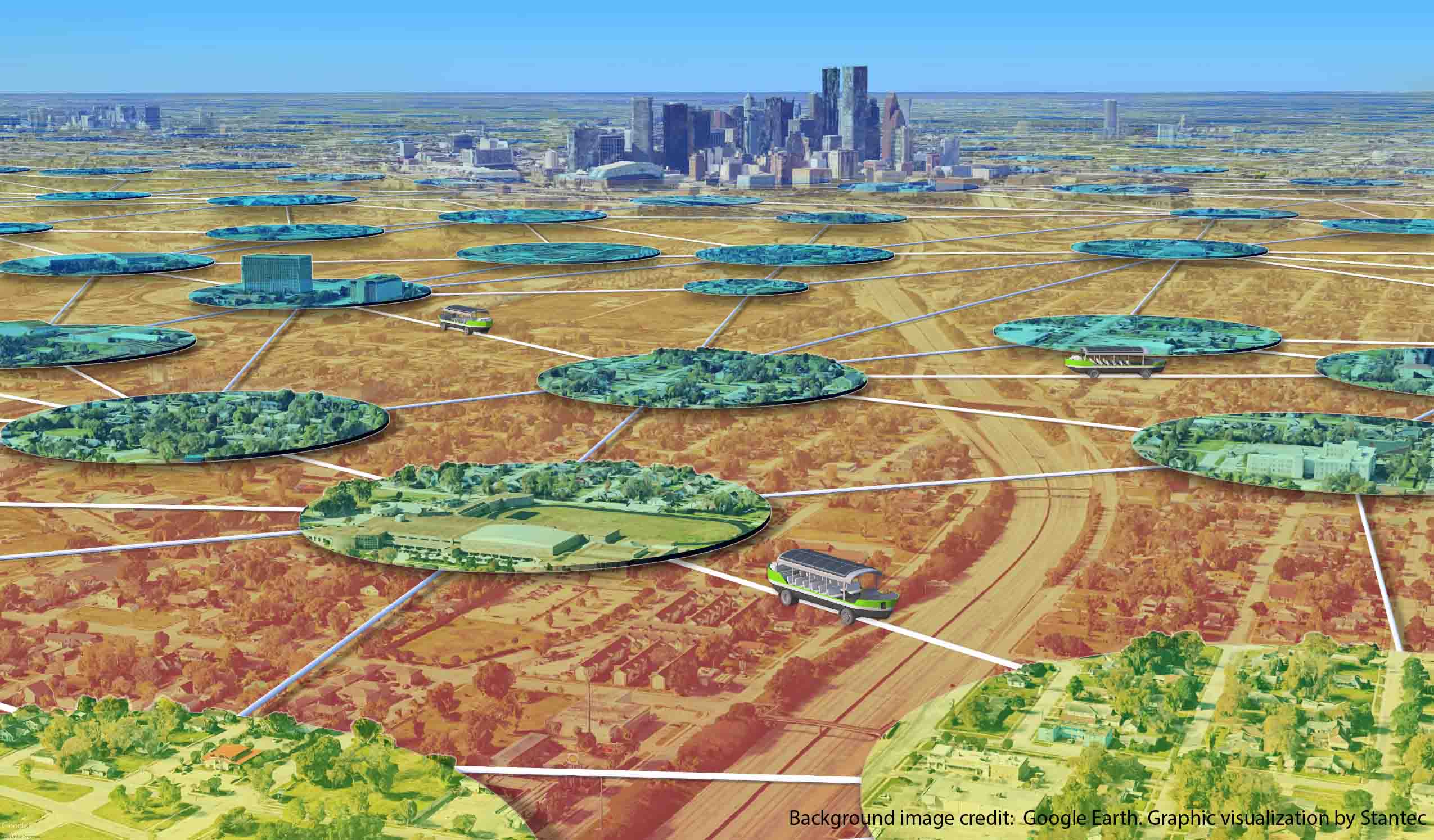

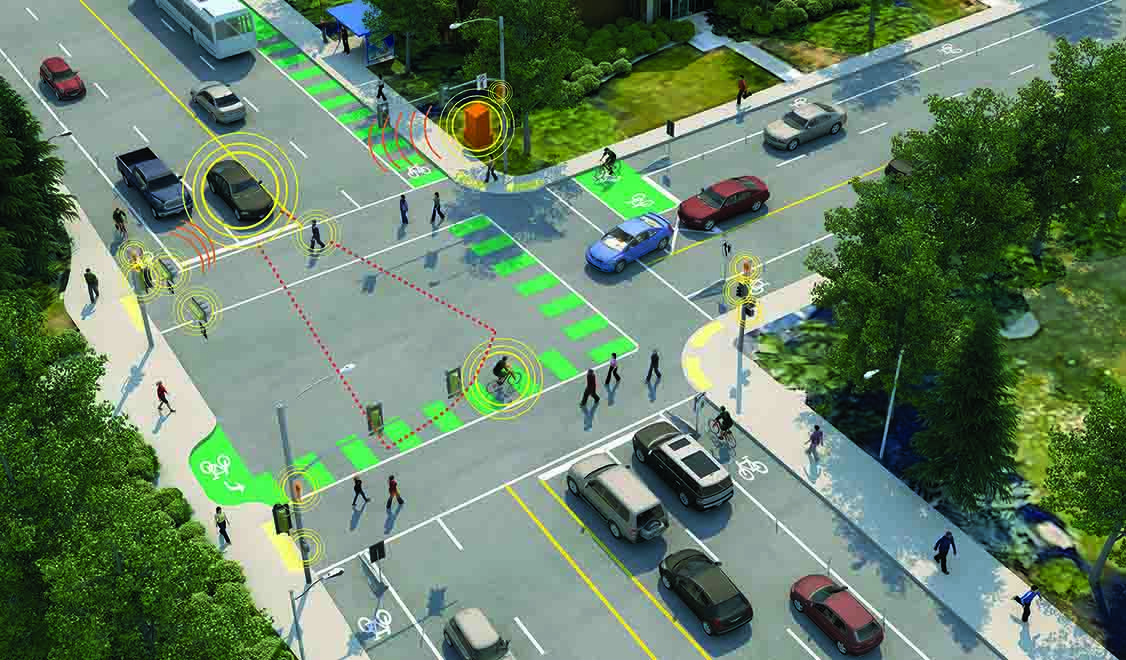

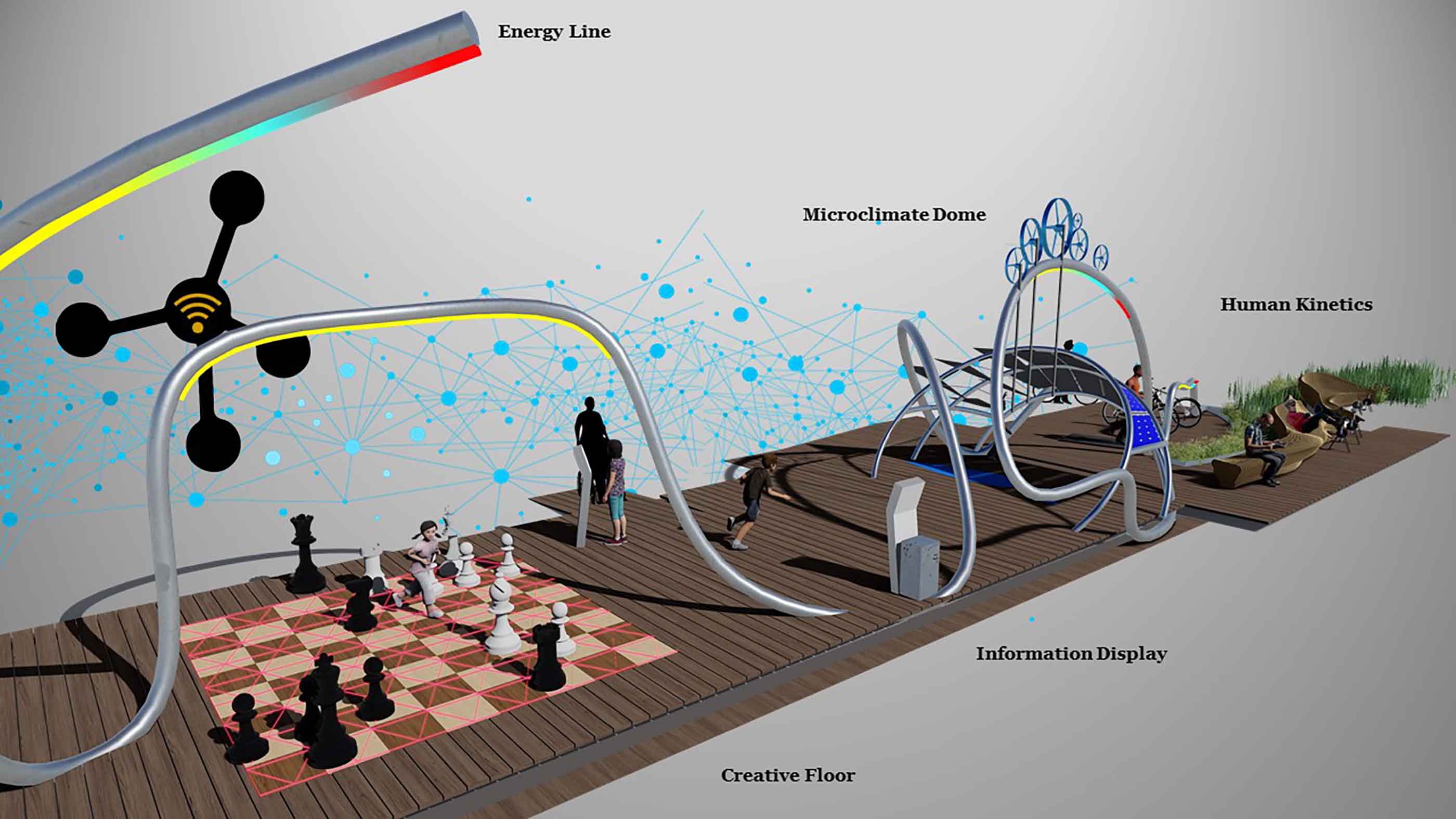

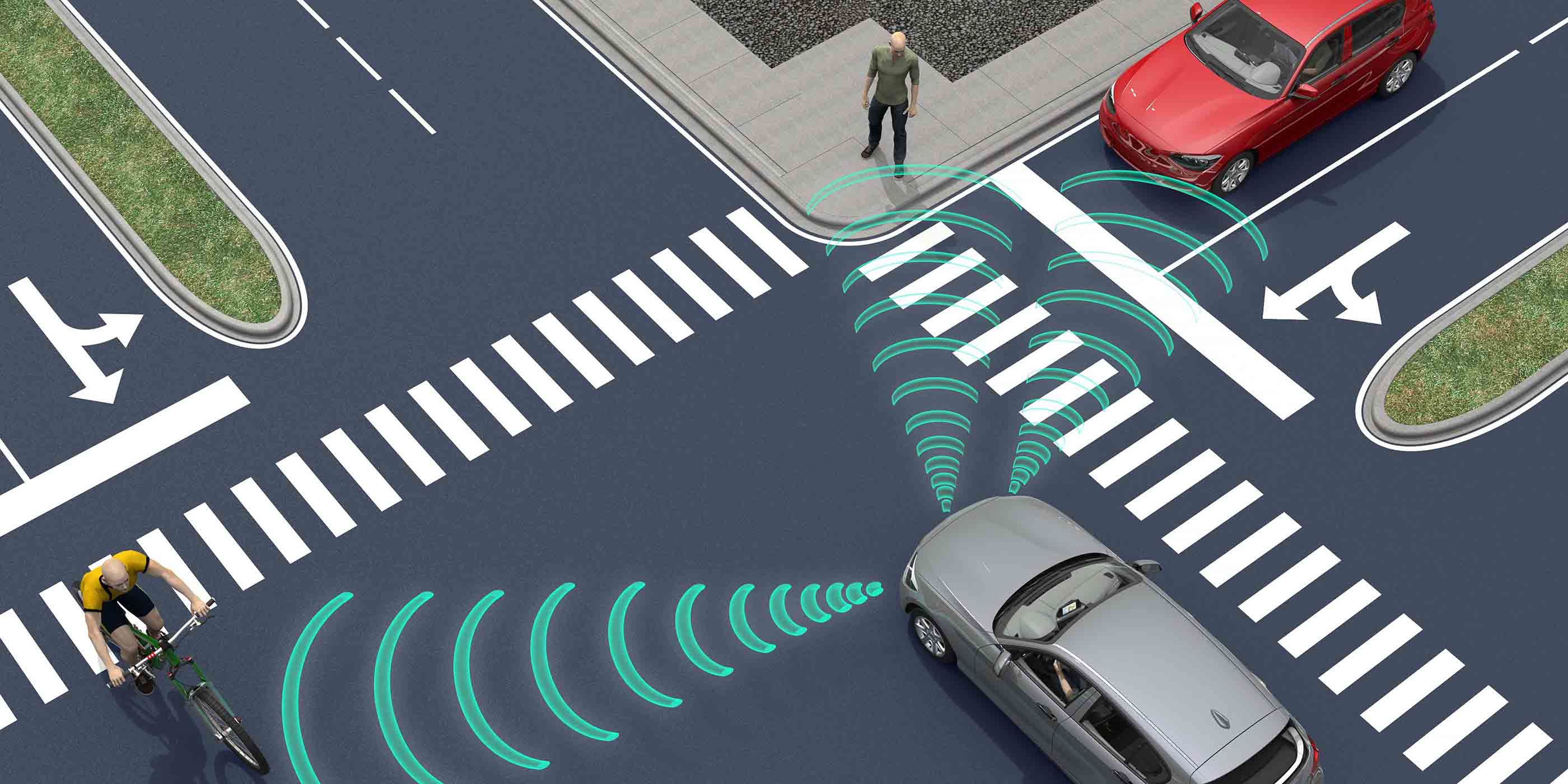

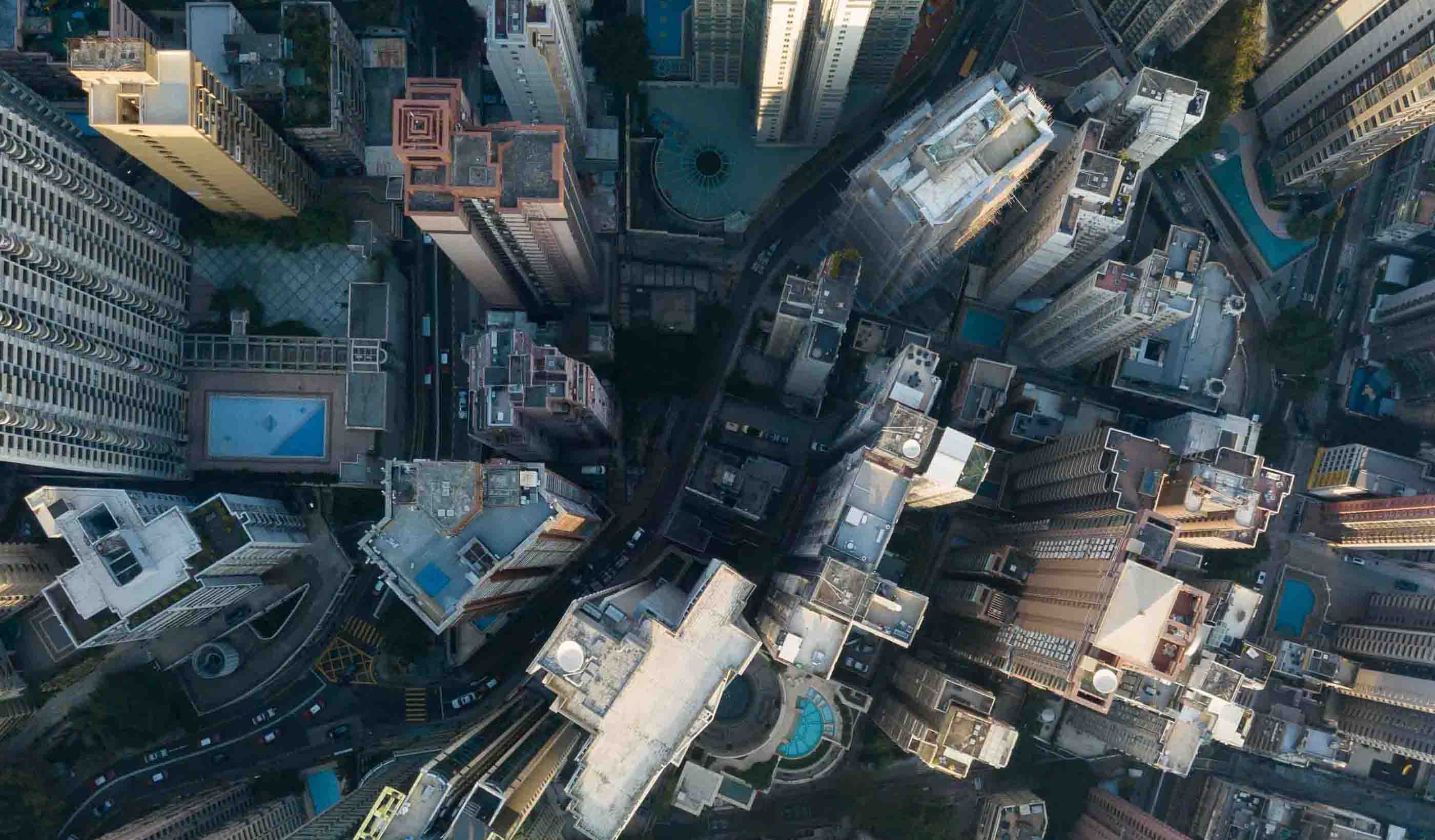

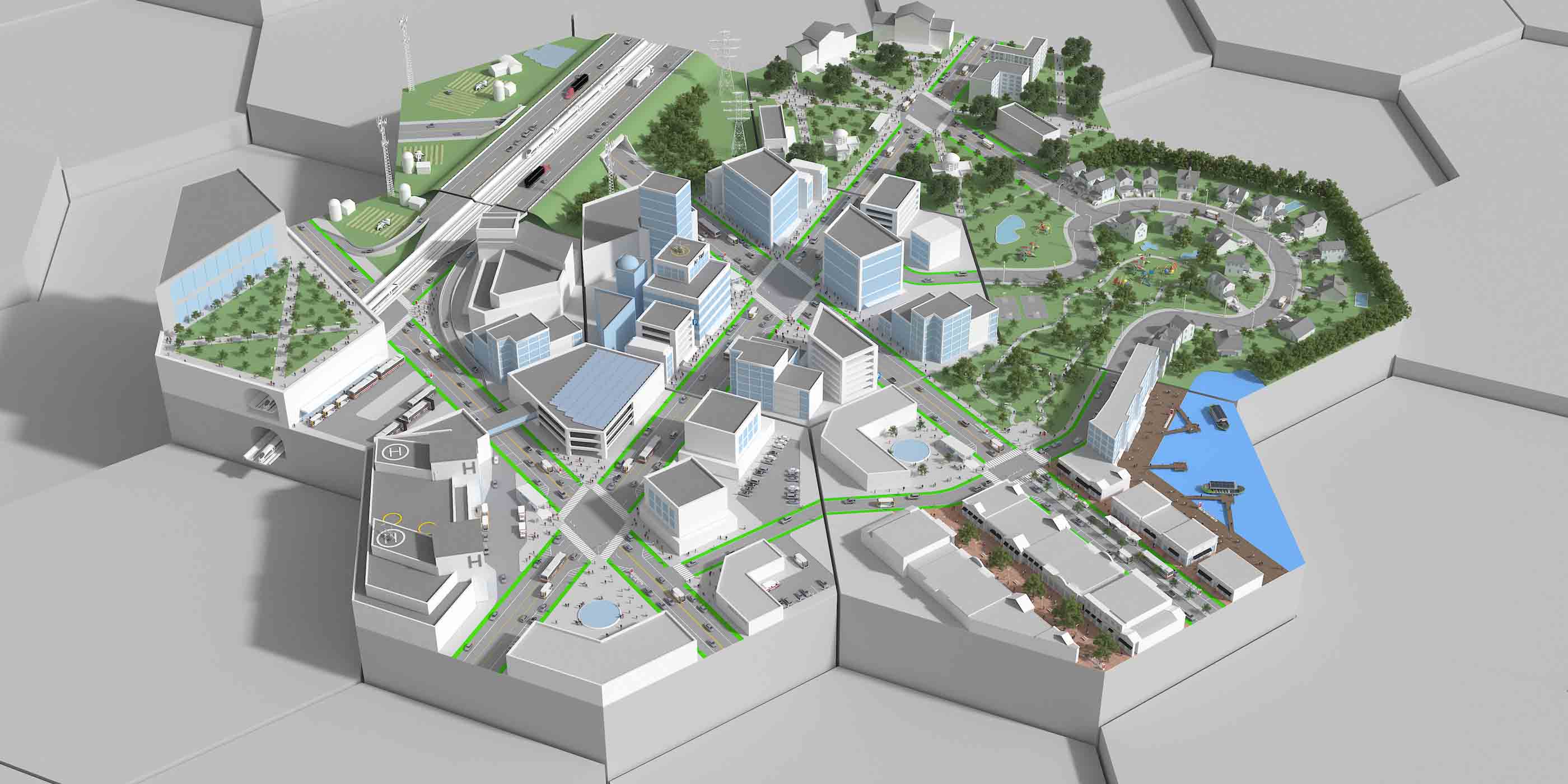

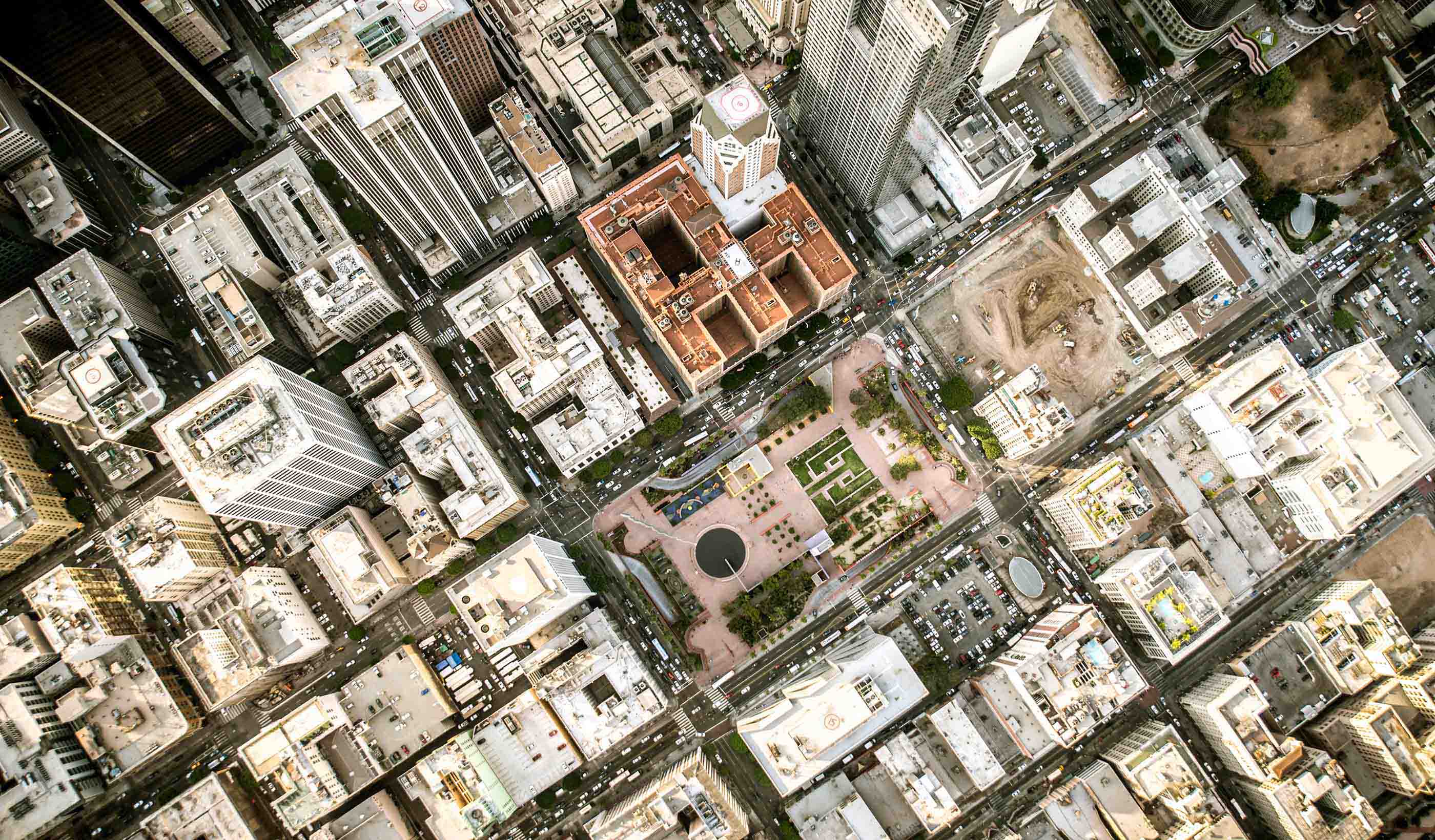

Smart Cities

Explore how smart technologies are transforming urban life—mobility, utilities, buildings, public spaces, and more.

Explore -

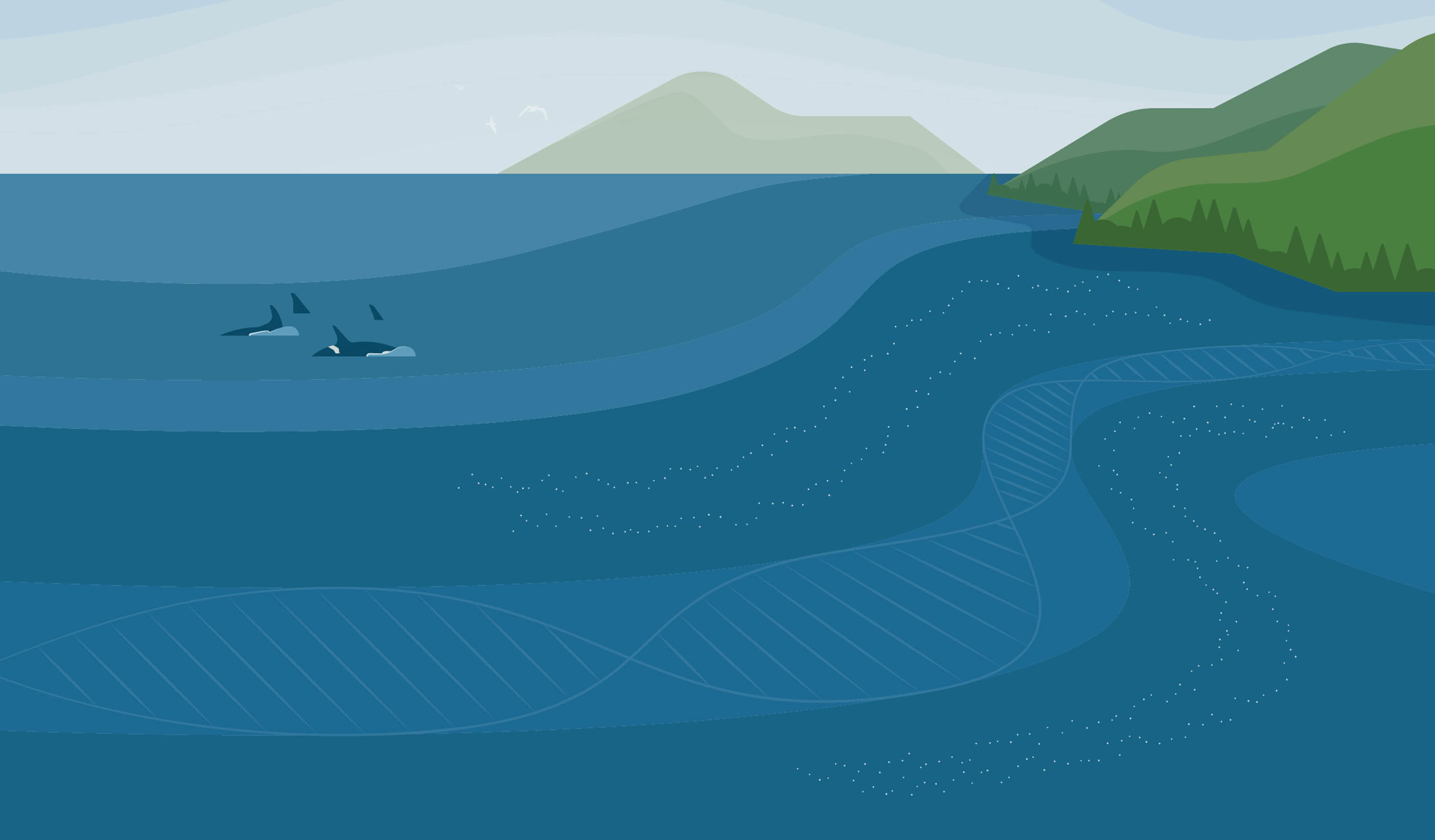

Coastal Resilience

How can we respond to the impacts of climate change on our coastal communities?

Sustainable & Resilient Design

Our environmental challenges are many, but design can provide opportunities for positive change.

Explore -

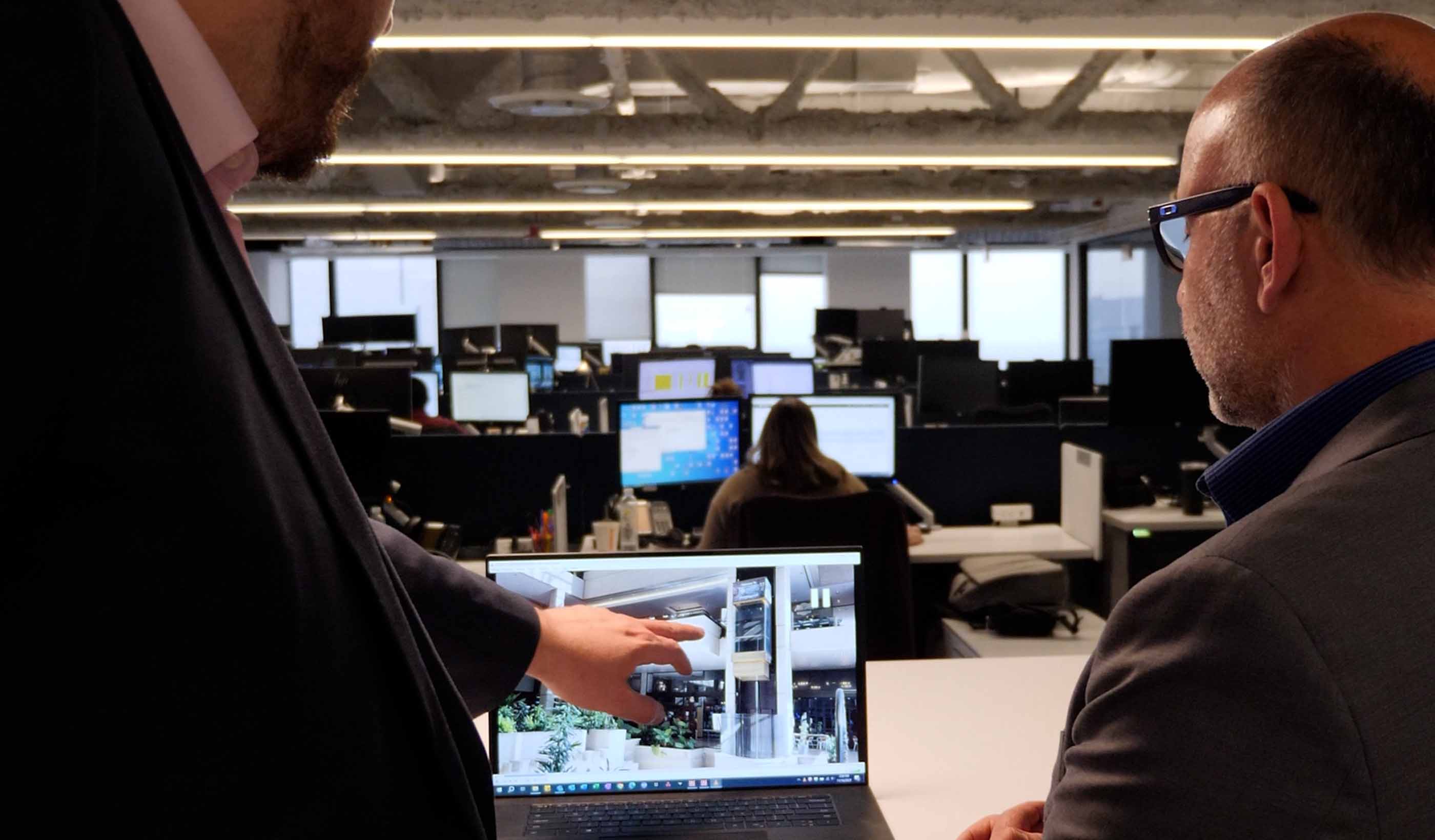

Design Technology

From AI to VR, digital technologies are transforming the design process.

All Ideas

-

Blog Post Large-scale redevelopment projects need to learn from small-scale historic neighborhoods

-

Publication Research + Benchmarkeing Issue 04 | Inclusion, Diversity, and Wellness

-

Blog Post Net zero building design starts with industry climate pledges

-

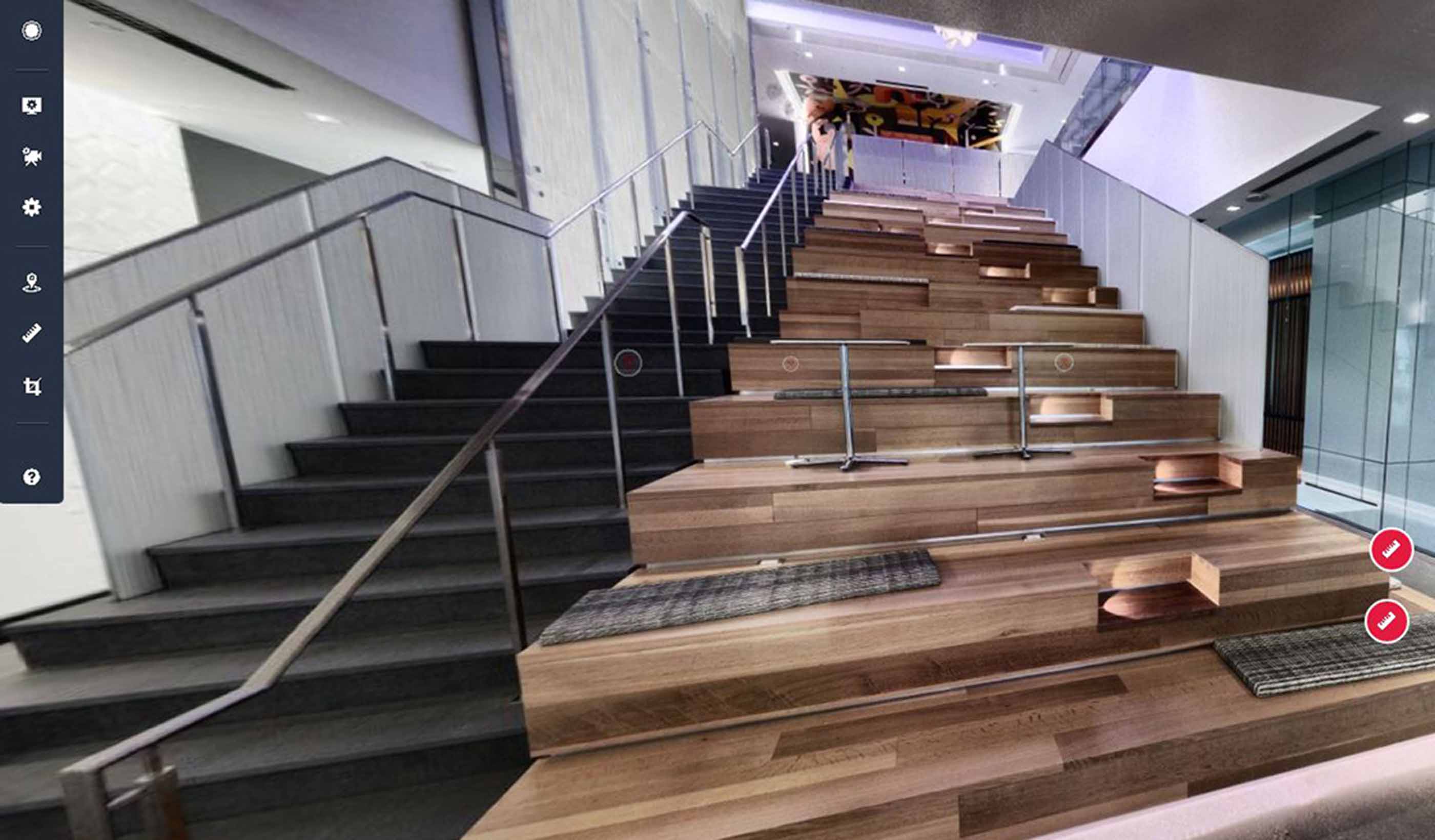

Published Article How virtual reality could transform architecture

-

Video Innovation Insights: How can you effectively deal with market disruption in your industry?

-

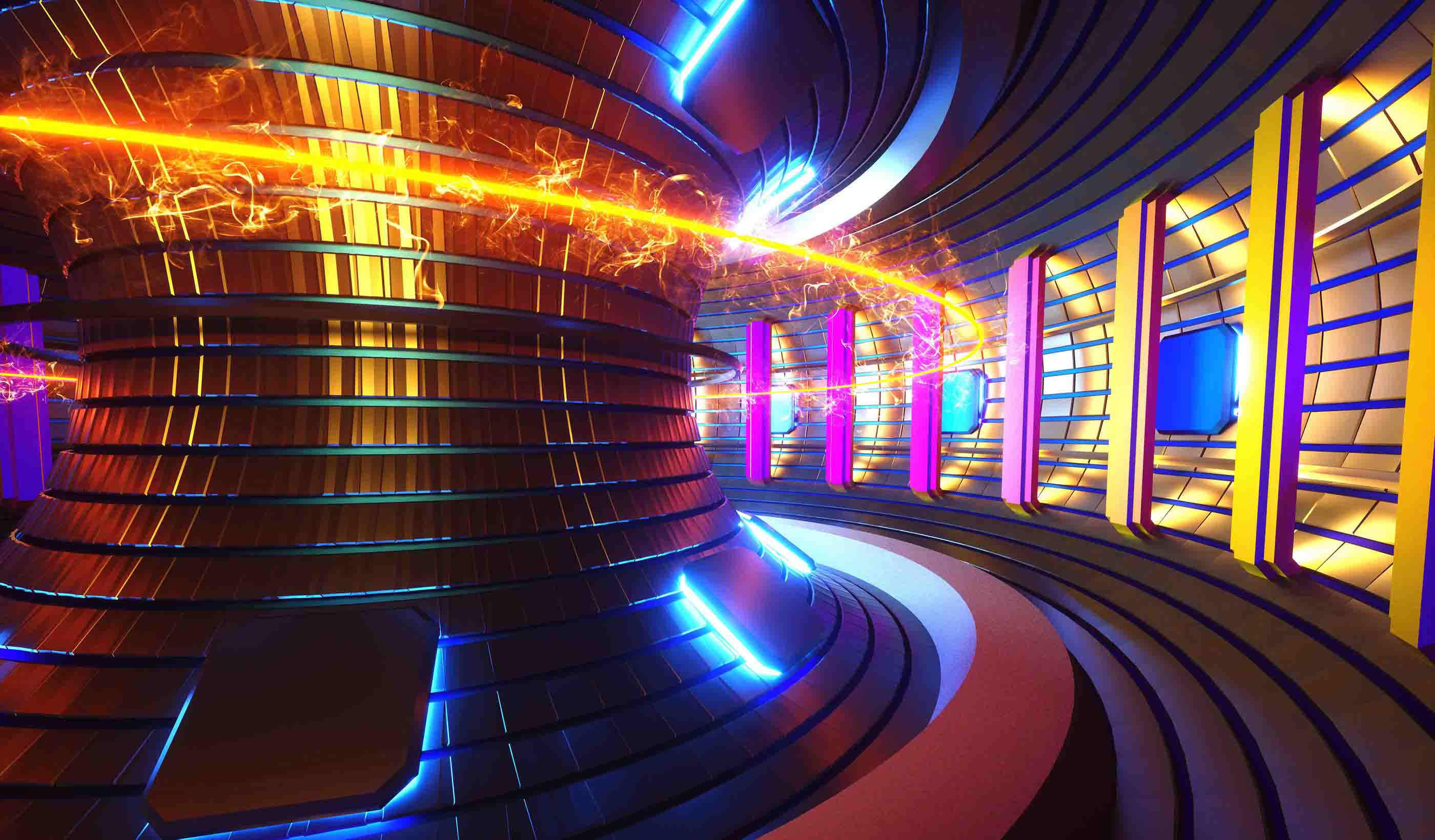

Podcast The Construction Record Podcast™–Episode 342–Stantec’s Jag Singh on small modular reactors

-

Published Article Biden CO2, hydrogen pipeline dreams get wake-up call

-

Video Designing sustainability into Edmonton's LRT expansion

-

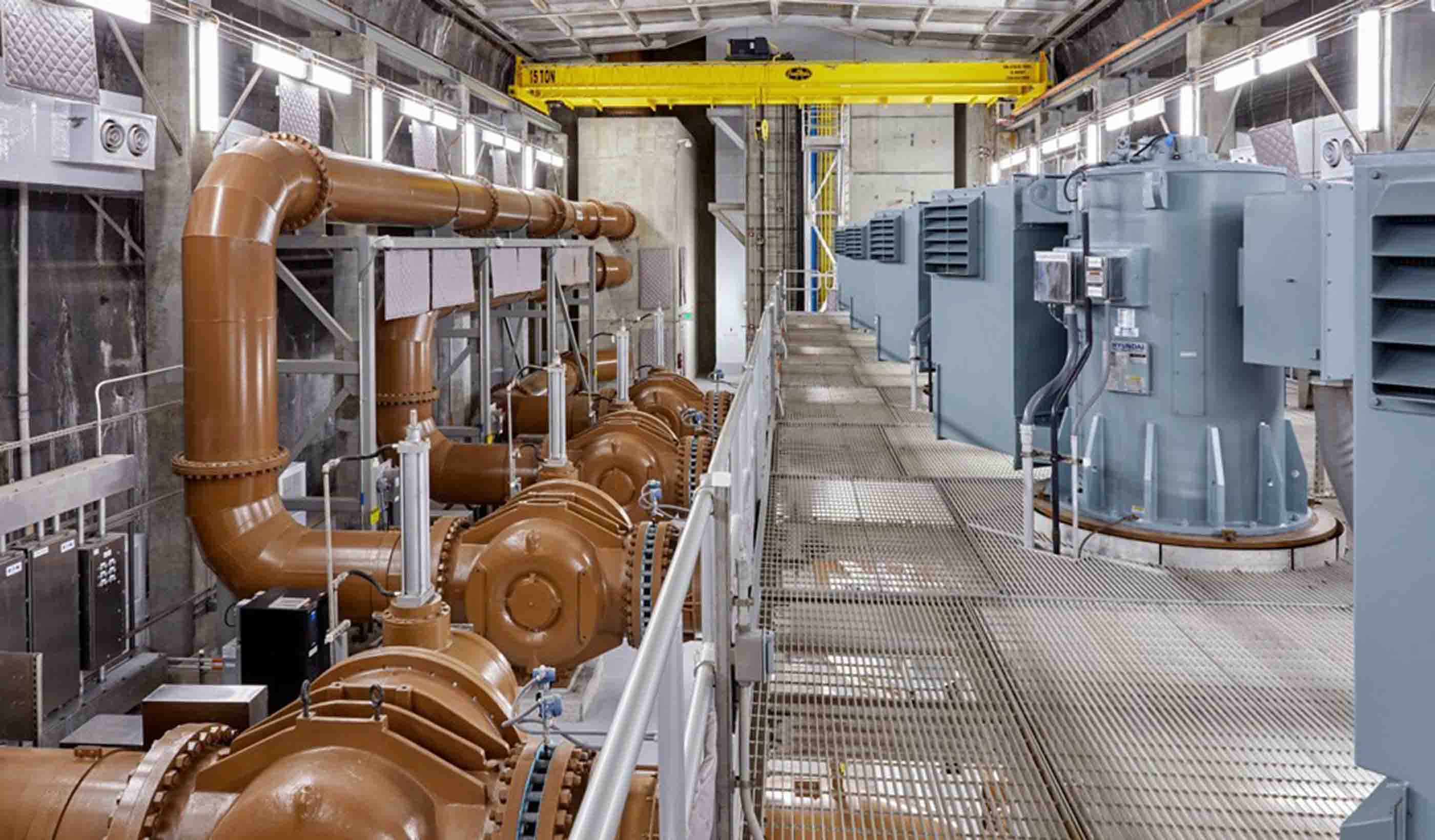

Published Article Four considerations for large pump station design

-

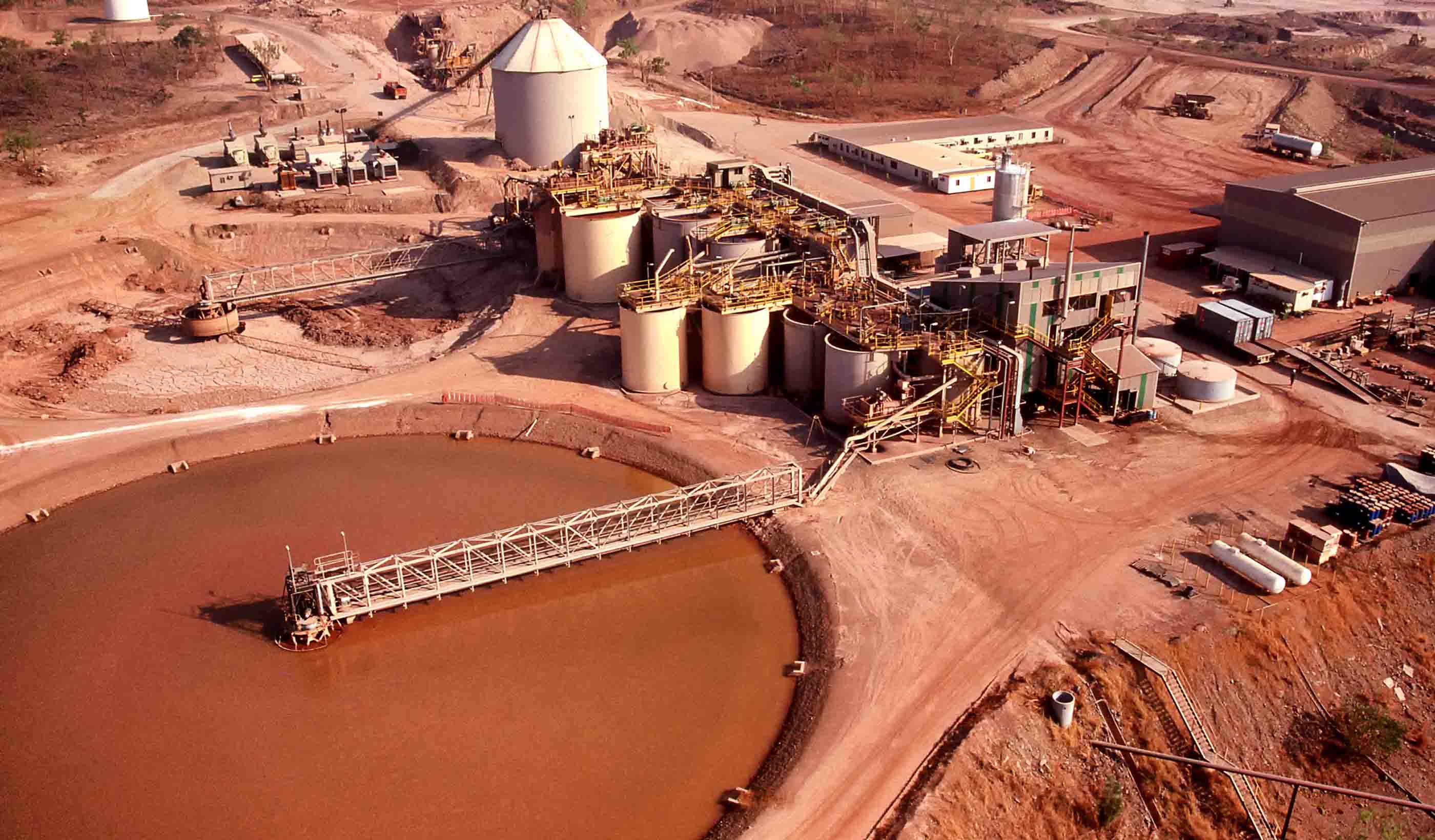

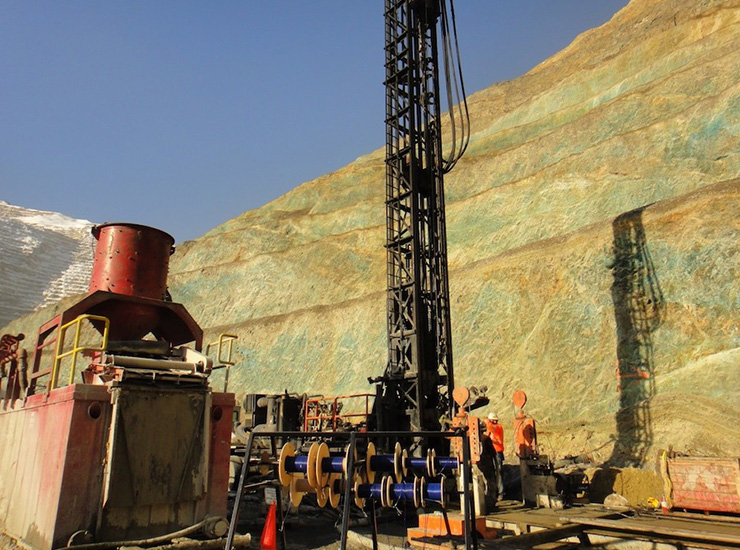

Blog Post Low-carbon energy solutions for mine sites

-

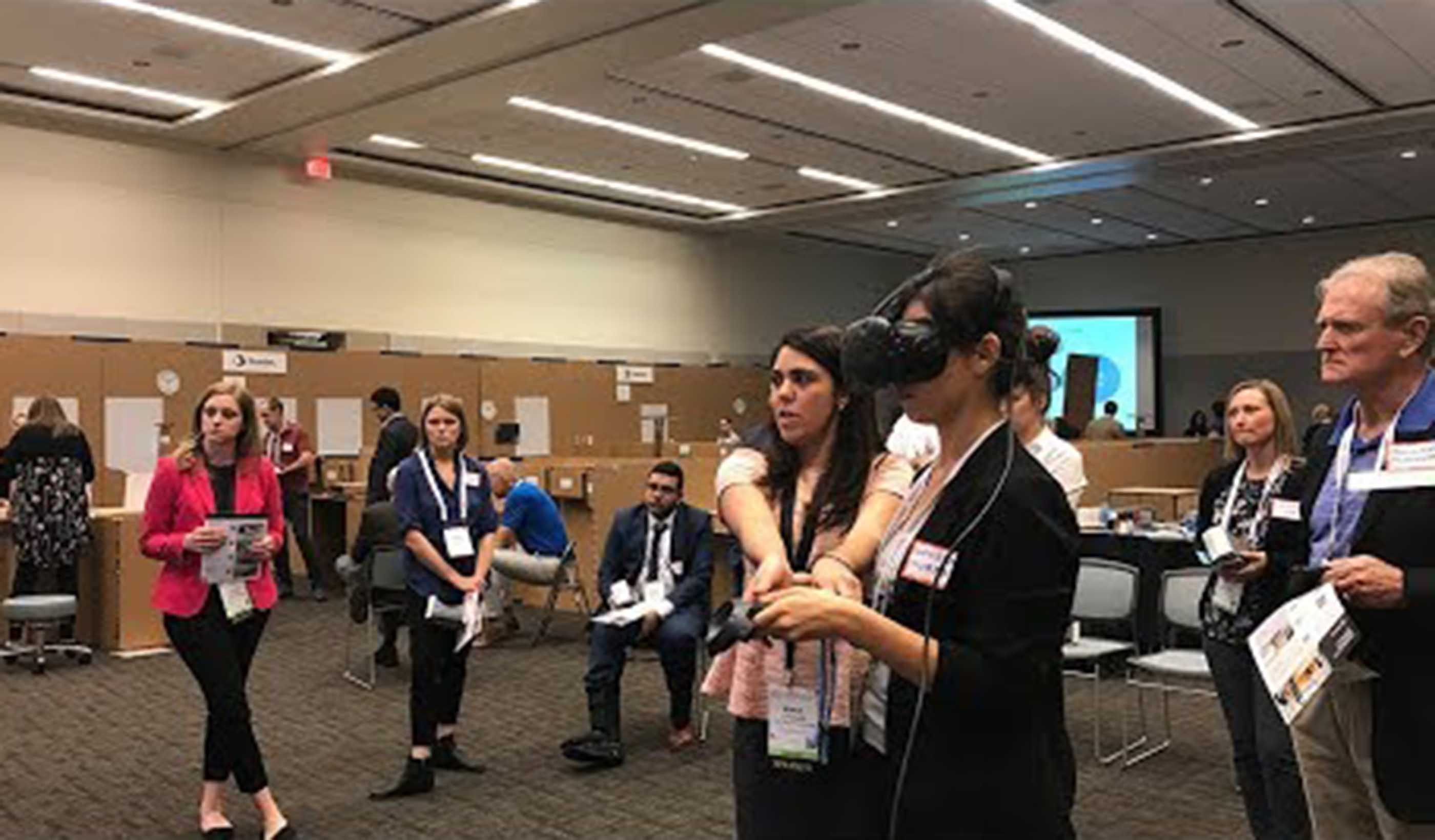

Published Article XR Marks the Spot: Using extended reality to propel innovation

-

Blog Post Preparing for building performance standards in cities

-

USSD 2024 – Stantec Presentation Schedule

-

Video How we decarbonize the mining industry

-

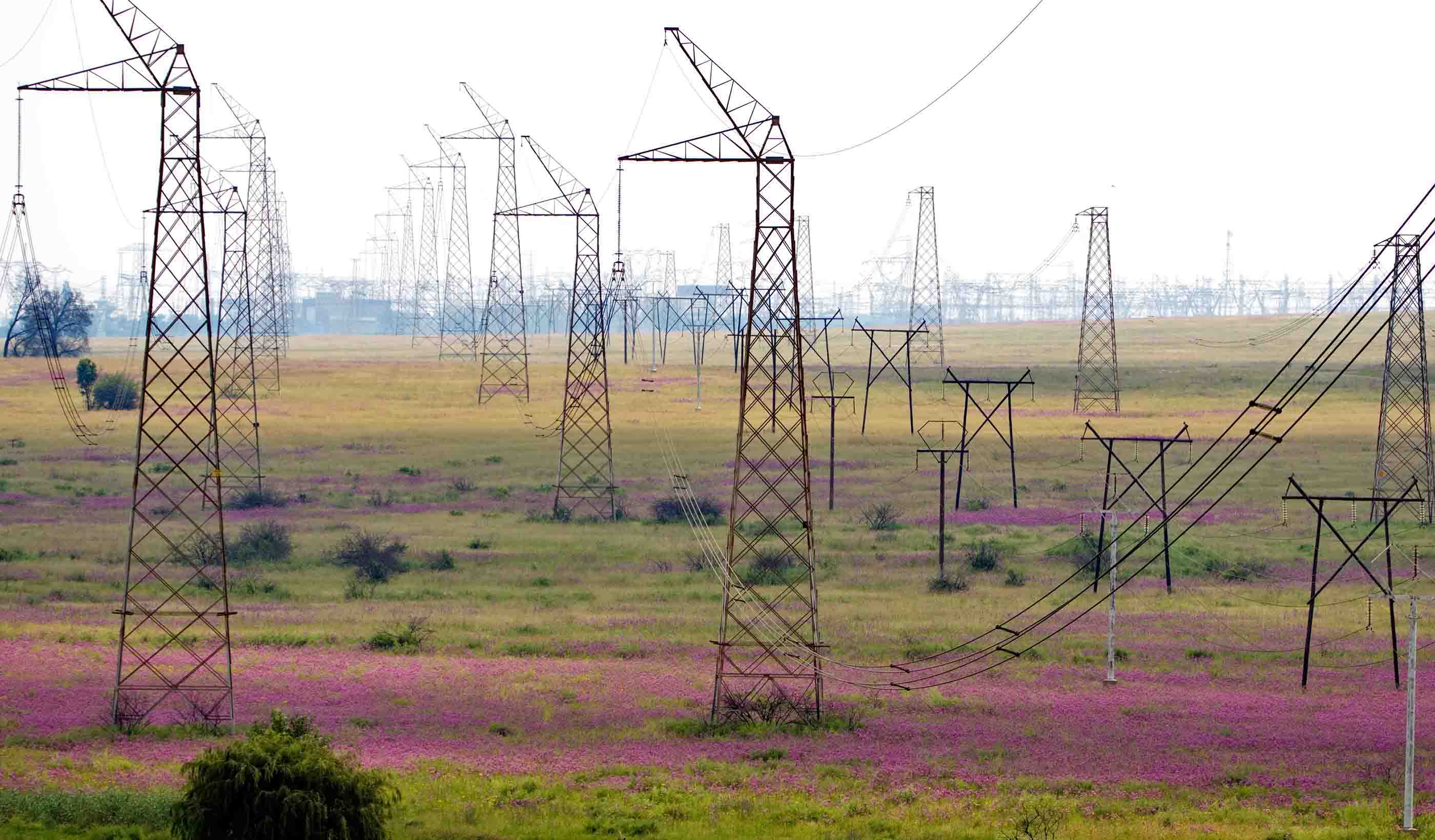

Blog Post How integrated thinking can help boost the energy transition

-

Technical Paper At the intersections of history

-

Publication Design Quarterly Issue 21 | Adaptive Reuse

-

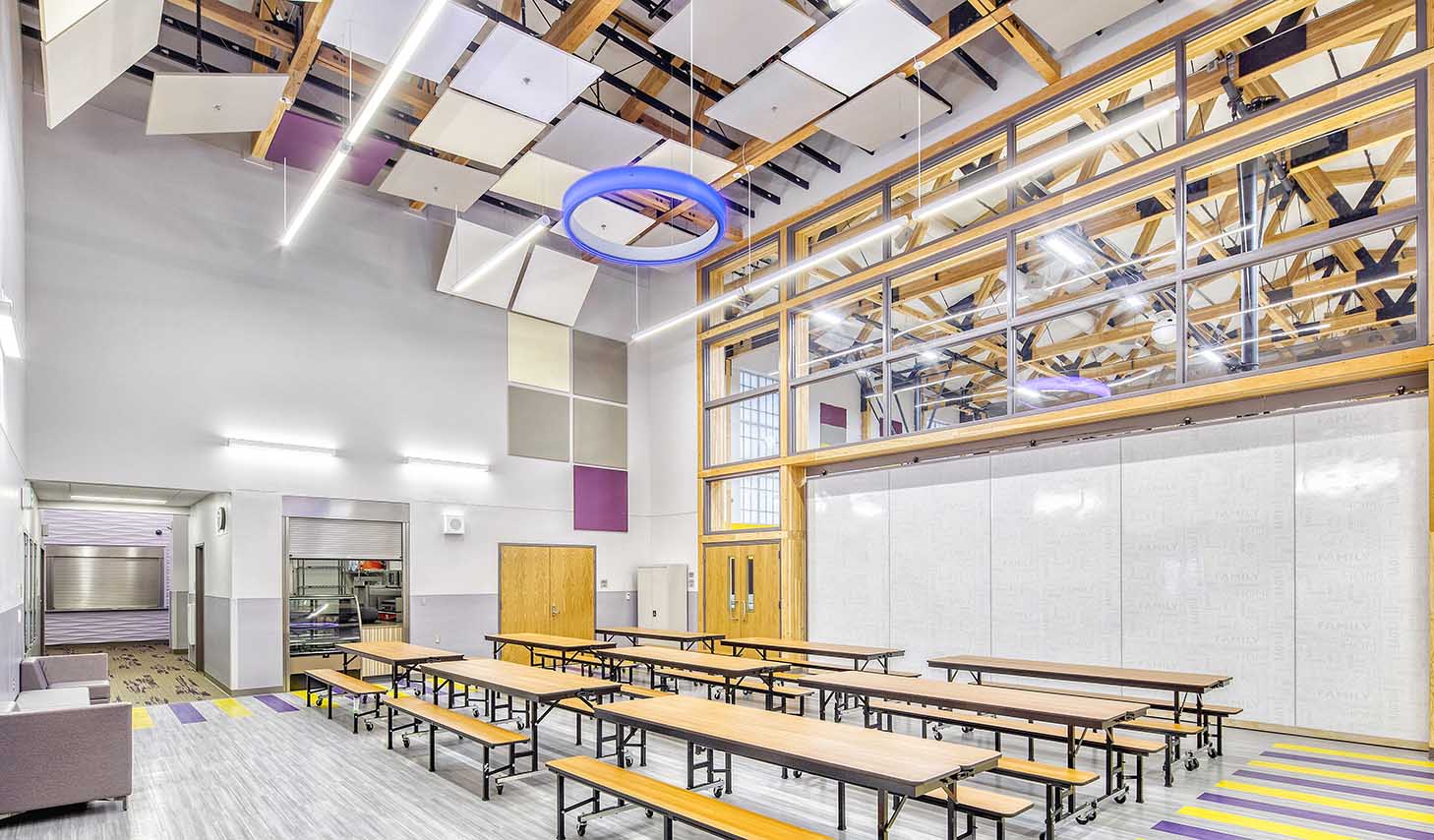

Video Early childhood education in the modern world

-

Blog Post A sustainability roadmap is key in food manufacturing facility scale-up projects

-

Video A road project uncovered a historical archaeology site and one family’s connection to it

-

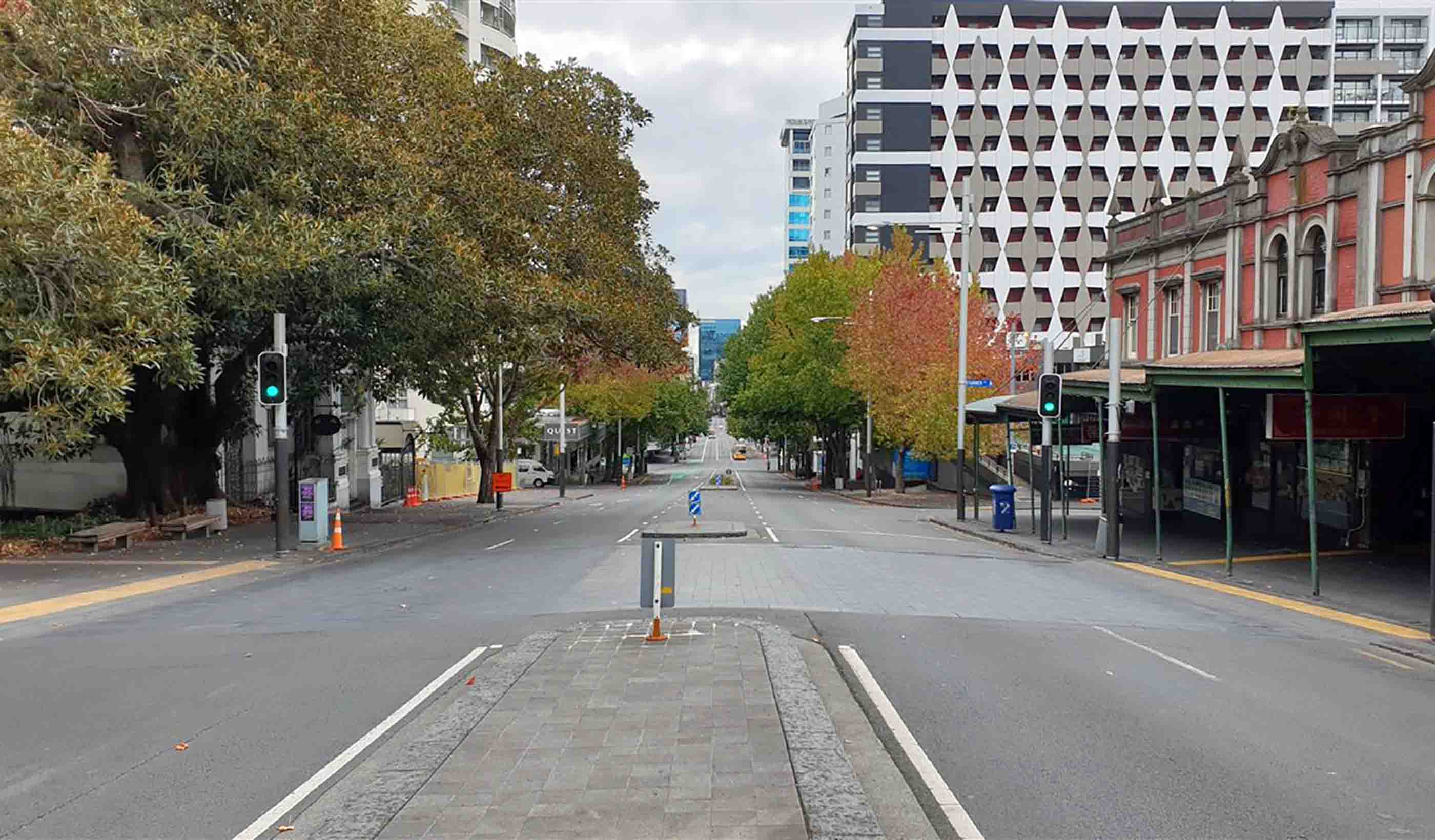

Blog Post Transportation planning strategies for the FIFA World Cup and major events

-

Blog Post Flash floods: Predicting the most difficult type of flood risk

-

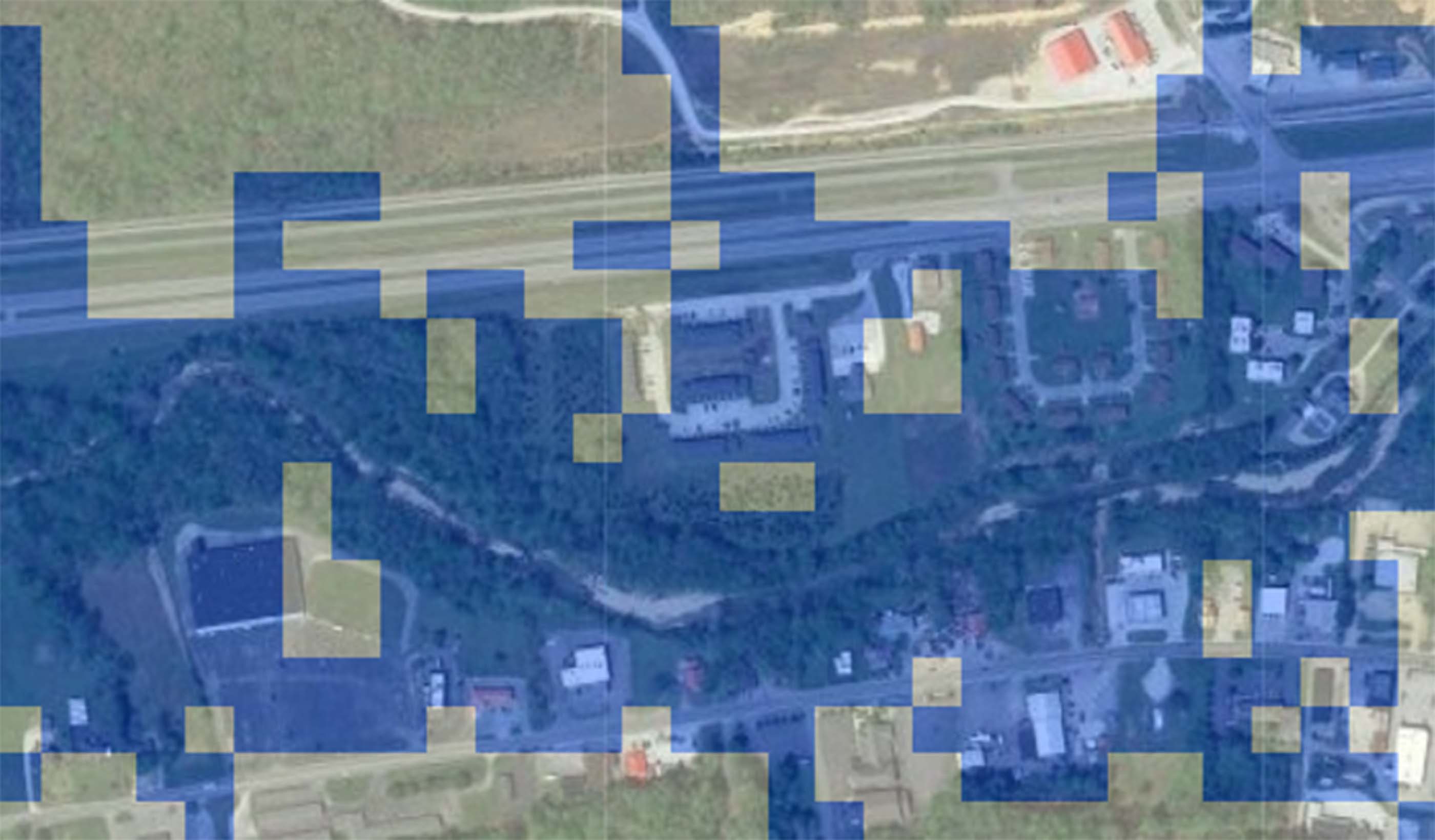

Published Article What's the hype around machine learning and AI for flood mitigation?

-

Blog Post A single electricity market can increase affordability and reliability in Africa

-

Podcast Stantec.io Podcast: The Energy Transition

-

Blog Post 6 tips for designing allied health education facilities

-

Webinar Recording Navigating the storm: Solutions for automation and innovations in operational technology

-

Video Innovation Insights: How can artificial intelligence and data transform the AEC industry?

-

Blog Post Edge data centers can speed your online experience

-

Video Acoustics: Impact of noise and vibration

-

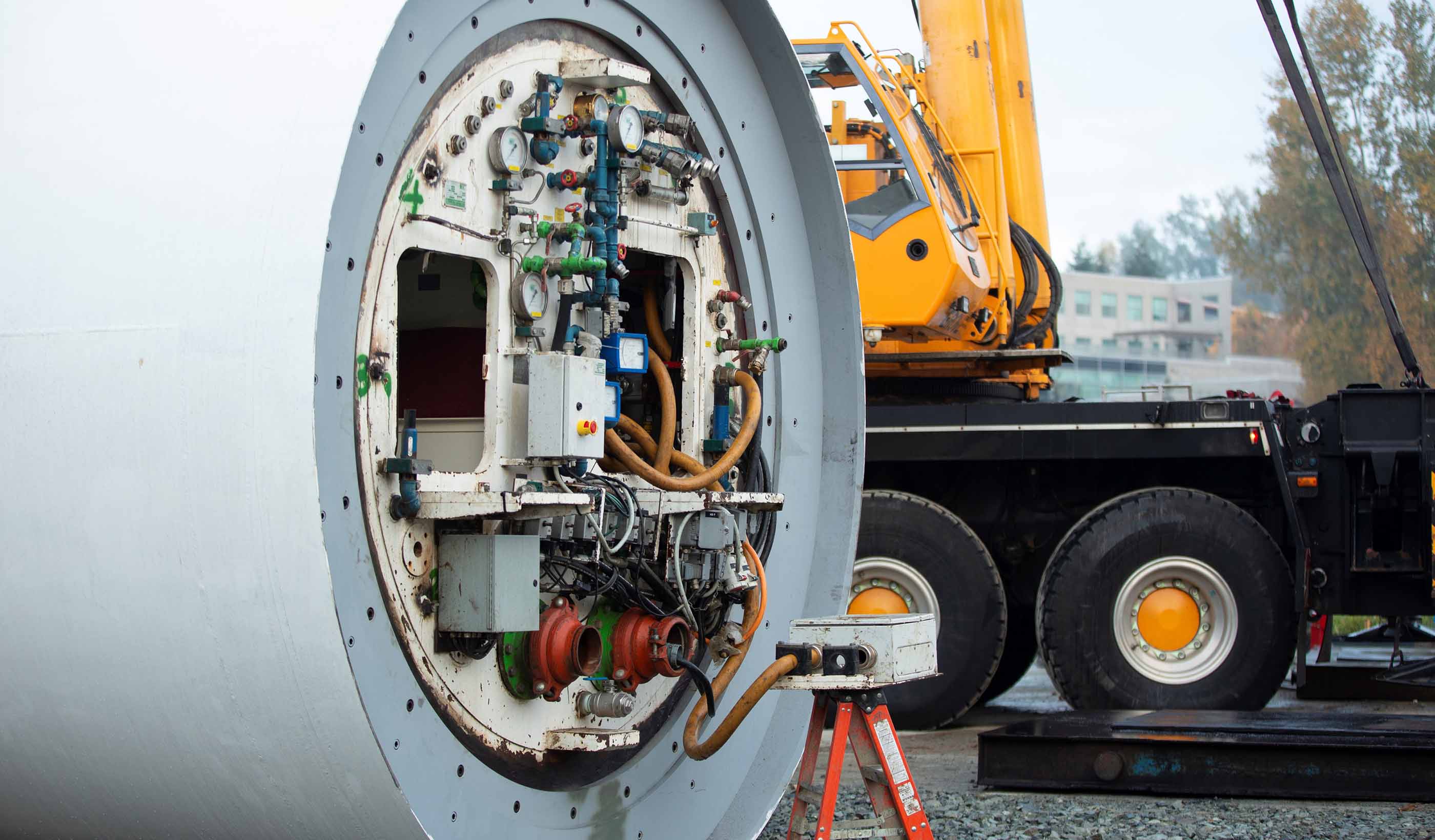

Conference NASTT 2024 No-Dig Show—Stantec Presentation Schedule

-

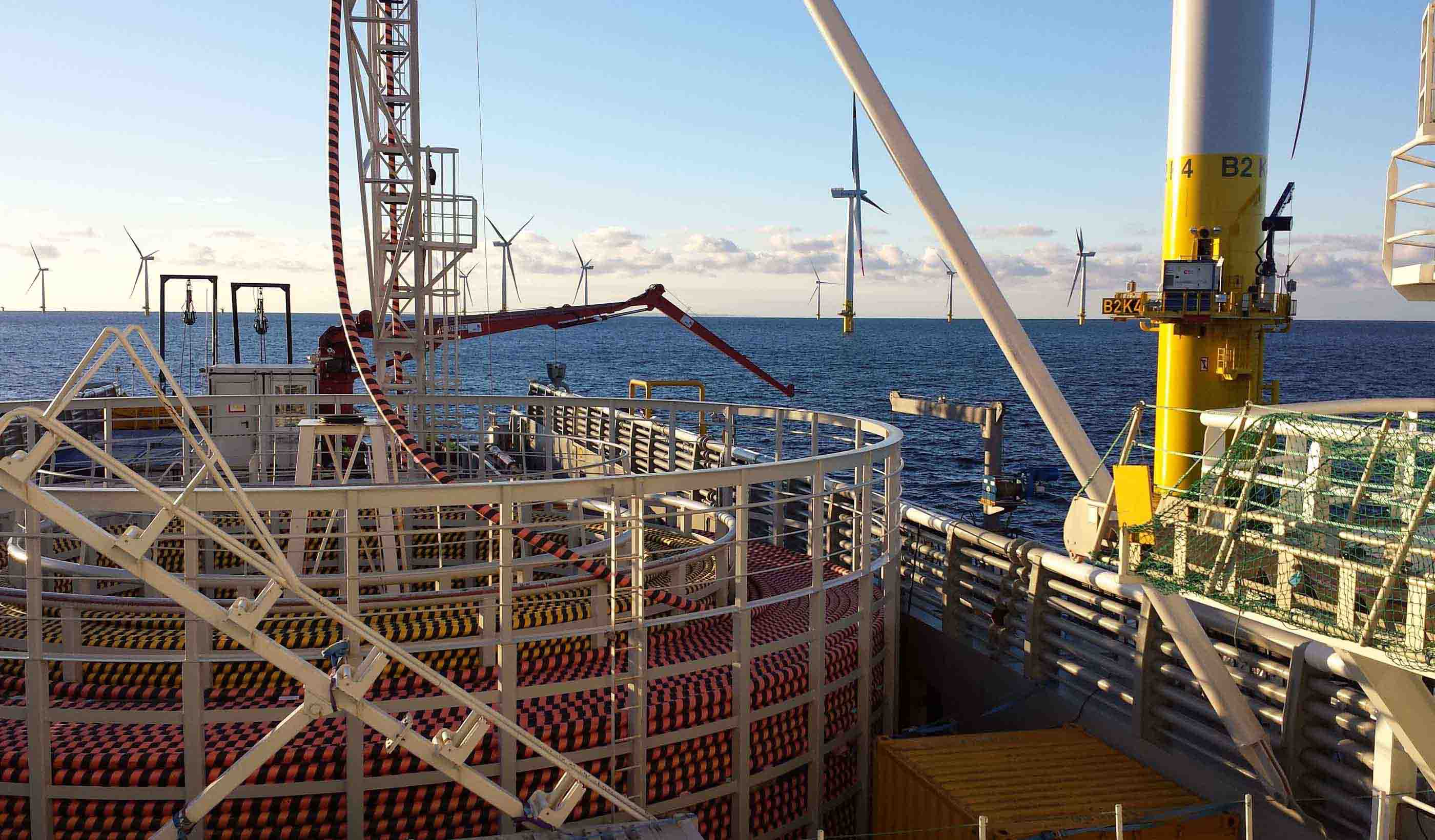

Blog Post Driving decarbonization with renewable floating offshore wind energy

-

Webinar Recording No H2 without H2O

-

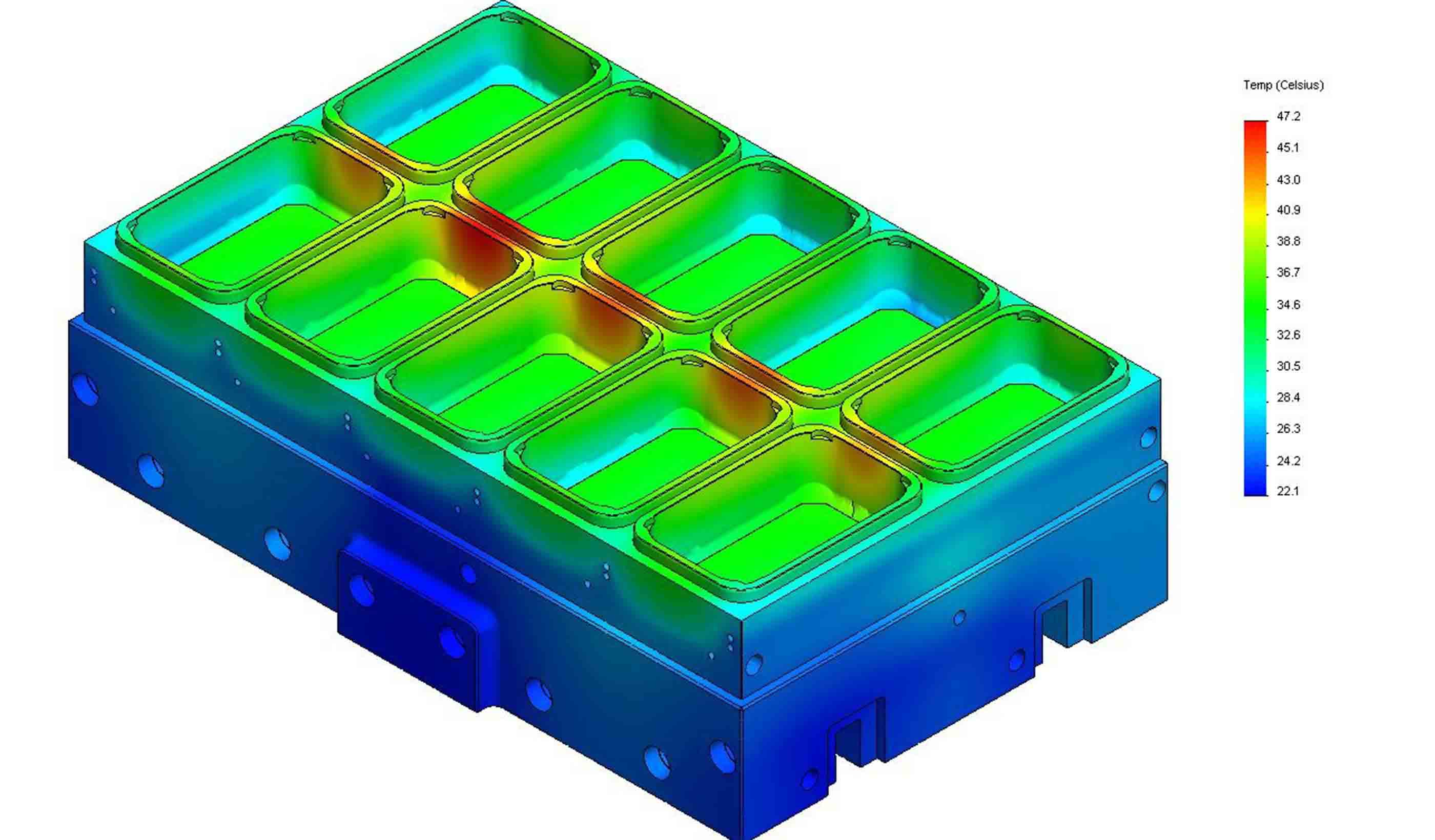

Blog Post 8 ways to cool a factory

-

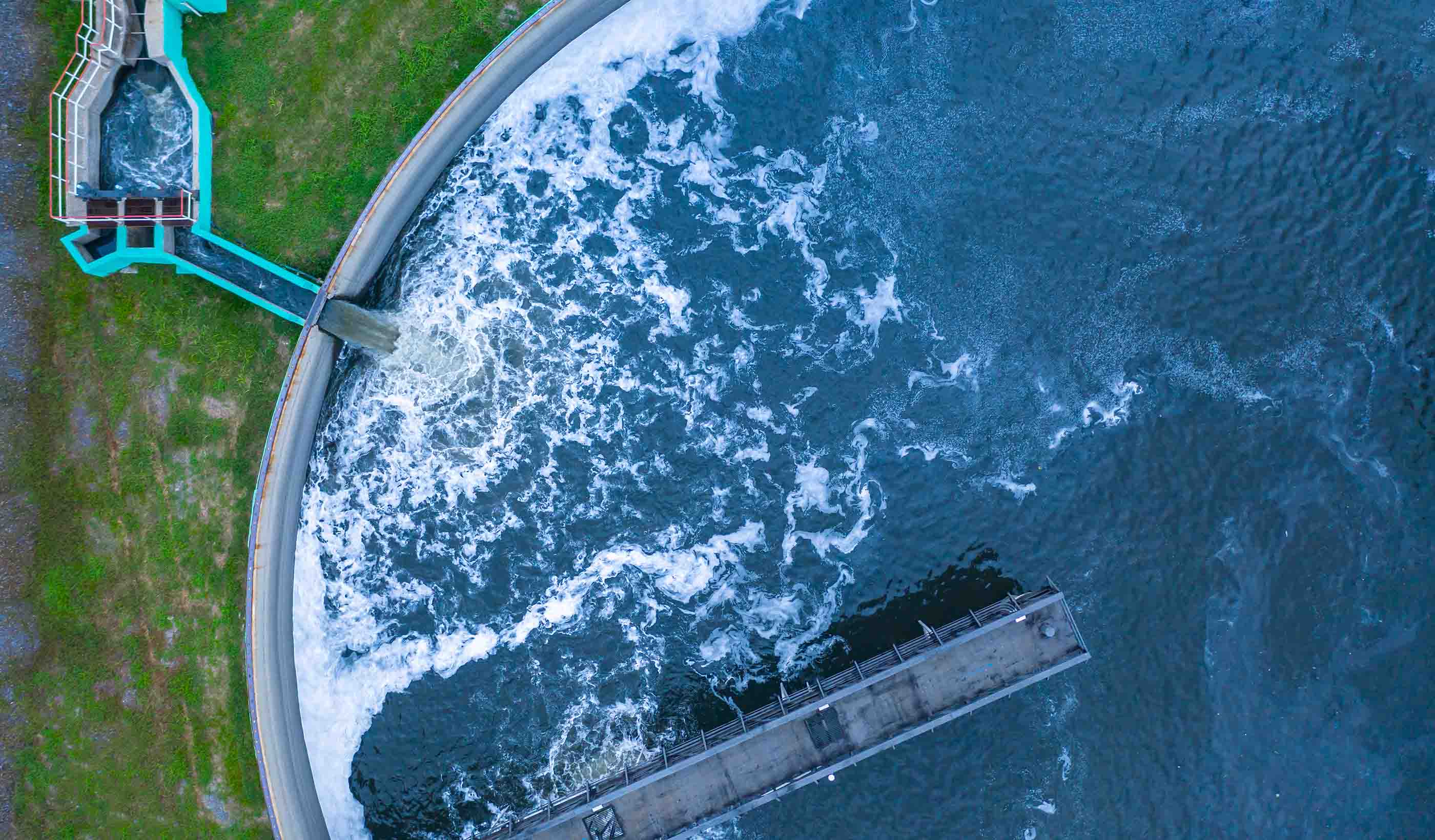

Video Interest is growing for recovering resources from wastewater

-

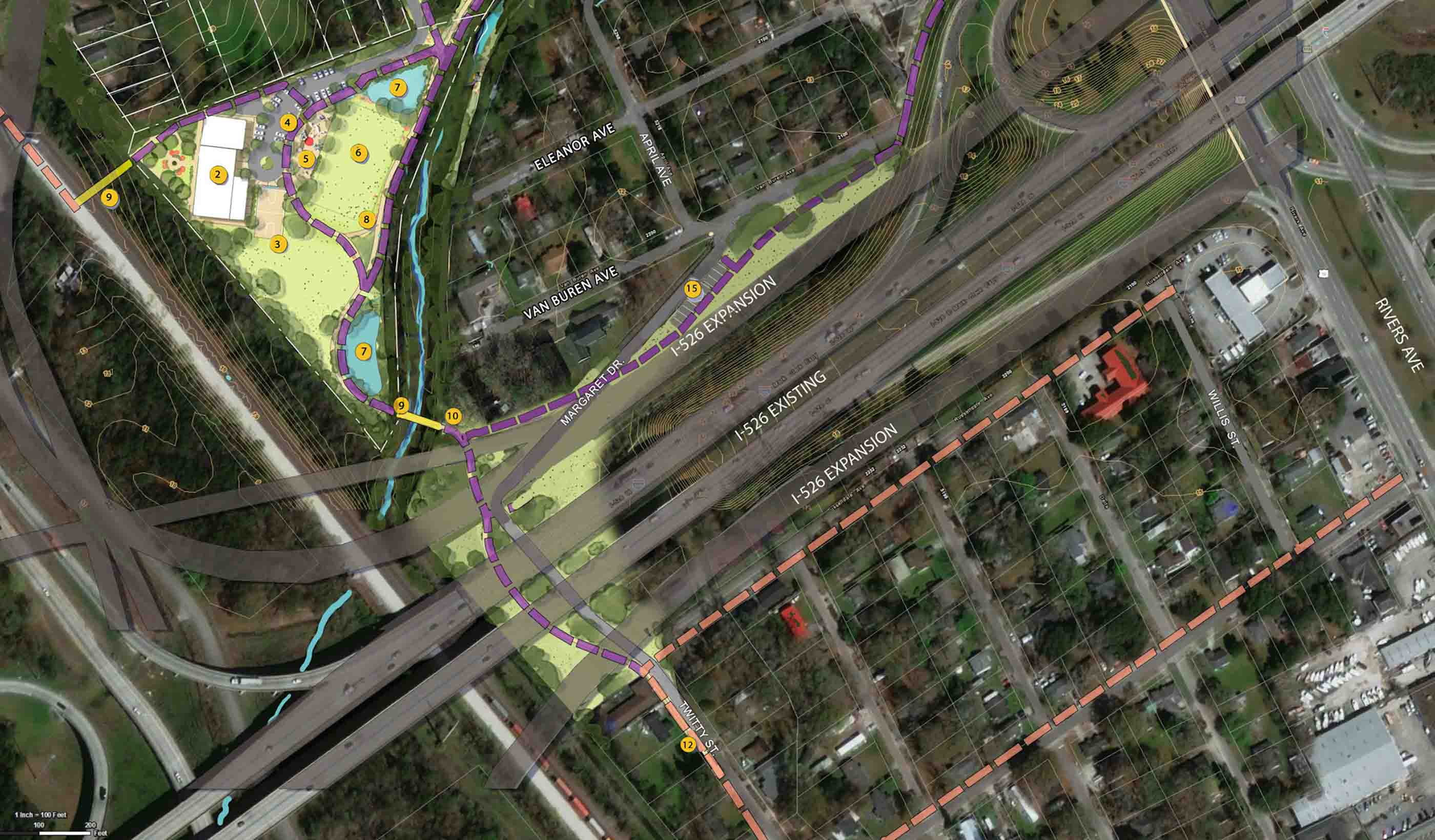

Video Prioritizing environmental justice on a $3 billion transportation megaproject

-

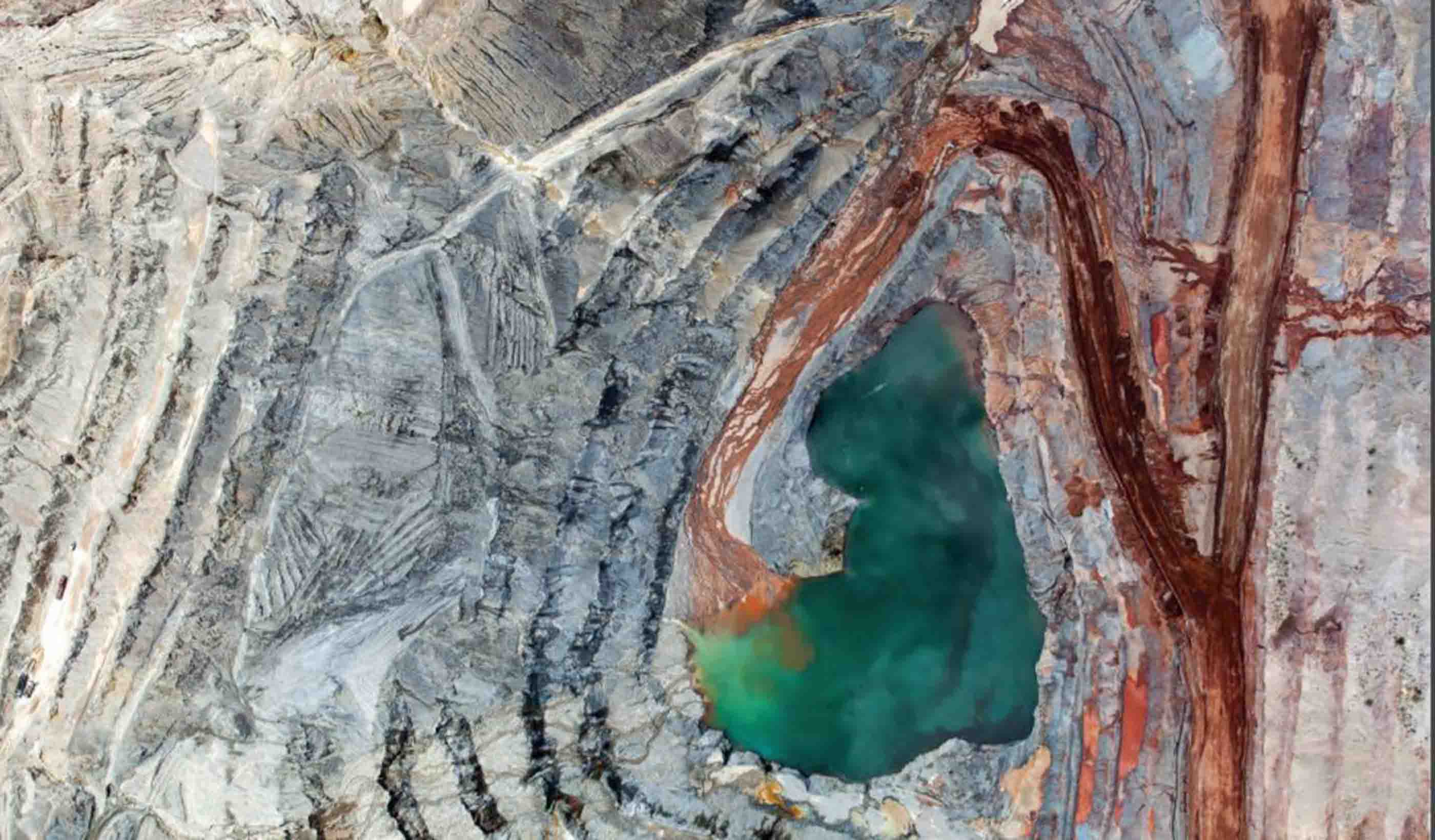

Published Article Mine by design

-

Blog Post Canada PFAS in the mining industry: Potential impacts, risks, and challenges

-

Blog Post A sustainable approach to readying critical minerals for decarbonization

-

Published Article Tech transformation: An inflection point for consultative engineering

-

Conference 2024 WEF Collection Systems and Stormwater Conference—Stantec Presentation Schedule

-

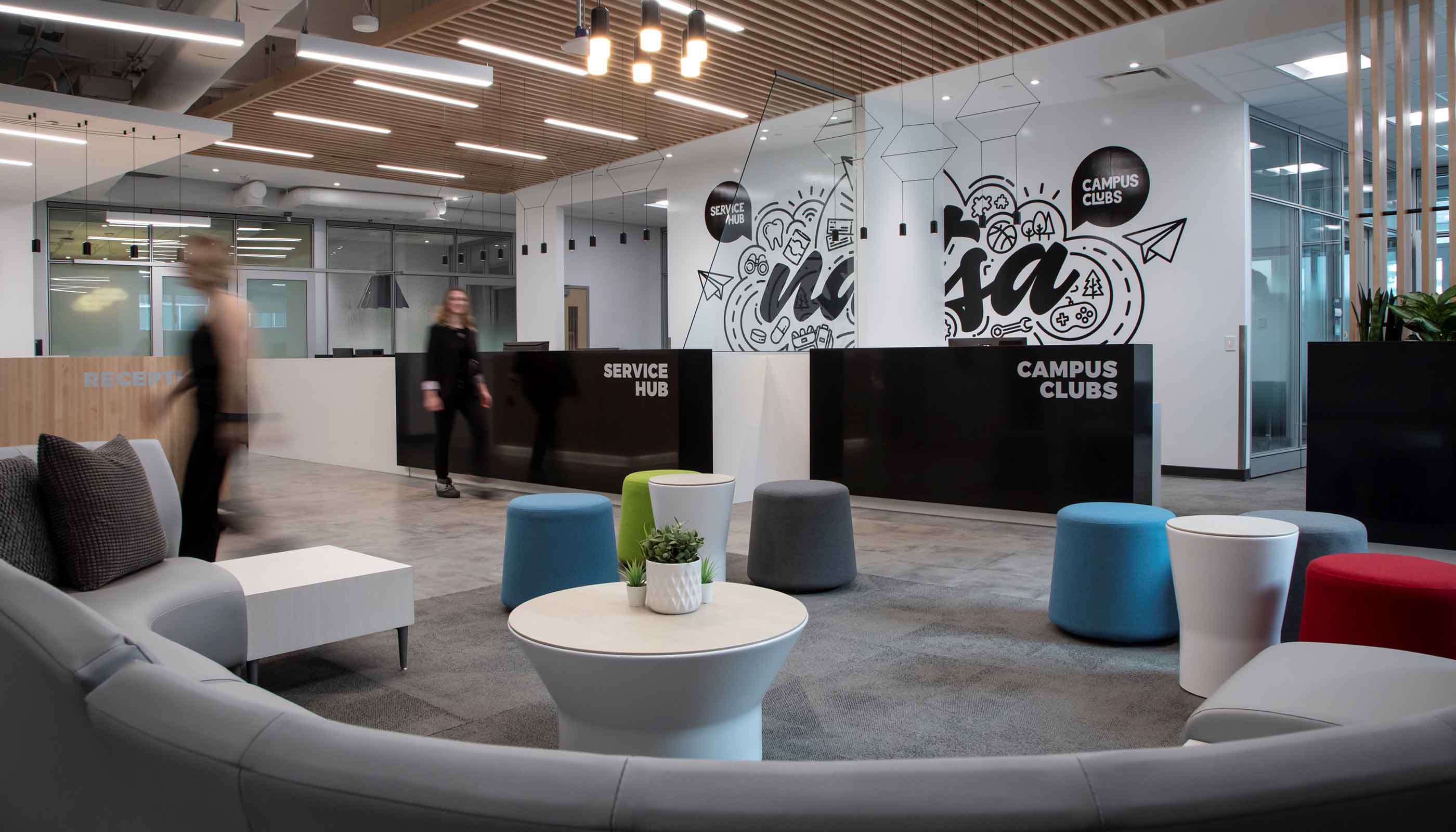

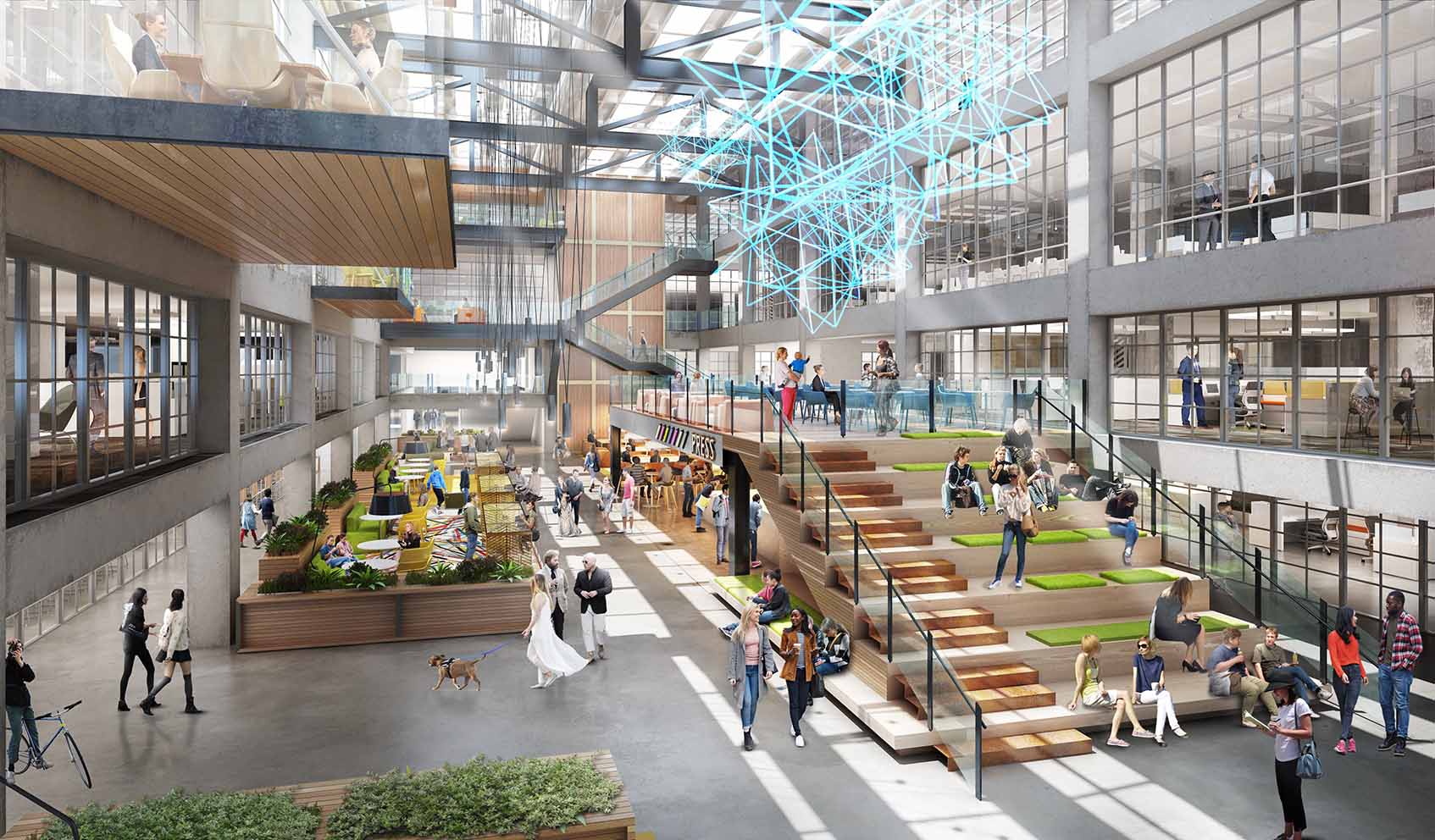

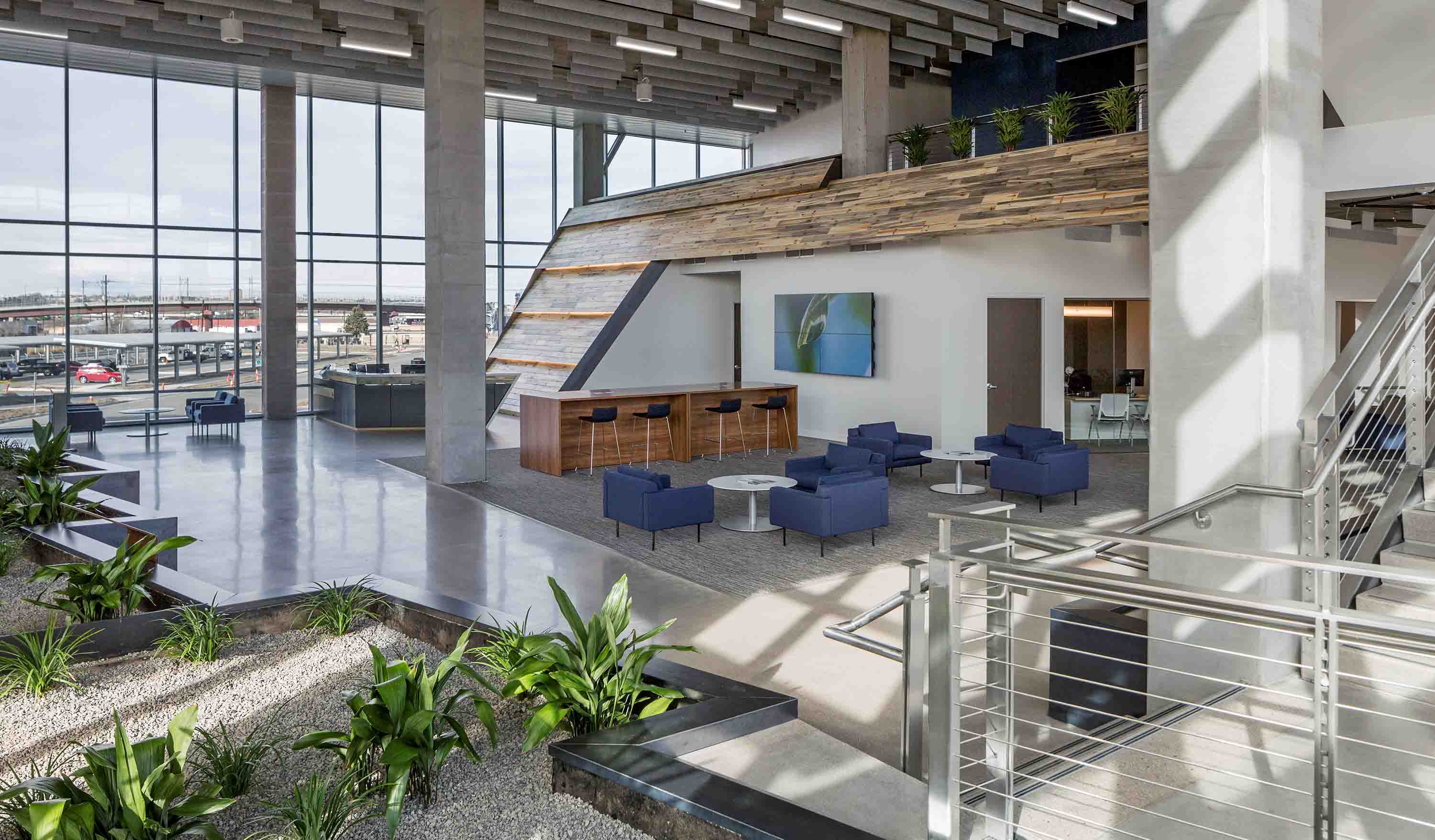

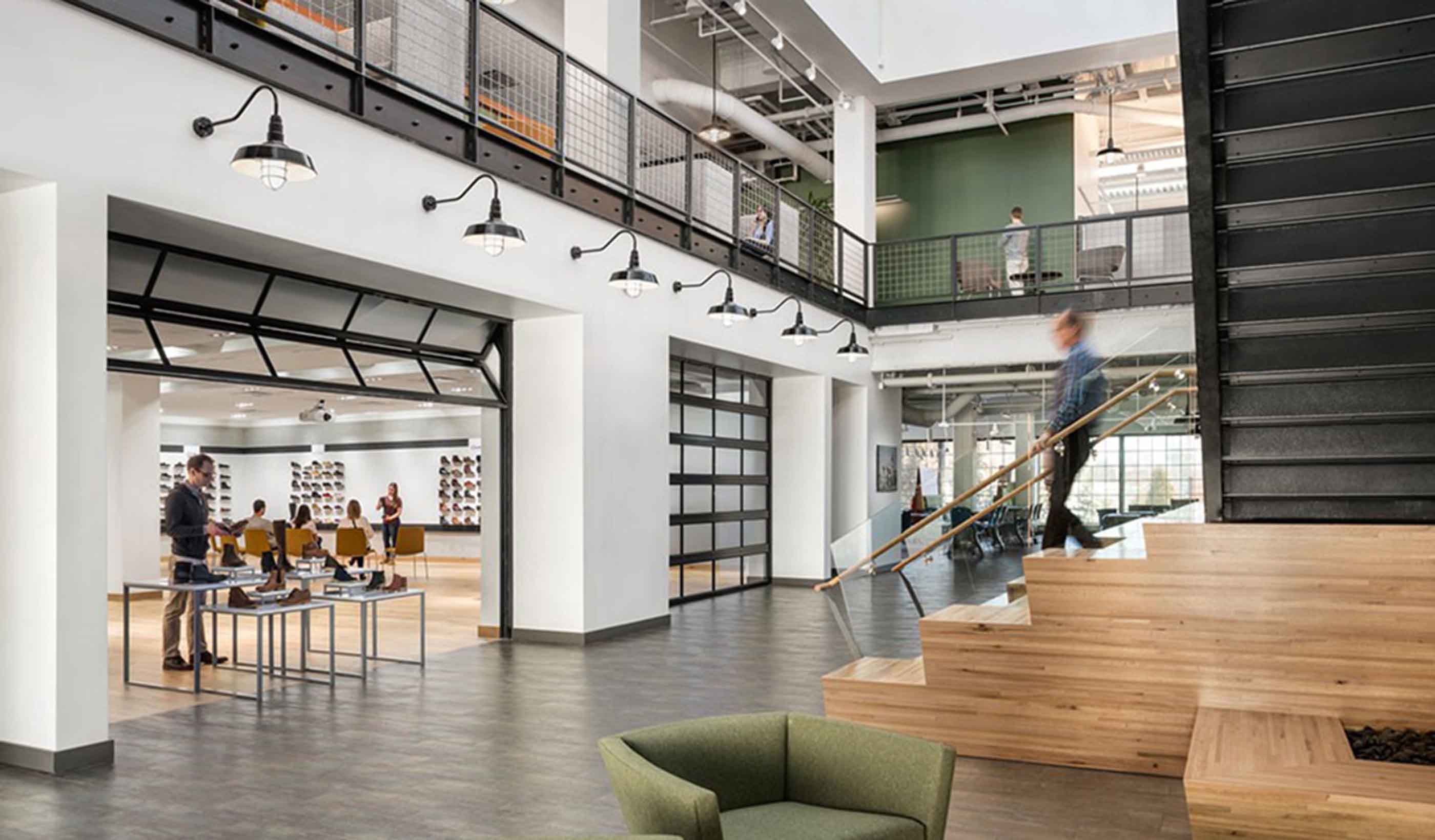

Blog Post Designing a workplace experience to answer: ‘Why should I go into the office?’

-

Video How Albany converted an underused highway ramp into a city park

-

Blog Post Can we recycle captured carbon to produce green hydrogen and biomass?

-

Published Article 3 things to consider when creating a lead service line inventory

-

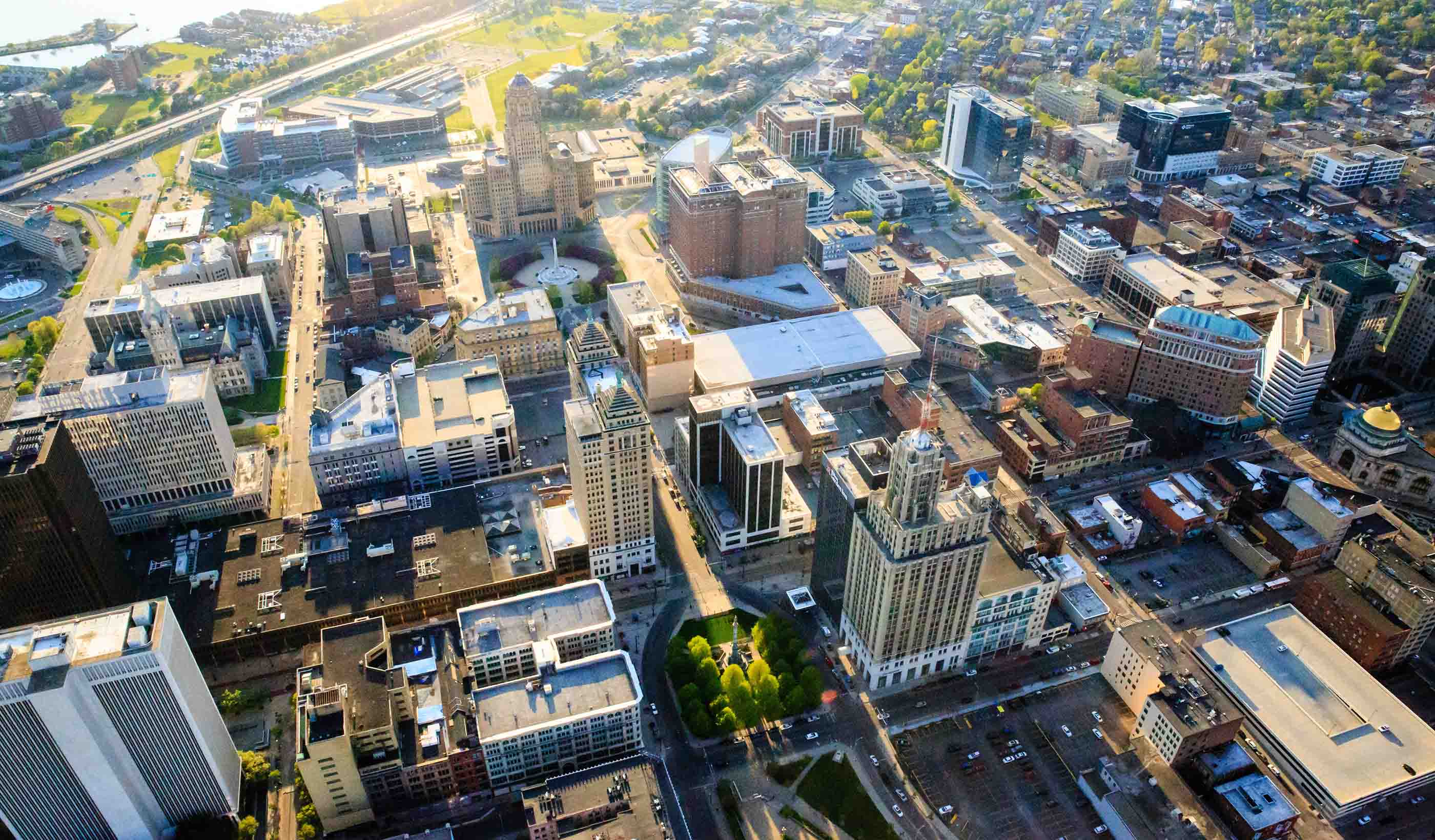

Published Article Live, work, play: Office building conversion practices for downtown revitalization

-

Blog Post Small modular reactors: Driving energy security with nuclear power

-

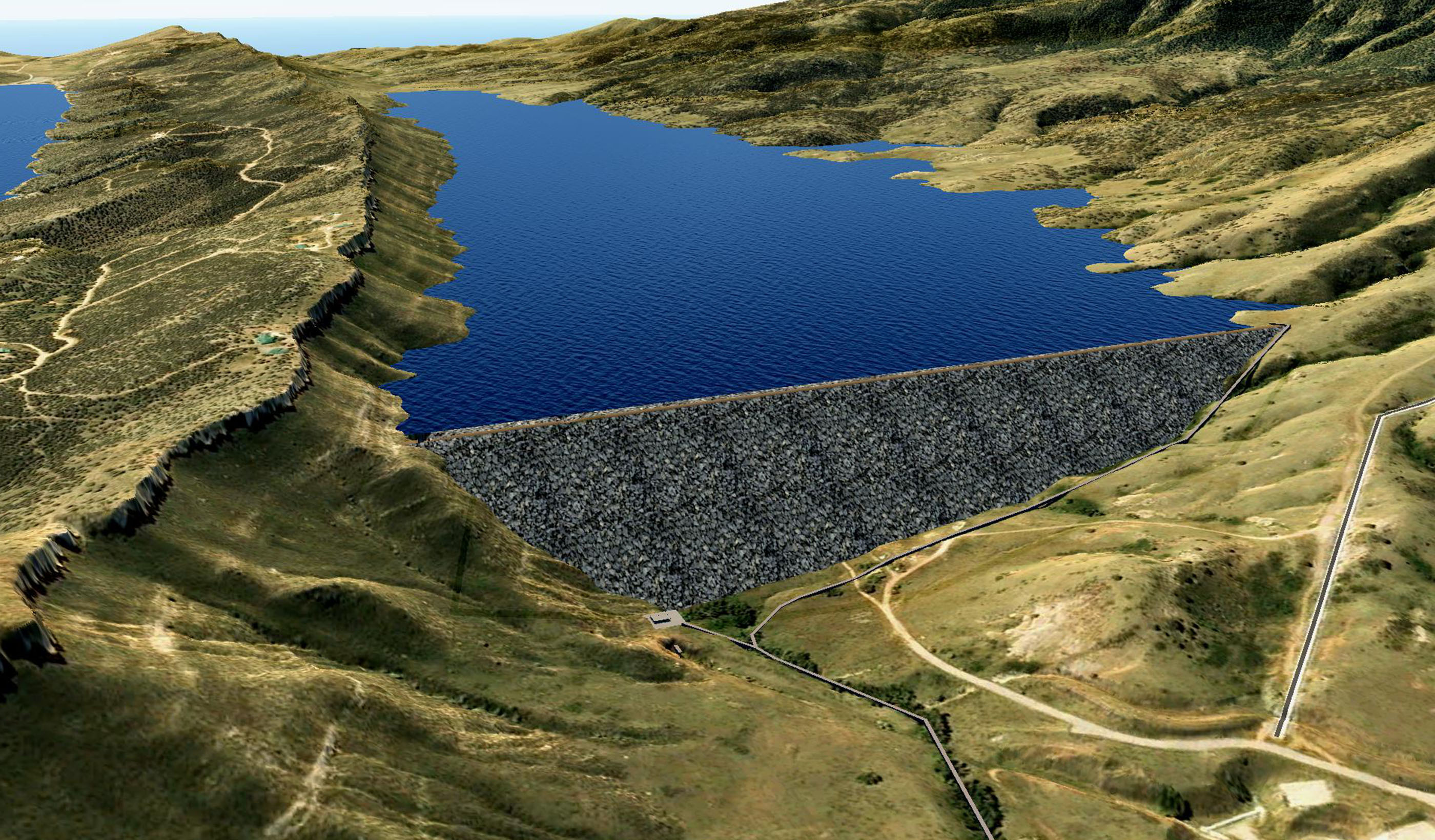

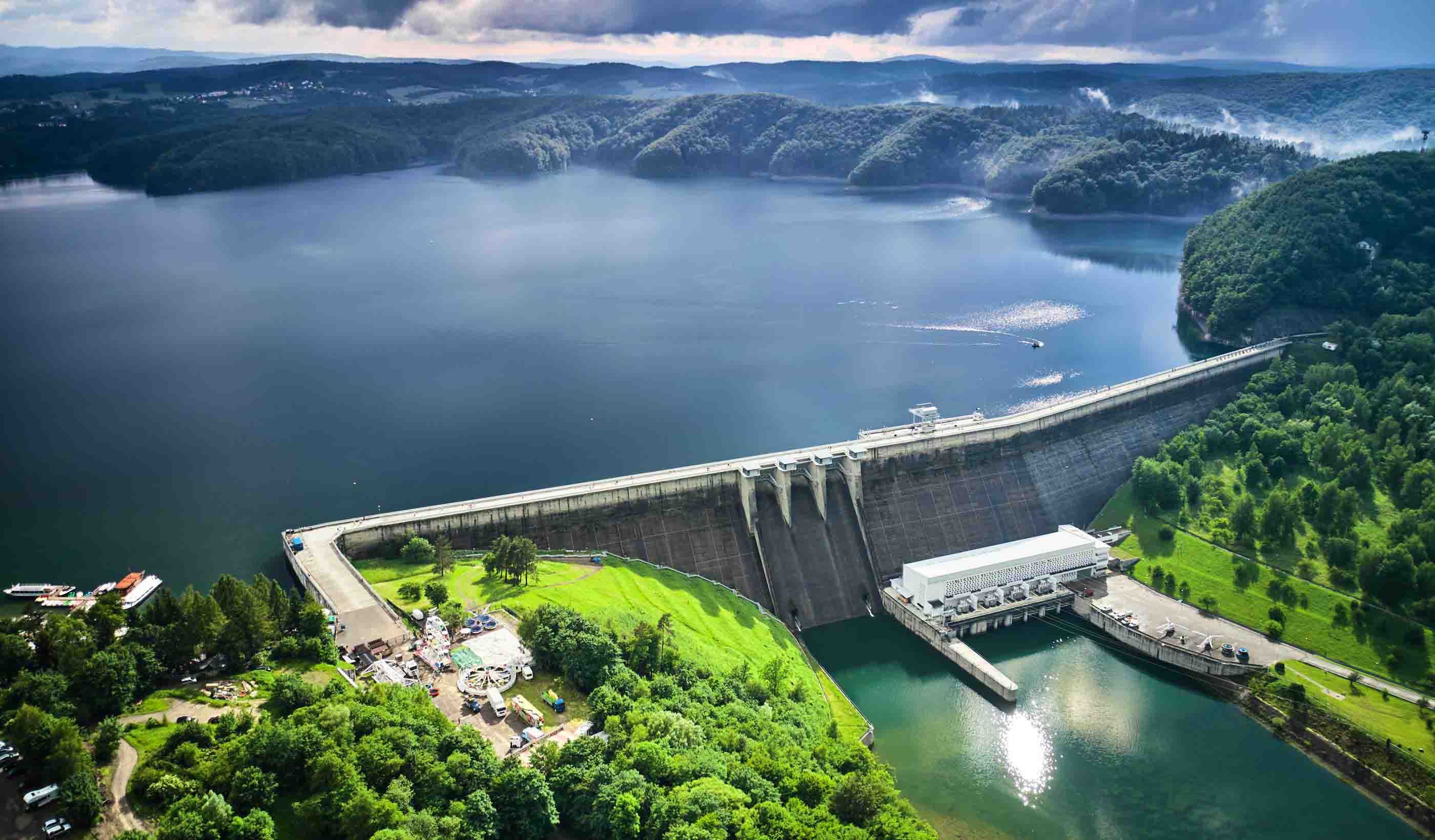

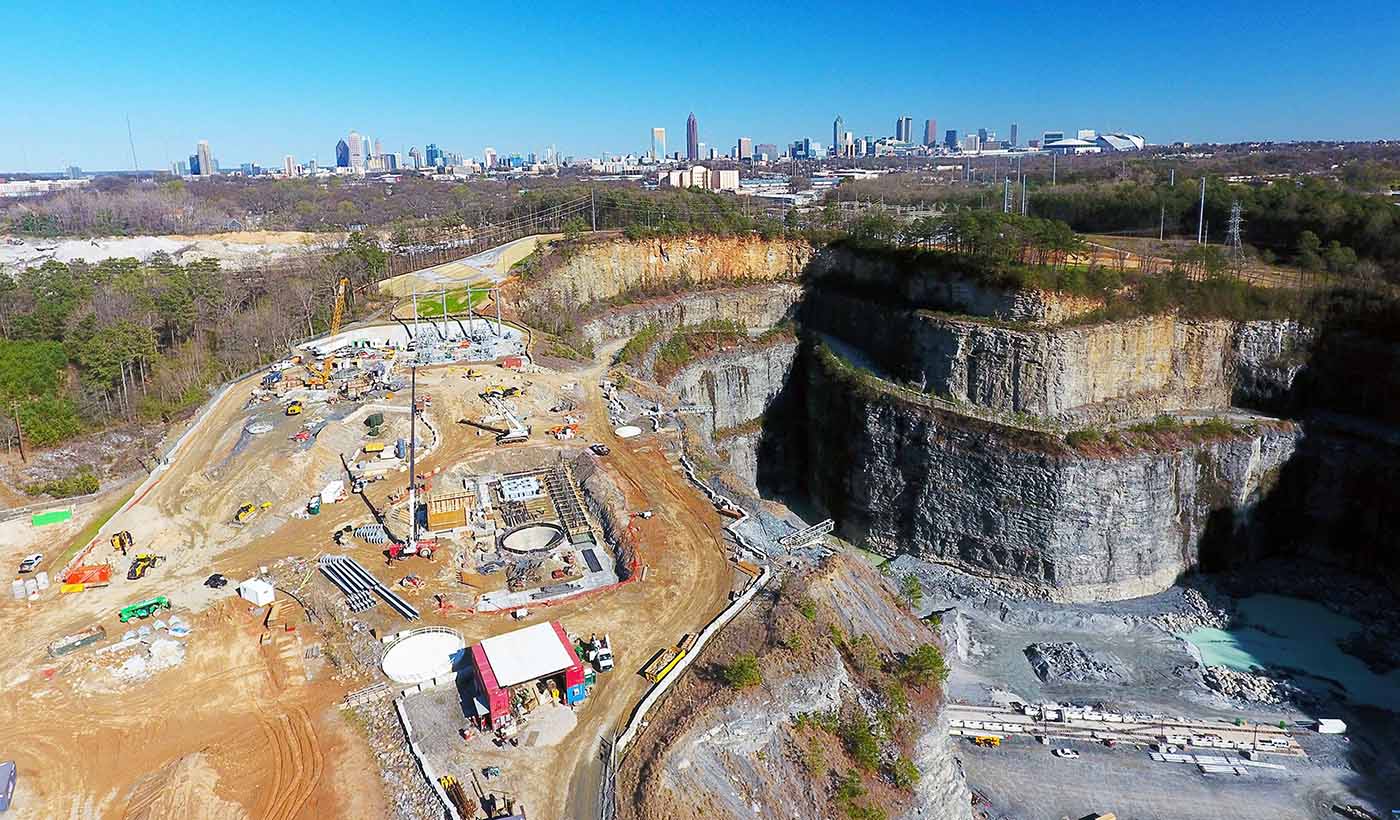

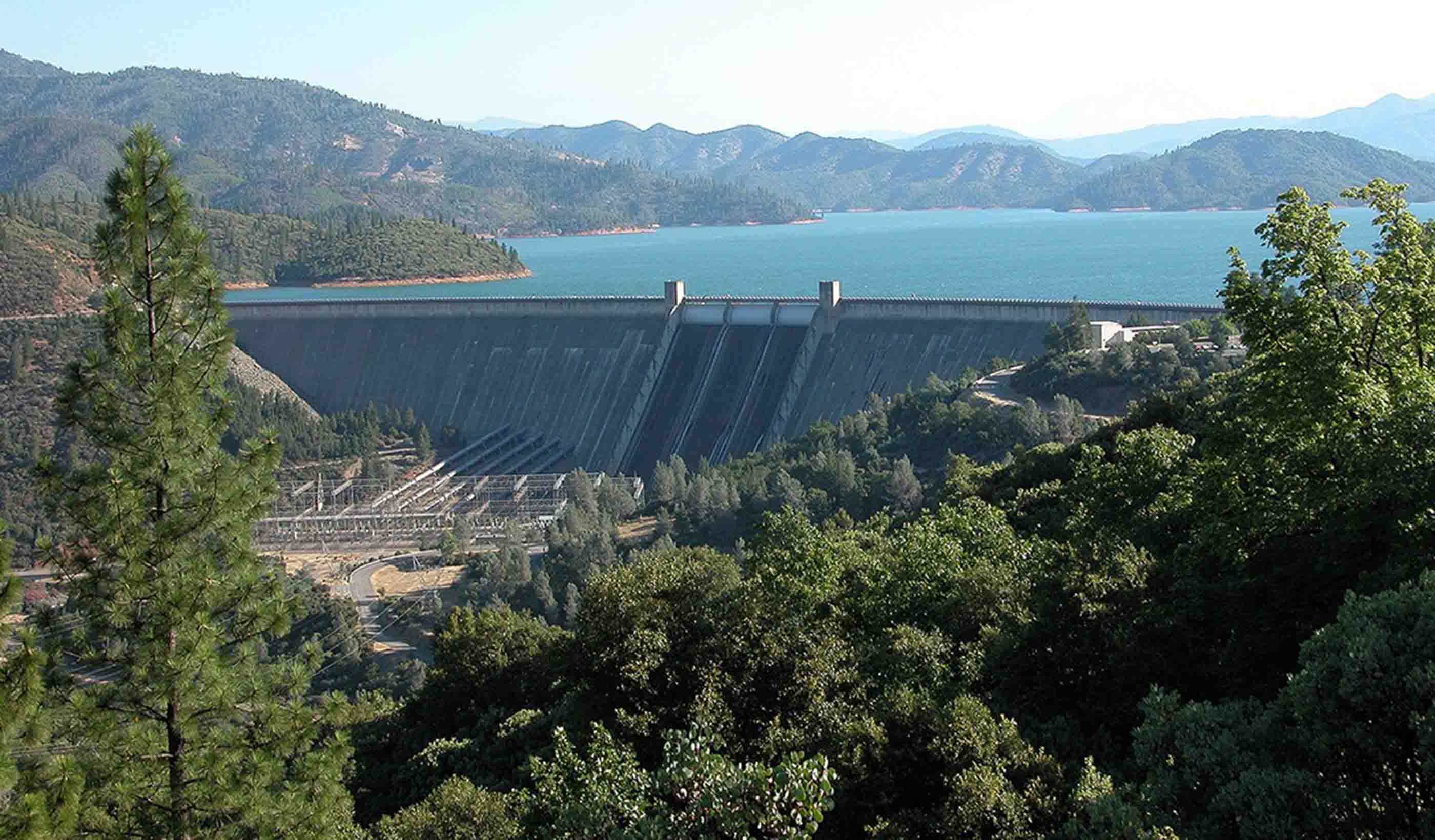

Published Article Denver Water increasing height of Gross Reservoir Dam

-

Podcast Stantec.io Podcast: Advanced Tech and Reality Insight

-

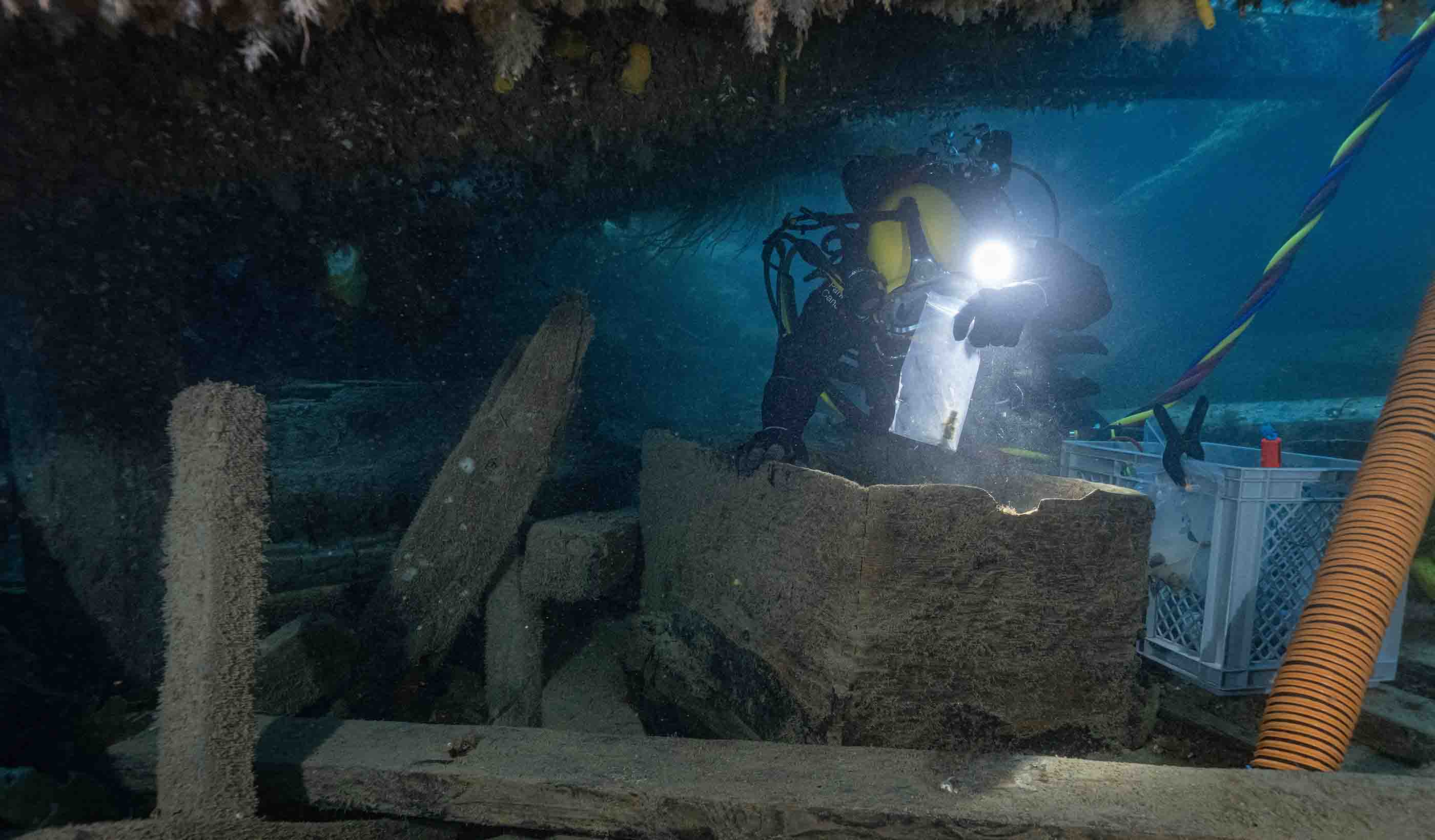

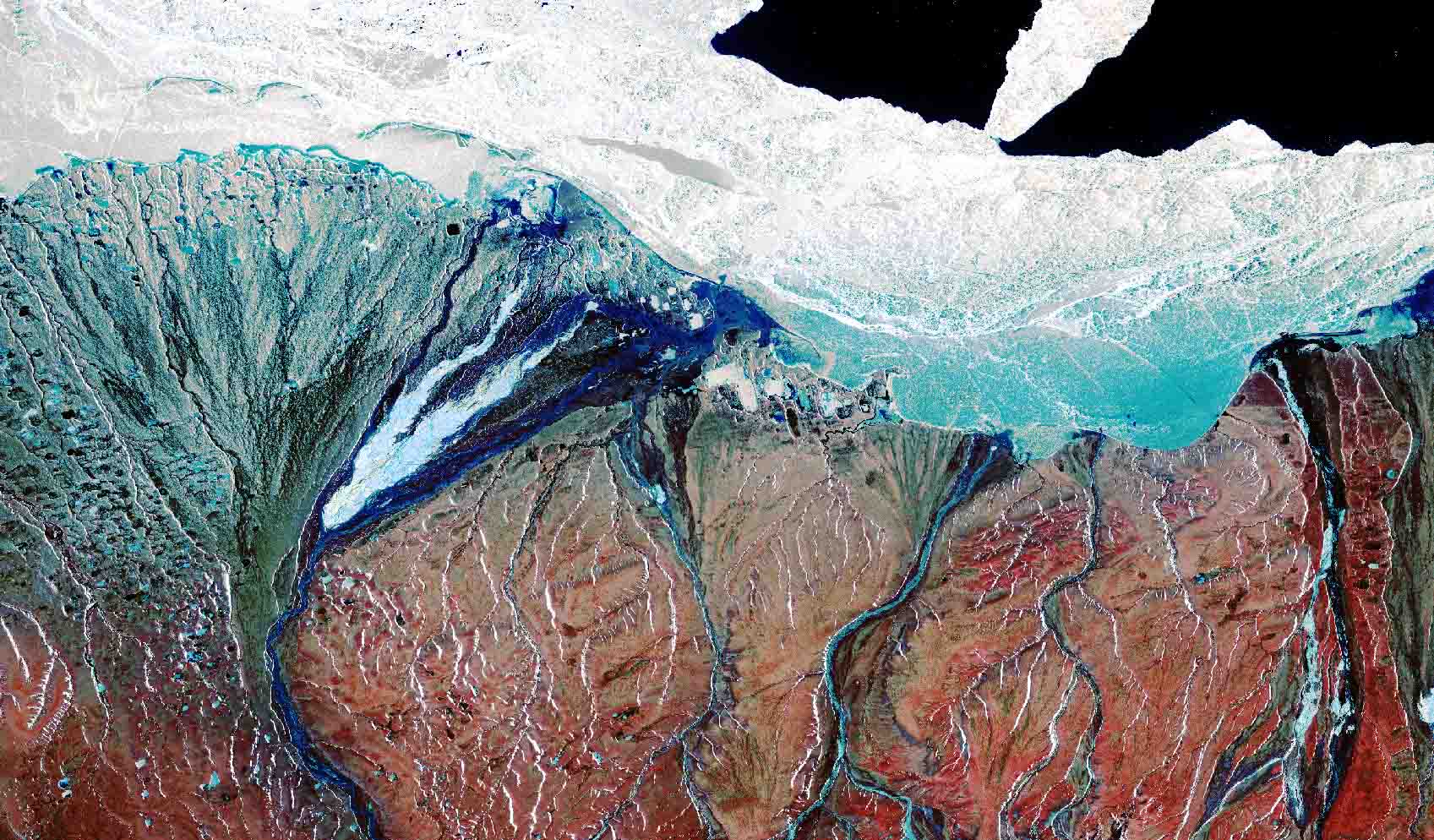

Published Article Franklin Expedition shipwrecks in the Arctic face a new threat—melting ice

-

Video Innovation Insights: How does innovation impact the AEC industry?

-

Blog Post Could ‘smart’ building facades heat and cool buildings?

-

Published Article Towering transformation: Yale revamp of historic structure embraces green design

-

Blog Post Sustainable mining finances: Exploring costs and liabilities

-

Blog Post What is a comprehensive plan? 6 steps to help your city

-

Published Article Sense of Community: Culturally responsive design fits buildings to place and purpose

-

Webinar Stantec Water Webinar Series – 2024 Presentation Schedule

-

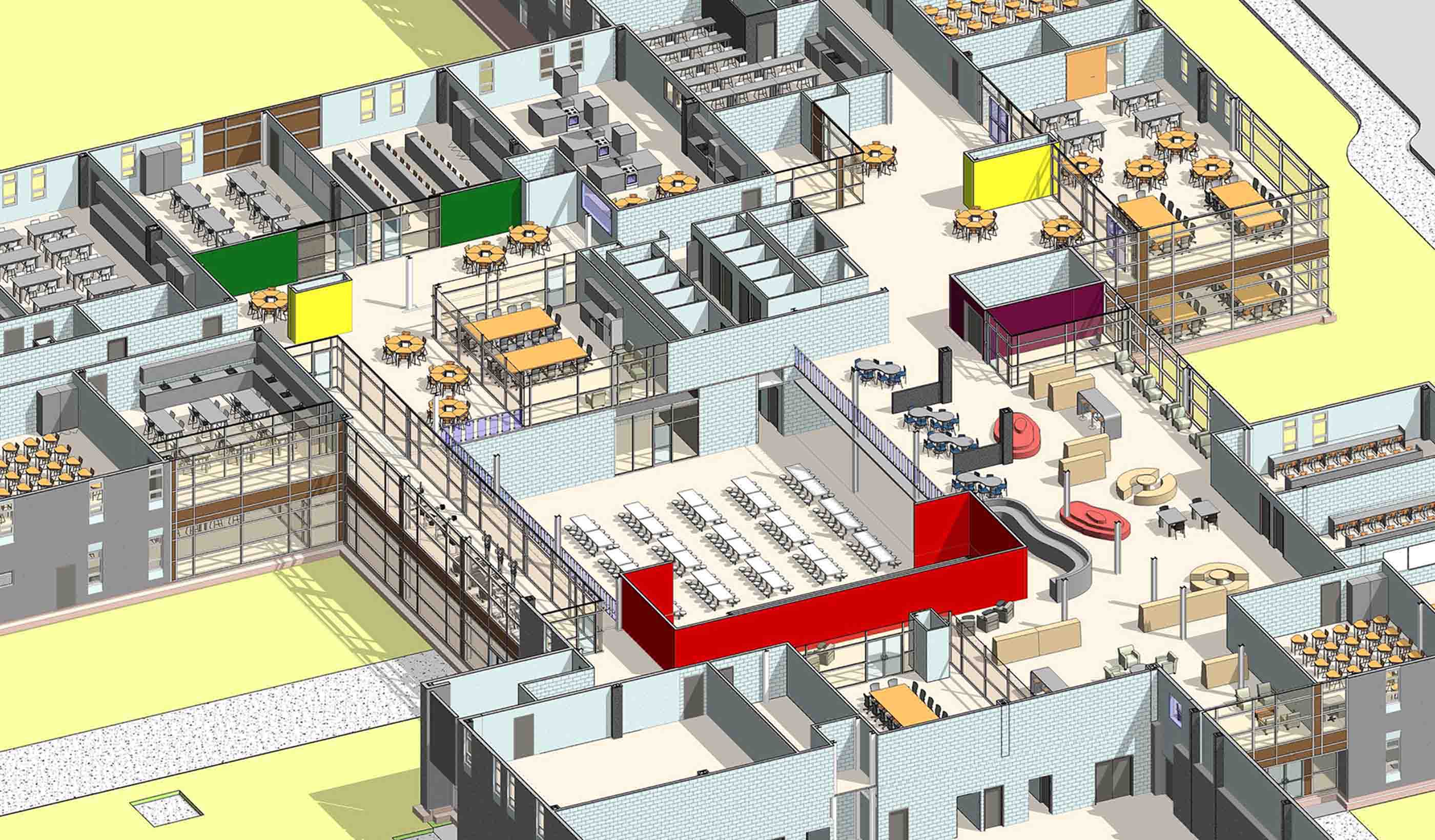

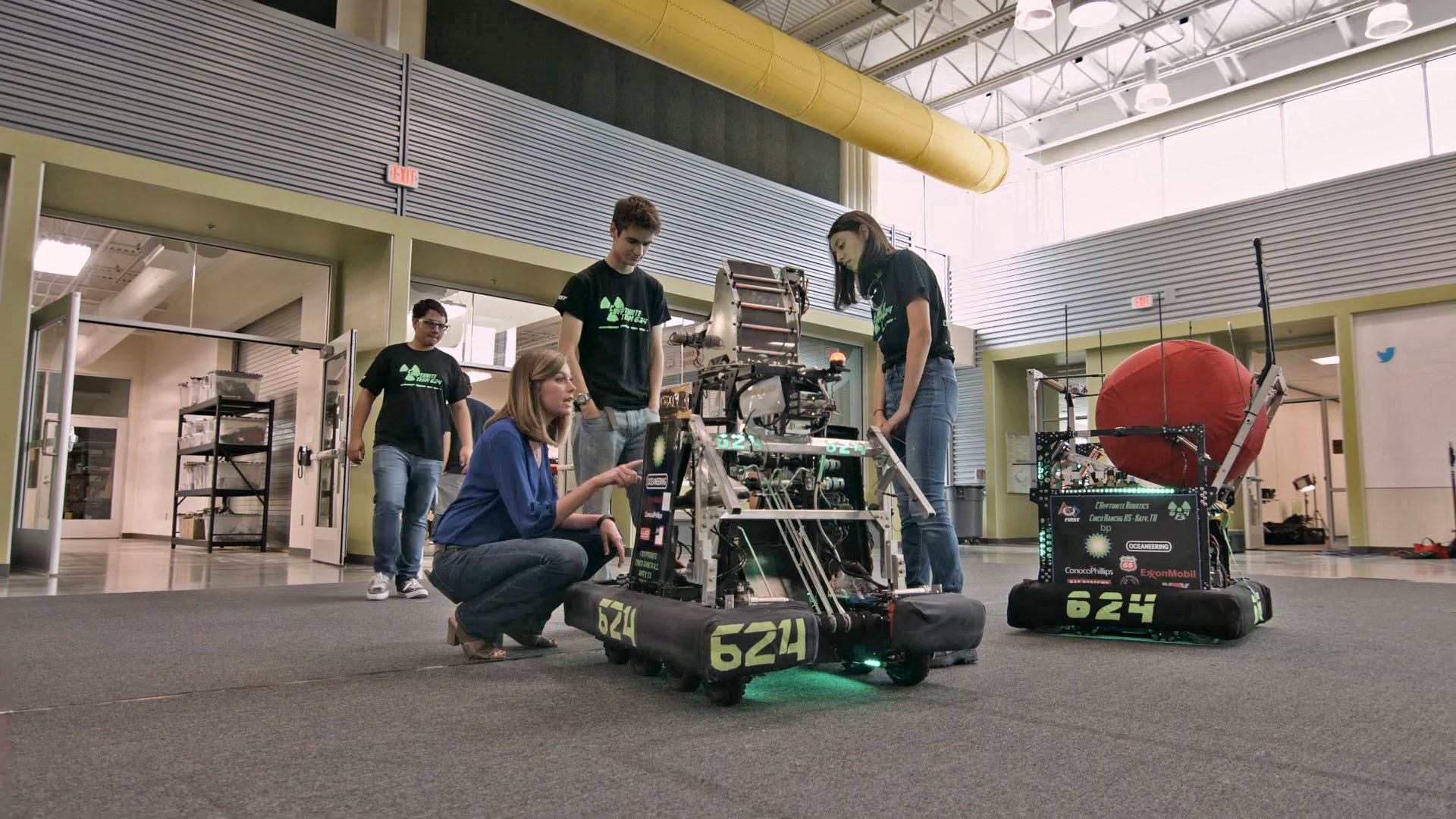

Video Behind the Scenes: Designing for Career and Technical Education

-

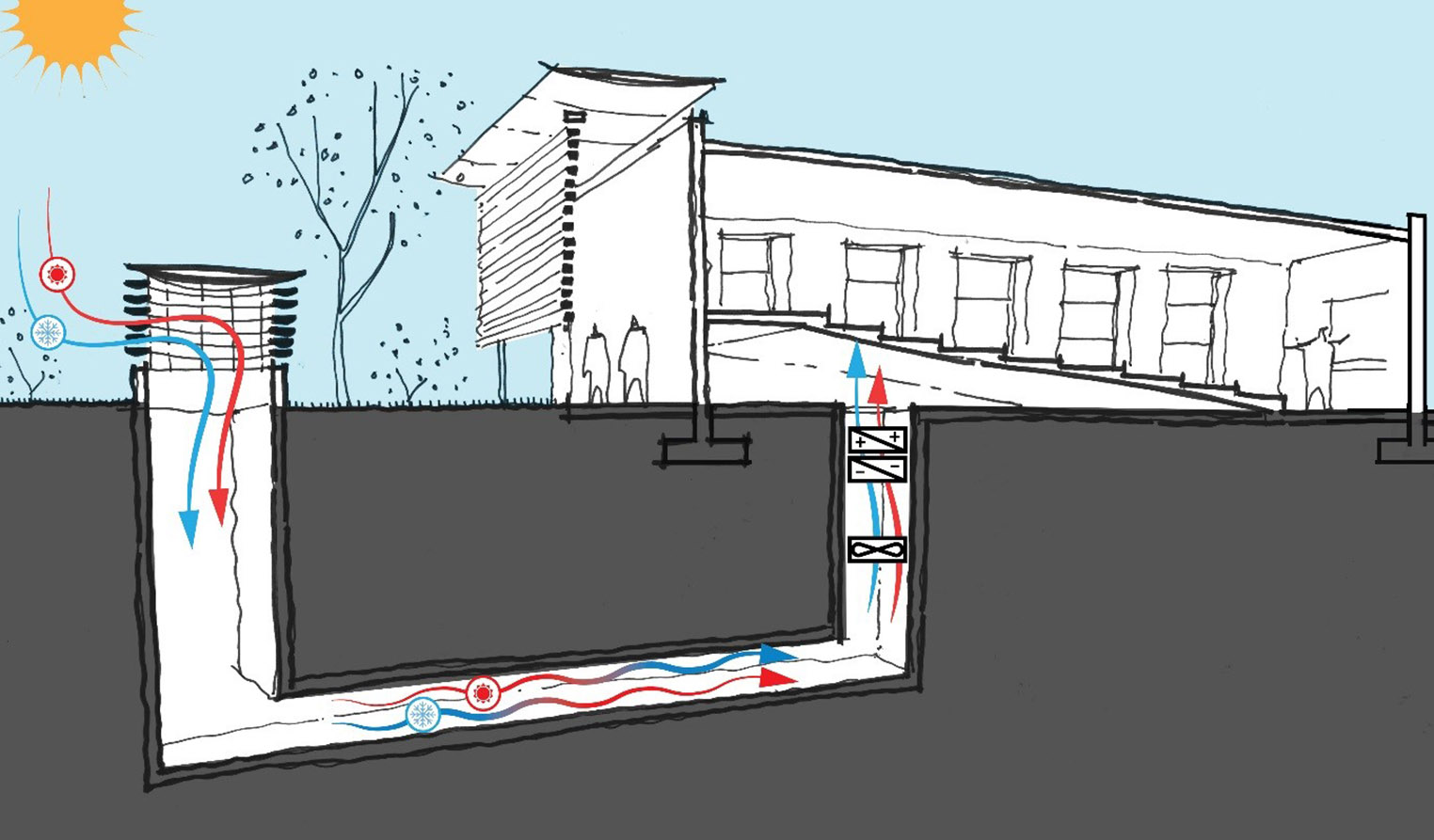

Blog Post Geoexchange system installation: 6 steps that prioritize safety and efficiency

-

Published Article What’s the deal with efficiency?

-

Blog Post 3 ways digital technology is pushing a revolution in the AEC industry

-

Webinar Recording Prepare for the Unexpected: Don’t let a flood put you under water

-

Publication Inside SCOPE at COP28

-

Blog Post The next destination: Passive design airports

-

Podcast Stantec.io Podcast: The Growth and Innovation Episode

-

Blog Post How do we secure the water supply needed for hydrogen production?

-

Video Raising dams to provide water security

-

Podcast Key water megatrends with Arthur Umble

-

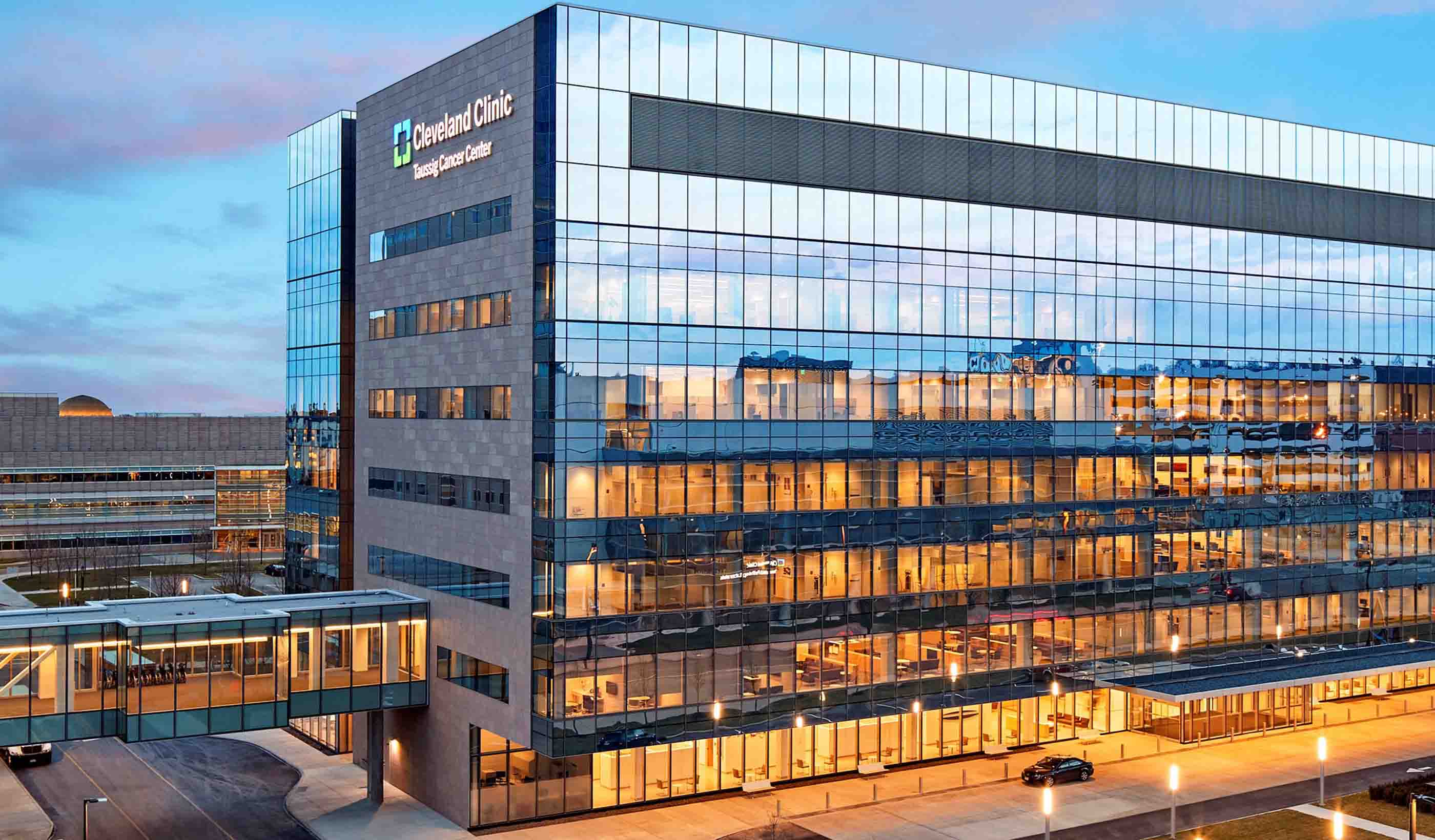

Blog Post Why decarbonizing hospitals smartly is better than electrification for healthcare design

-

Video Bringing a safe, reliable drinking water system back to Jackson, Mississippi

-

Blog Post Stream restoration requires working the bugs into your project

-

Blog Post Shrinking space: Post-pandemic law firm offices are smaller and more communal

-

Blog Post How green stormwater infrastructure can help cities manage intense rainfall events

-

Blog Post Building decarbonization strategy: It starts before electrification

-

Published Article Is your smart building an easy target for hackers?

-

Published Article Pumped storage in the USA: A story of IPPs, PPAs, and regulated utilities

-

Published Article The devastation of debris flows and the difficulty of predicting them

-

Published Article Optimizing complex HDD design and overcoming subsurface challenges

-

Blog Post Stantec’s Top 10 Ideas from 2023

-

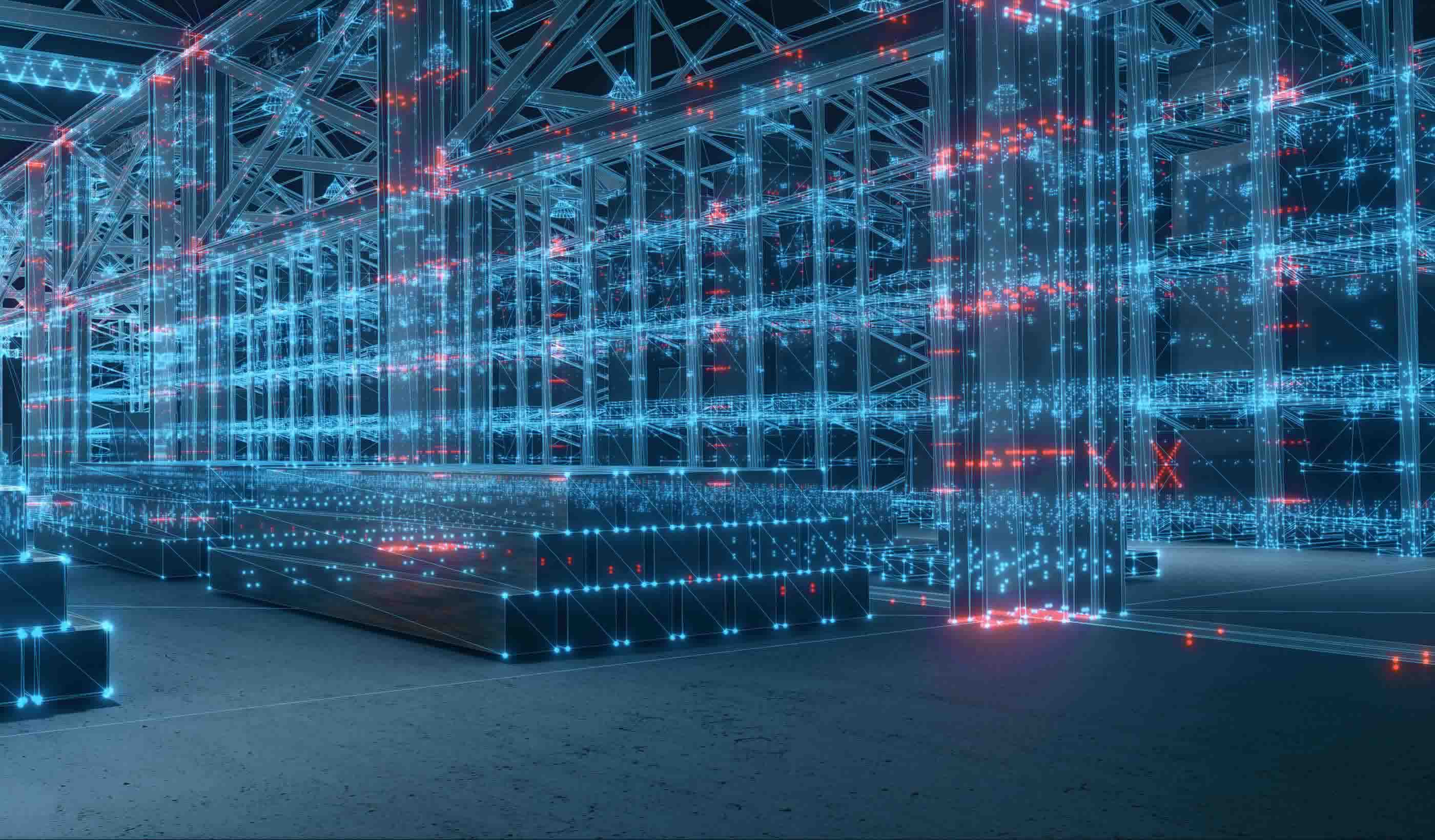

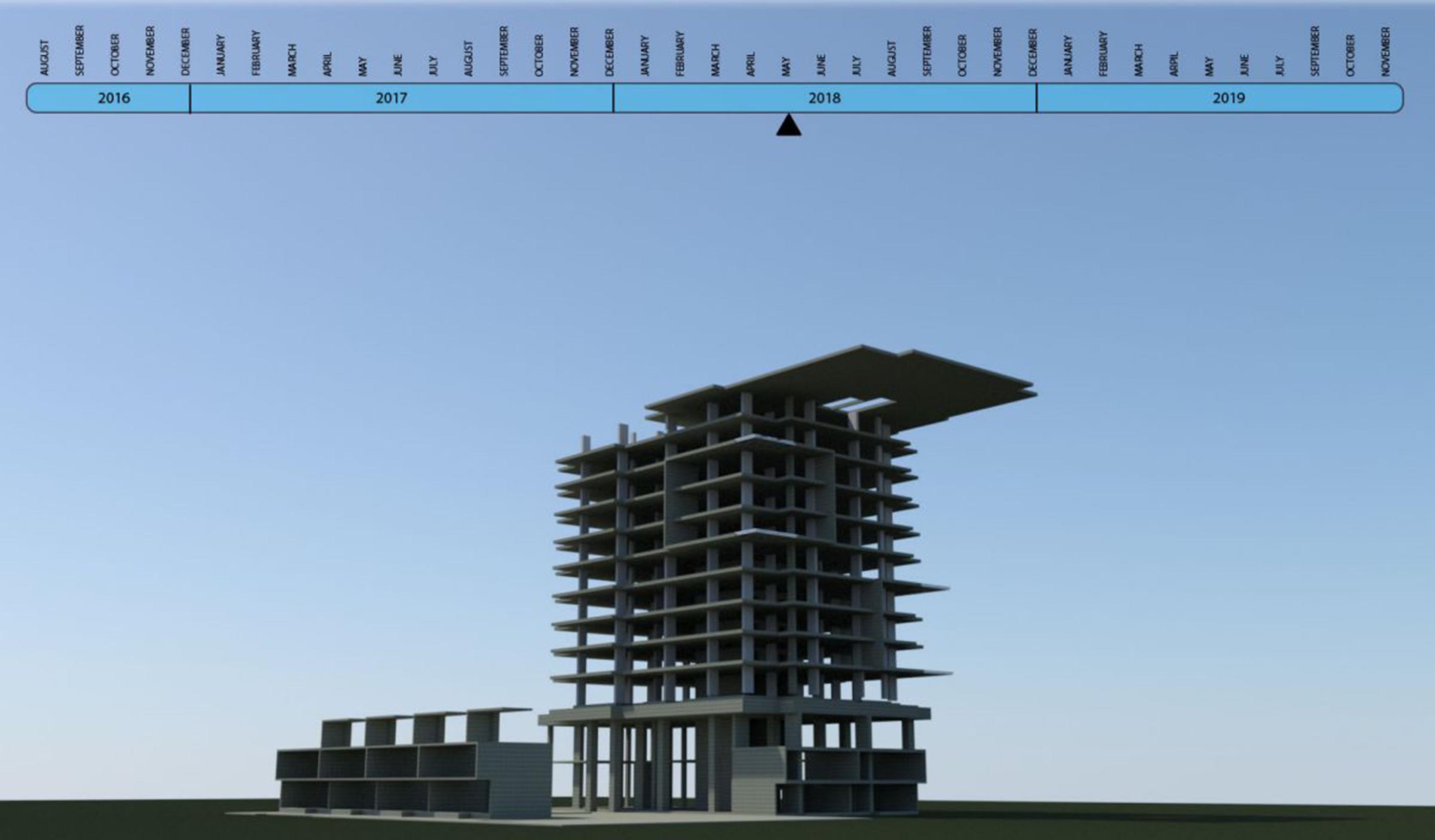

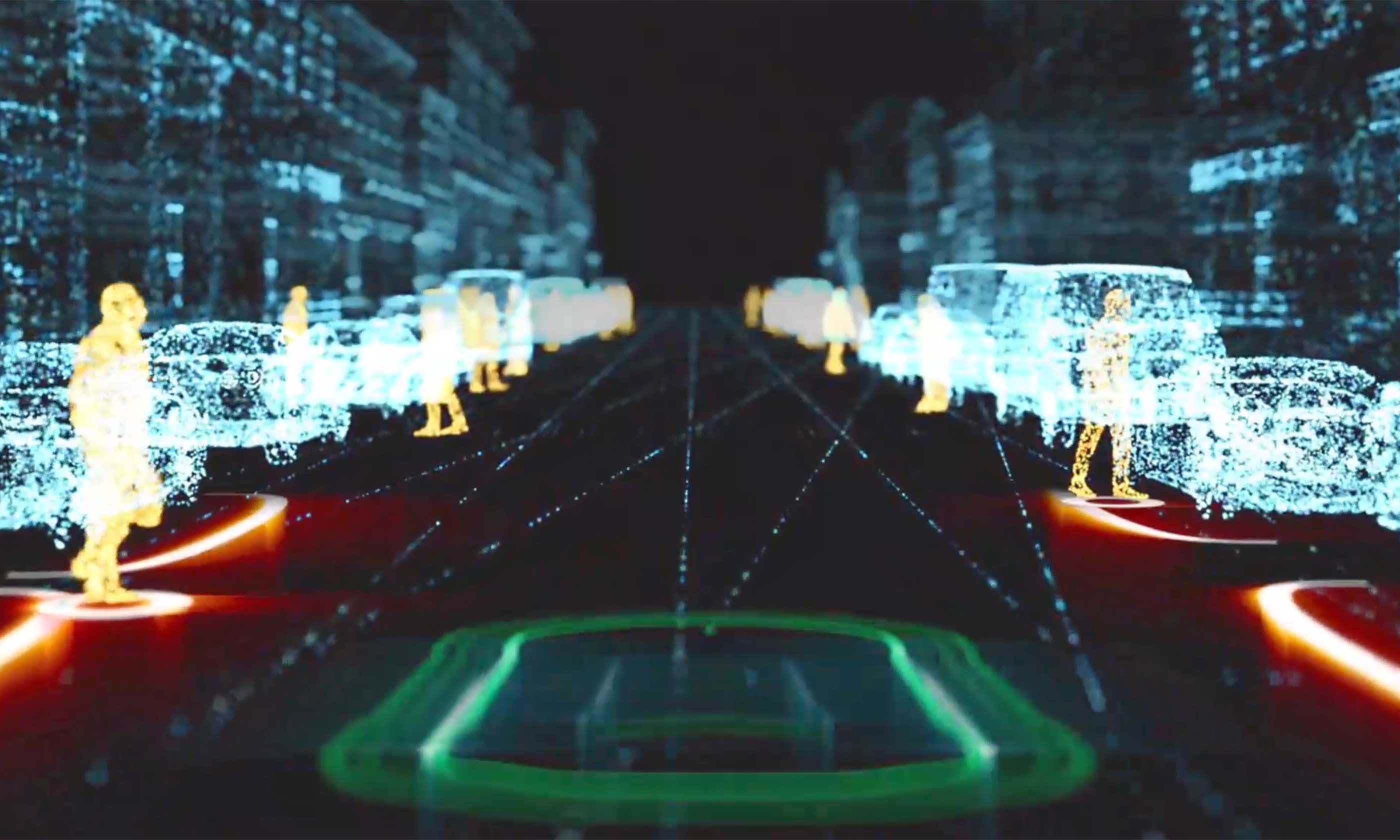

Published Article Public agencies test digital twin tool for improved asset monitoring

-

Blog Post 3 steps to achieve One Water in the real world

-

Whitepaper Supporting healthier and more resilient communities through investments in mobility

-

Blog Post Does your building need a life cycle assessment?

-

Podcast Stantec.io Podcast: The Rail Episode

-

Report Showcasing success with Nature-based Solutions at COP28

-

Published Article The high stakes game

-

Published Article Stantec on mining’s quest for healthy closure

-

Blog Post Flood risk modeling using machine learning helps protect communities. Here’s how.

-

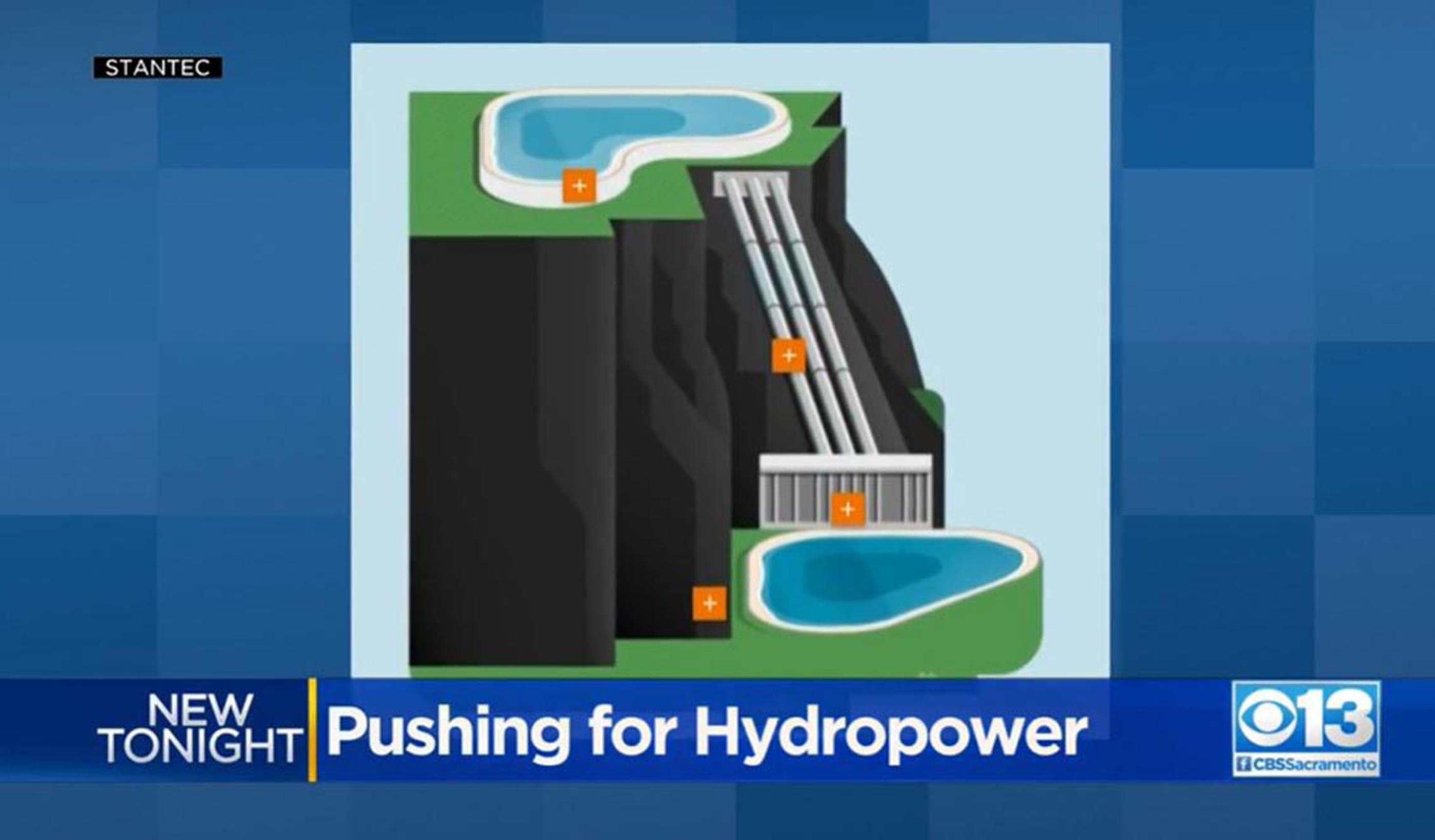

Blog Post Pumped storage hydropower and the Inflation Reduction Act are a renewable powerhouse

-

Published Article Sounding off on open office plans and workplace acoustics

-

Blog Post Low-carbon building materials: Designers discuss alternative options

-

Video How investing in downtowns fosters community and economic growth

-

Published Article Pumped storage hydropower: Helping to drive the energy transition

-

Published Article Turquoise hydrogen producers could capture flourishing graphite market

-

Blog Post Dam removal: An engineer returns to restore a Connecticut river of his childhood

-

Whitepaper Adapting flood prediction for the climate crisis era

-

Publication Design Quarterly Issue 20 | RETHINK

-

Blog Post 5 questions to ask before you write a decarbonization RFP

-

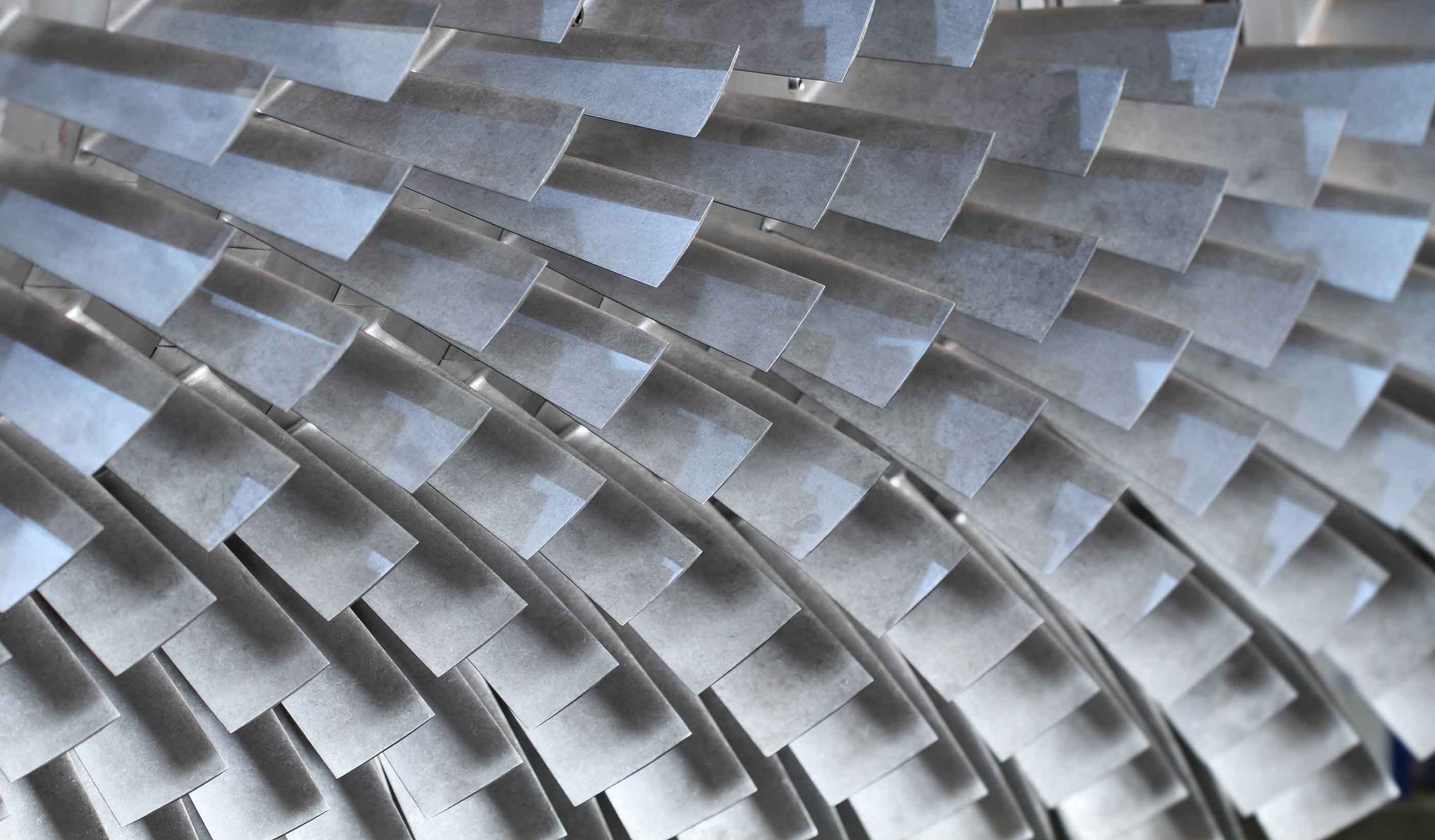

Published Article Applying the 3Rs to mine ventilation

-

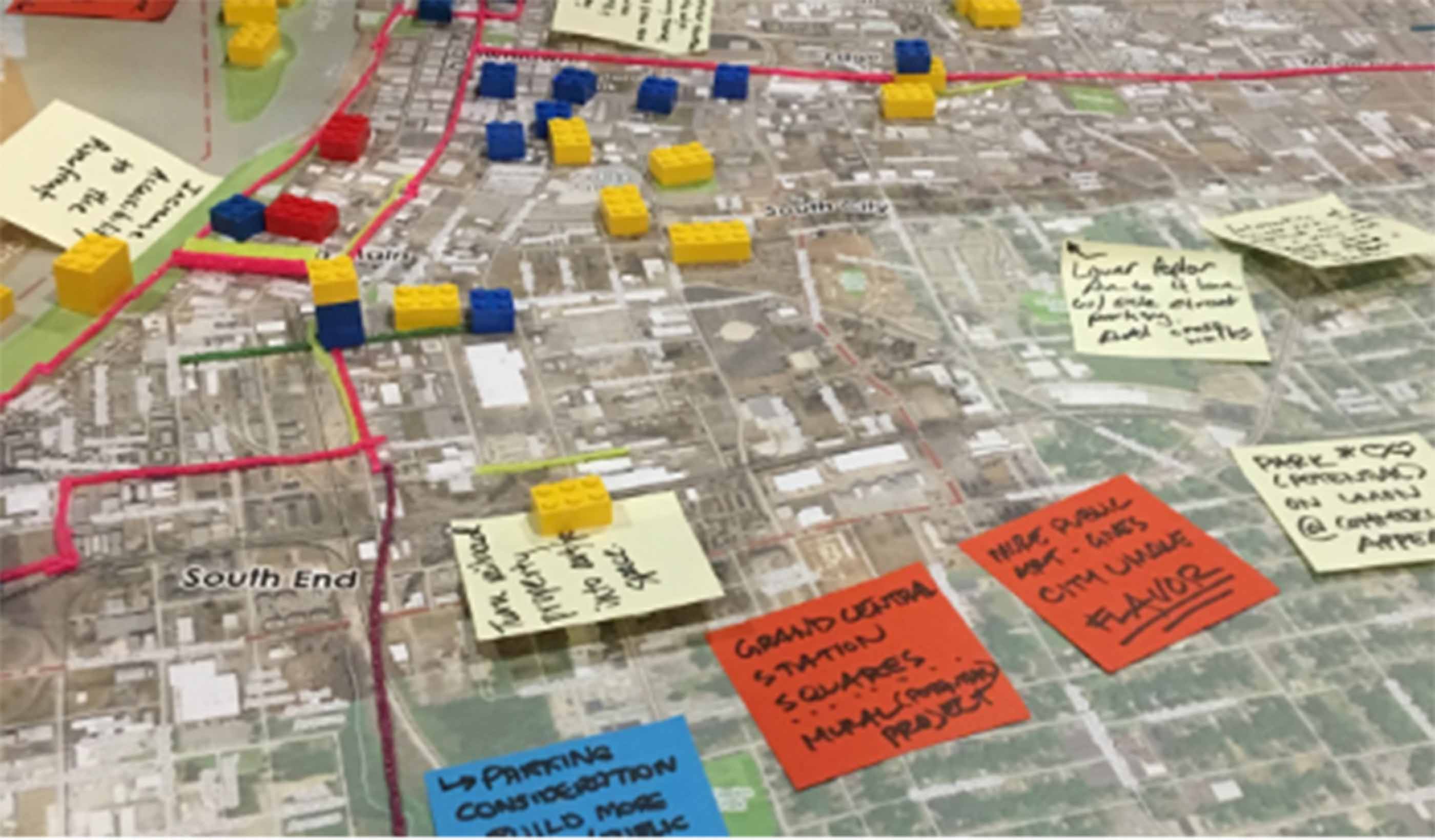

Report Catalyzing Calgary’s Downtown West

-

Published Article Protecting cultural artifacts: Archaeology’s role in building projects

-

Webinar Recording Design and development of large diameter water tunnels

-

Blog Post Mining decarbonization is key to creating clean energy

-

Blog Post New ASHRAE standards tip the balance toward net zero buildings

-

Webinar Recording Run your models faster and get results

-

Published Article Got yourself a deal? M&A lessons from Stantec

-

Blog Post Water for all: How to move past 3 big stressors

-

Video Restoring the coastal resilience of a unique Great Lakes ecosystem

-

Published Article Financing boom supercharges Superfund

-

Published Article Designing for school safety through CPTED and biophilic concepts

-

Video Miami design team sets the table for success

-

Webinar Recording Mall of the Future Webinar Series

-

Blog Post How to create a lead service line inventory through data

-

Video Helping meet tomorrow’s water needs in Colorado

-

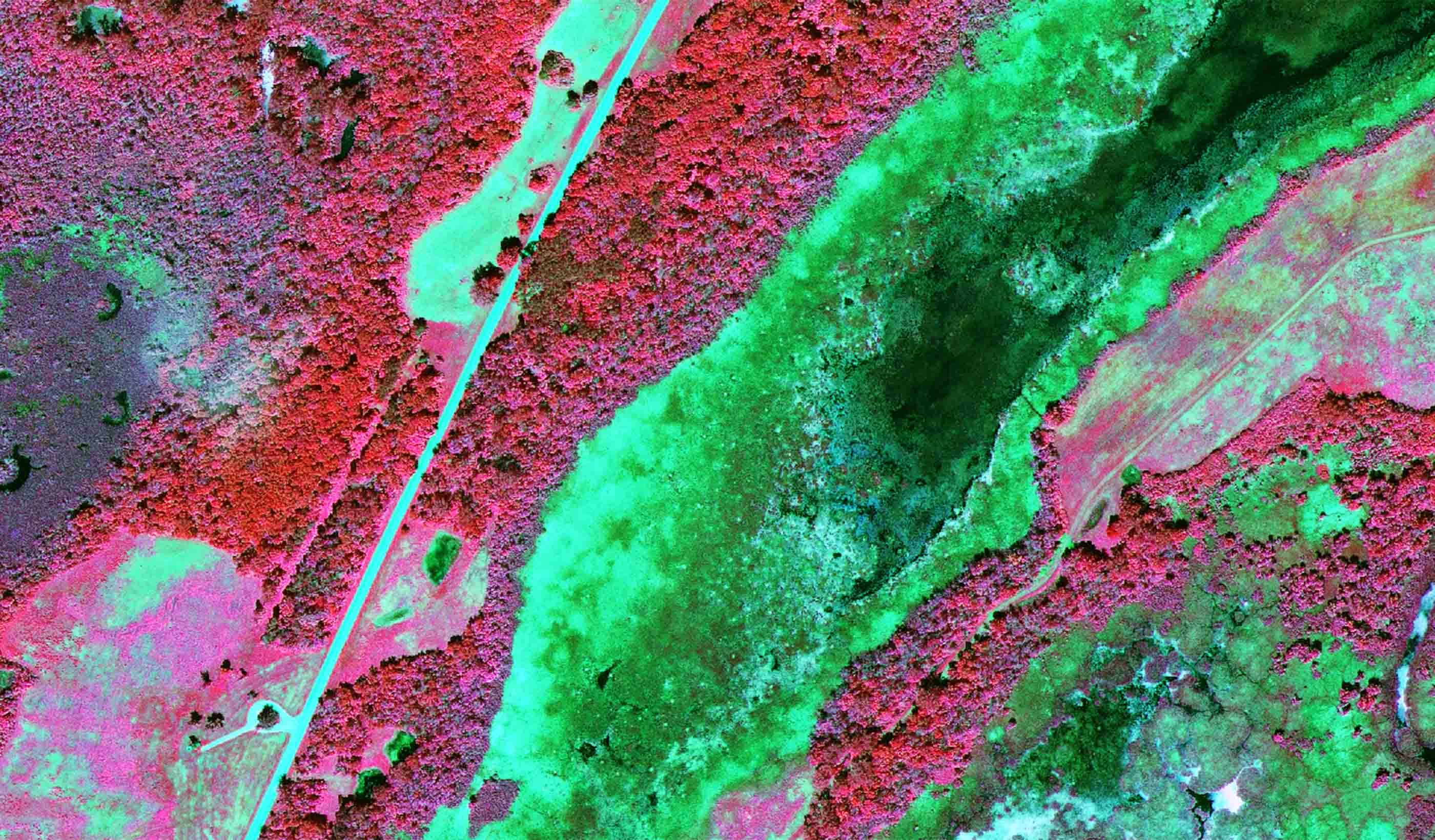

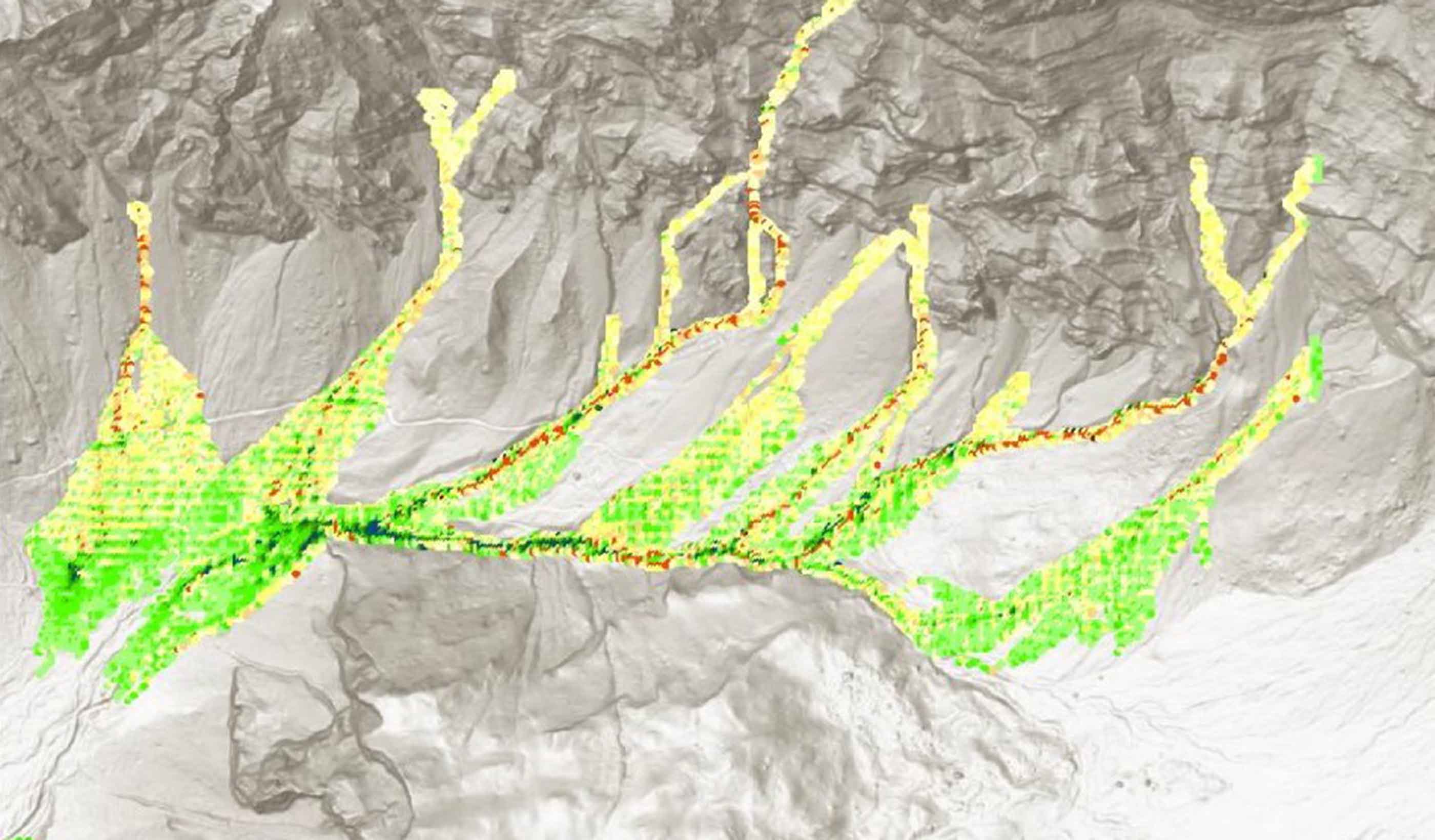

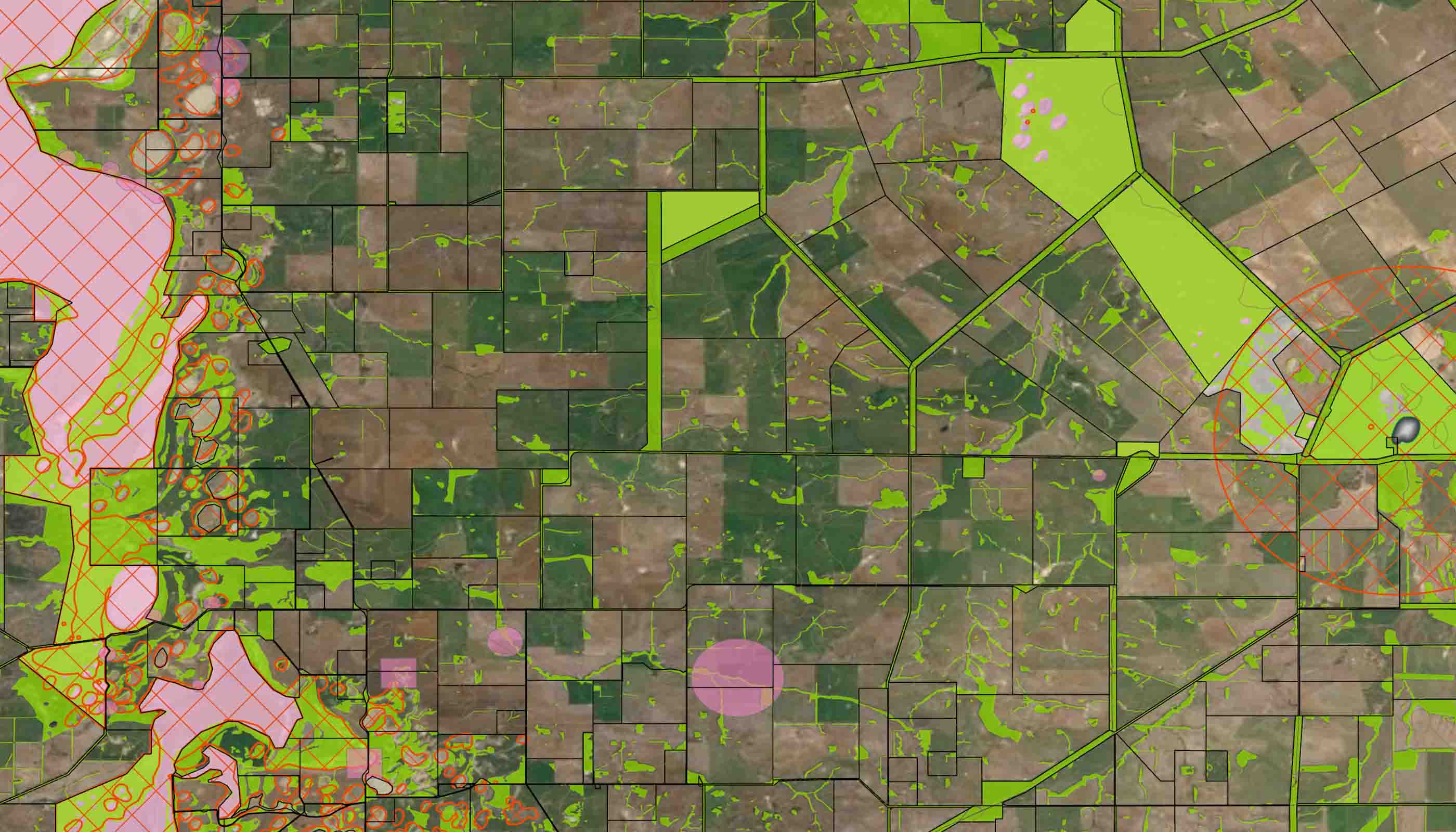

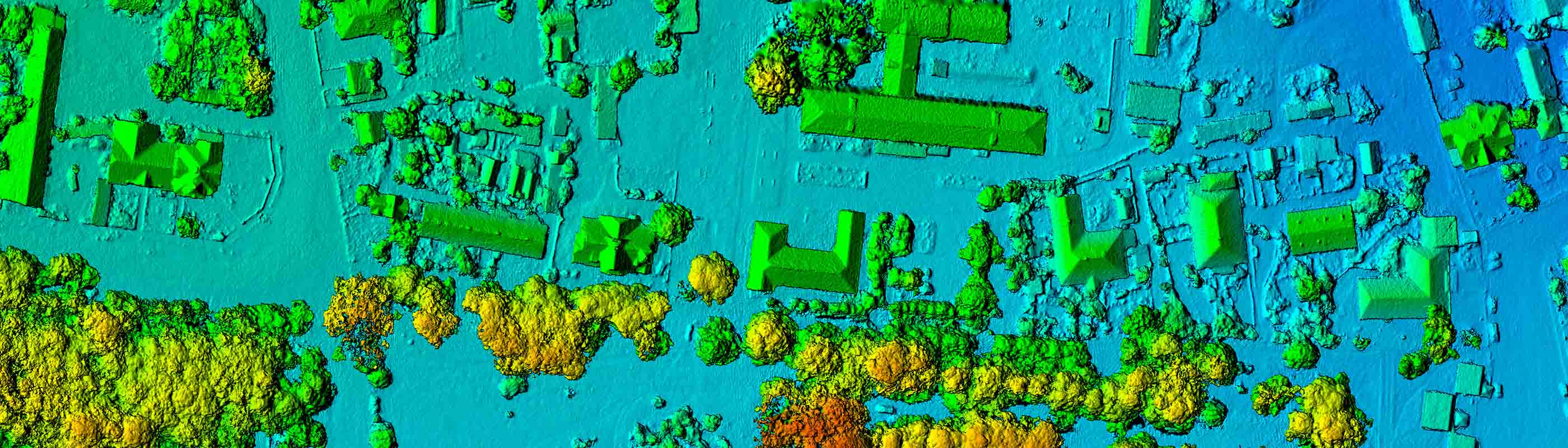

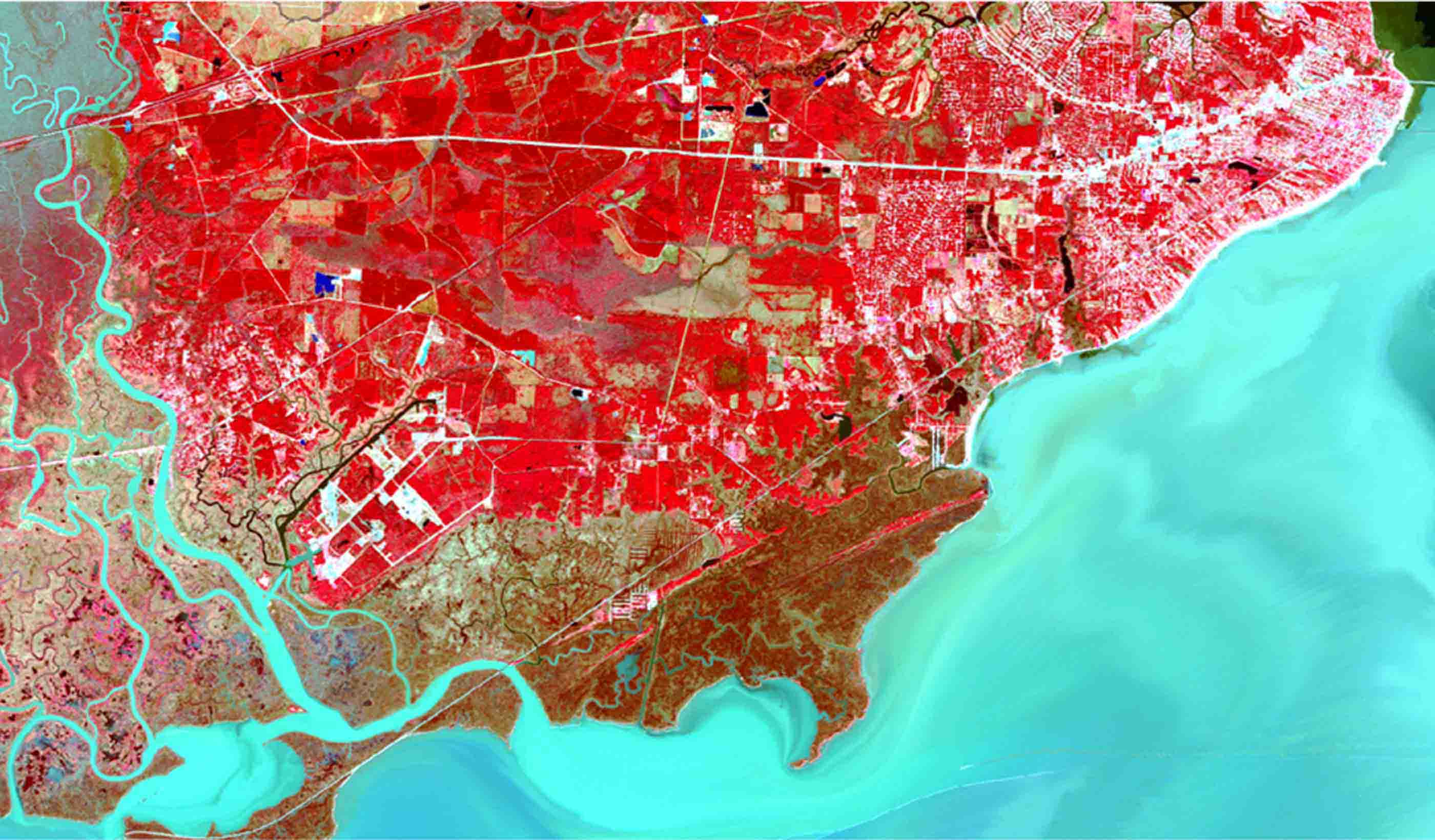

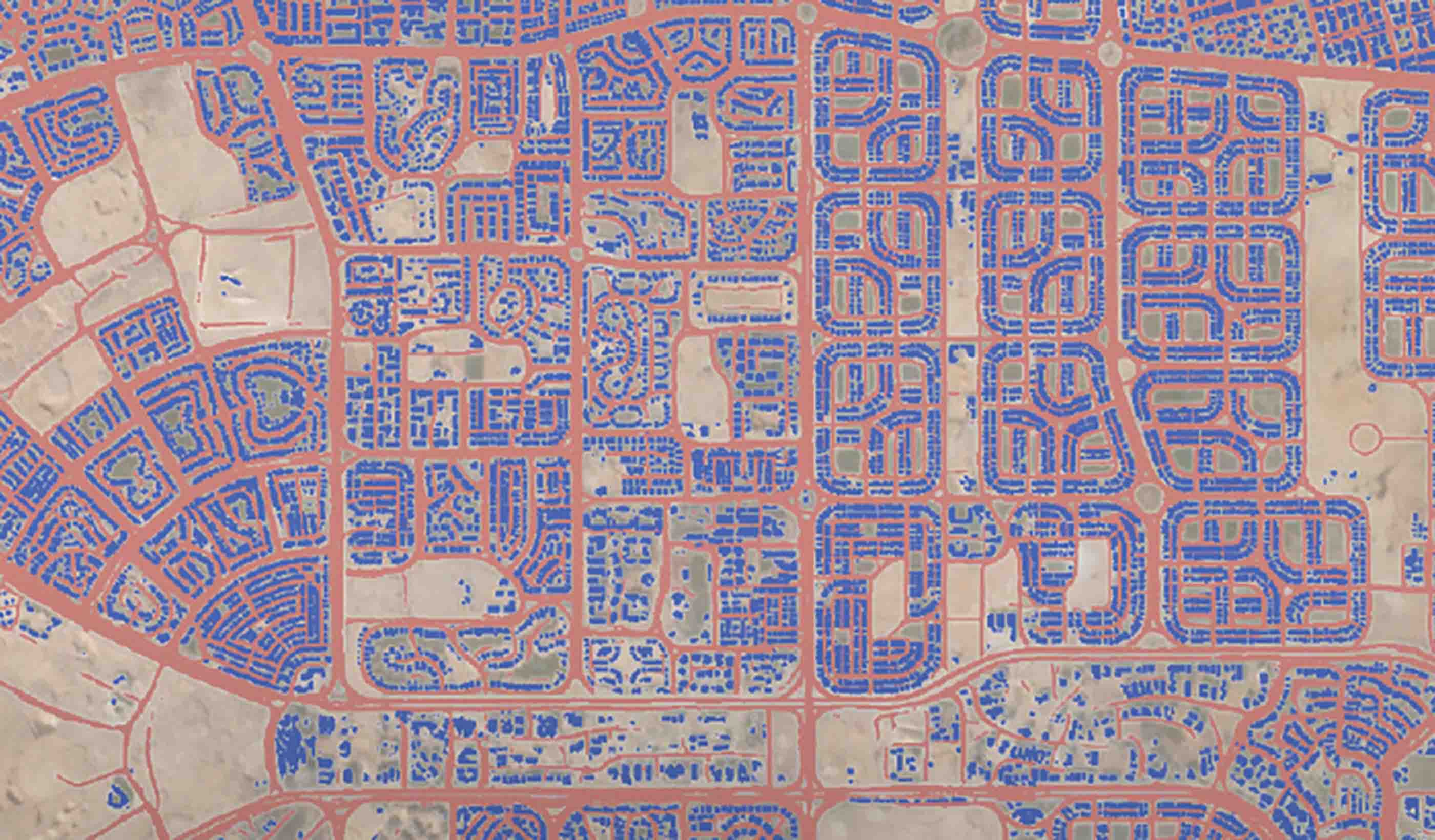

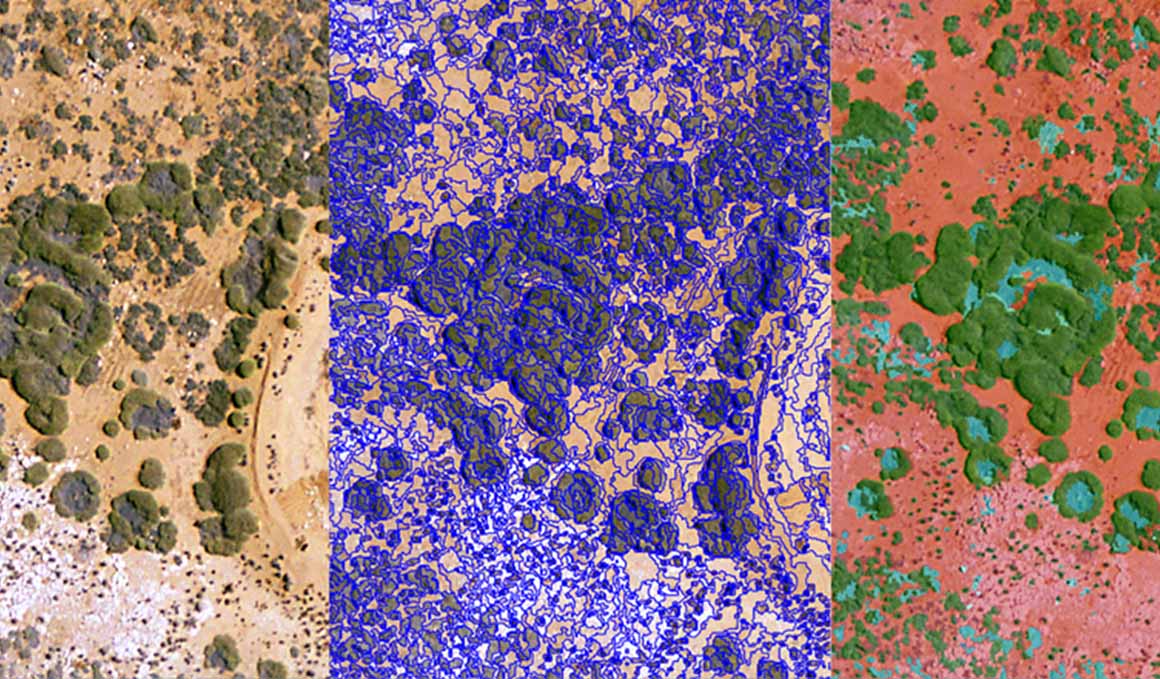

Blog Post Soil carbon models: How remote sensing can measure the carbon stored in your soil

-

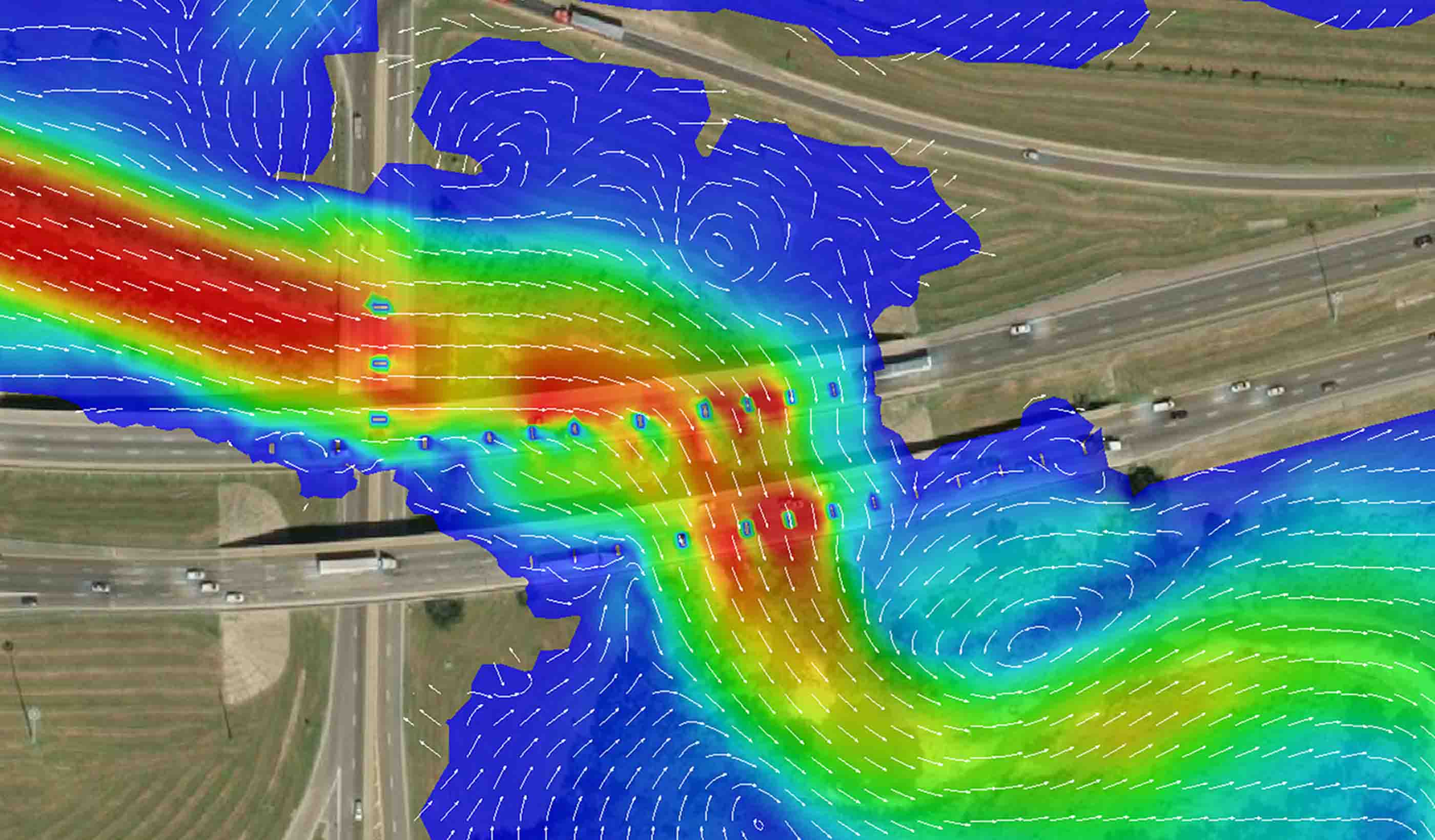

Published Article Hydraulic modeling approaches in drainage design and water resources engineering

-

Published Article Don’t 'debug' the selenium treatment system

-

Blog Post How does the 2020 National Building Code impact seismic design in Canada?

-

Blog Post Conceptual design: How a new digital tool helps buildings engineers work better

-

Podcast Talking Transit-Oriented Development with Sisto Martello

-

Video The Meaning of Design

-

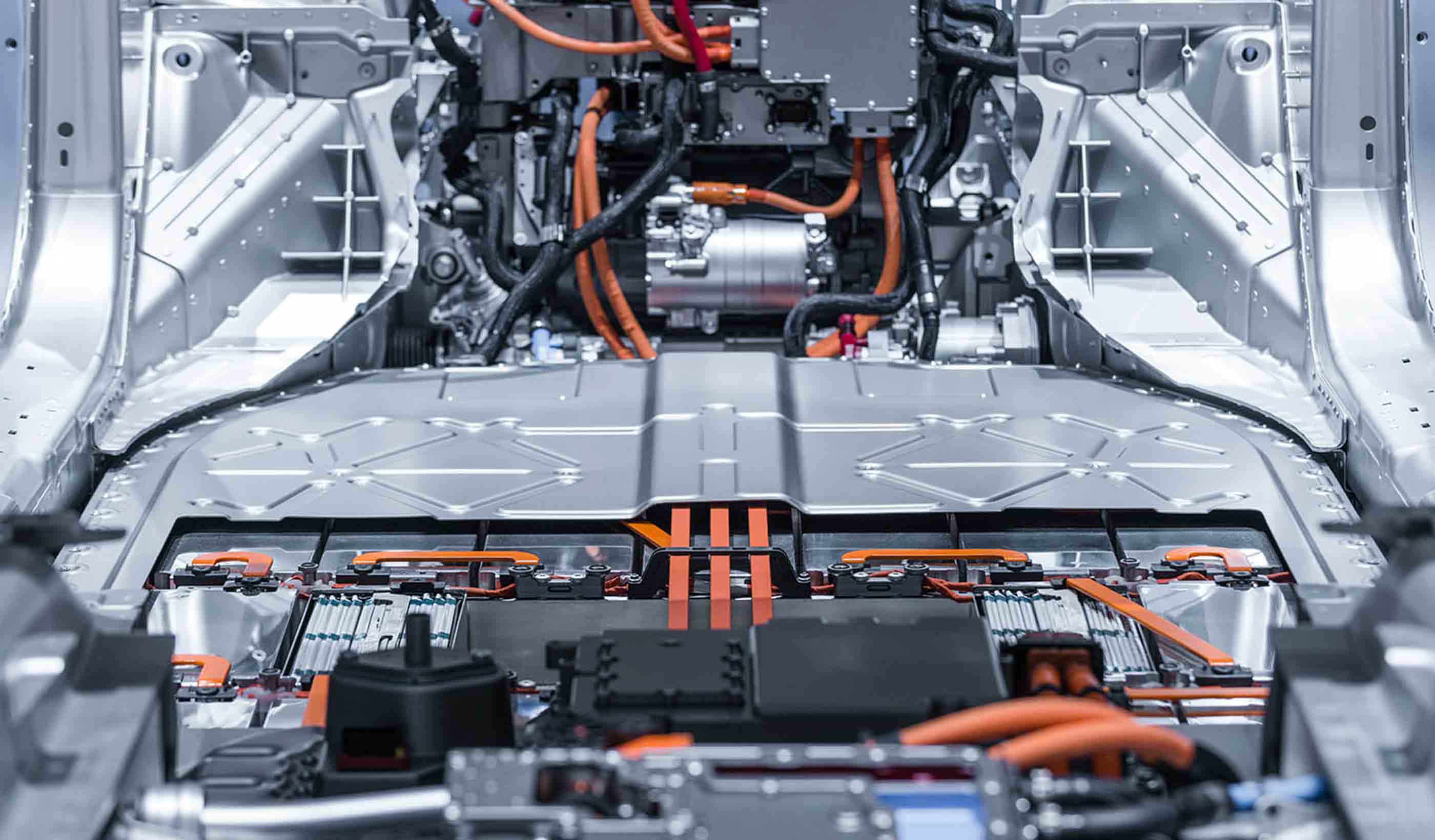

Blog Post Design approaches for the next generation of EV battery manufacturing

-

Blog Post 4 questions a wastewater utility should ask before expanding

-

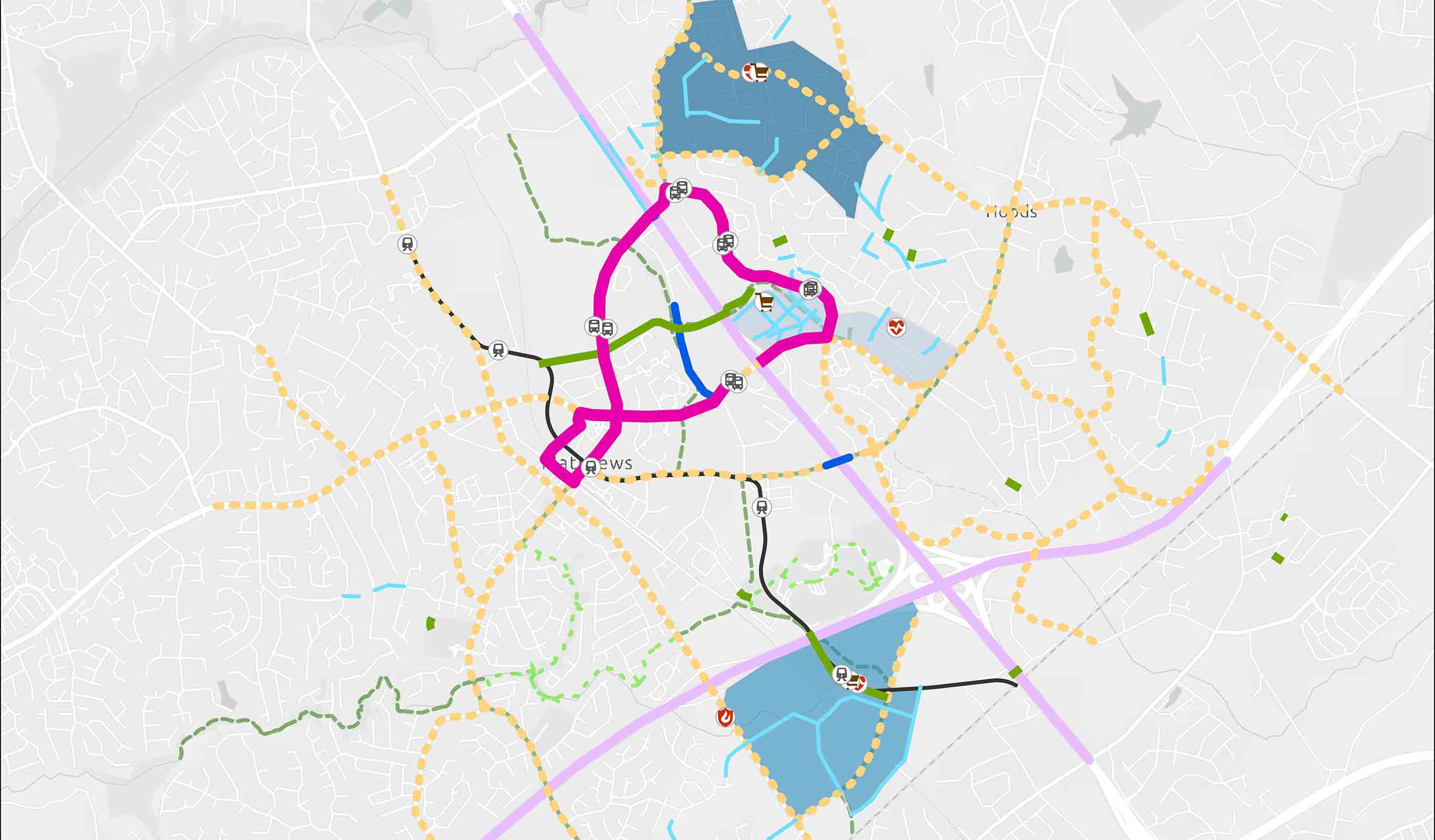

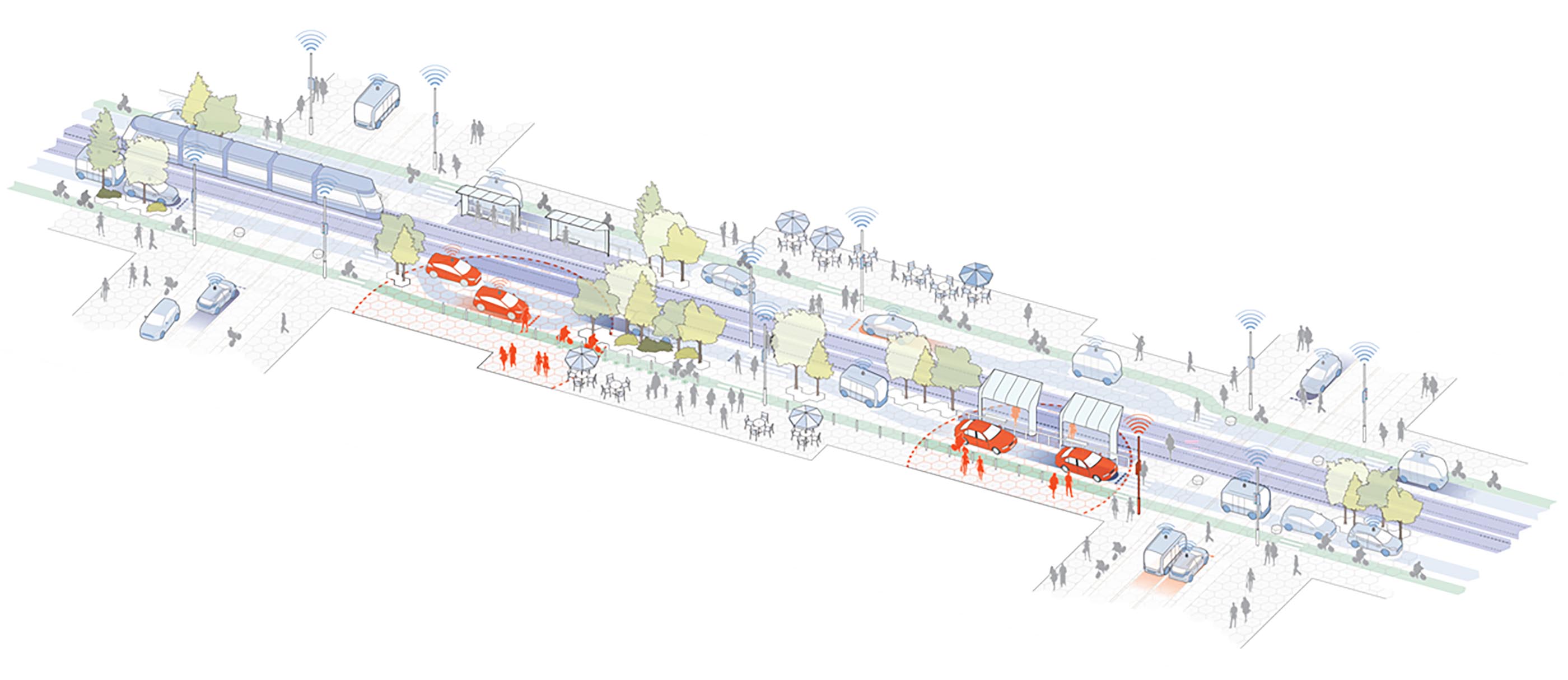

Blog Post Mobility hubs are the key to unlocking corridor development potential

-

Blog Post Dam safety: How technology can help dam owners and operators overcome 3 challenges

-

Blog Post How seahorse hotels protect endangered species in Australia

-

Blog Post What role do we play in decarbonization?

-

Blog Post Carbon capture methods: How can we capture and remove carbon from our atmosphere?

-

Blog Post 100% renewable energy: Making that commitment count

-

Podcast Stantec.io Podcast: The Dam Episode

-

Published Article Sustainably and quickly getting critical minerals to market

-

Blog Post So, you’ve captured carbon effectively. Now what?

-

Blog Post Embodied carbon: Mining to decarbonize buildings

-

Blog Post Reusing water while capturing carbon

-

Published Article Confidence in conveyance

-

Blog Post Passive house design regulations: Here are 8 strategies for multifamily projects

-

Video What is Stantec.io?

-

Podcast Scientists discover dinosaur ‘coliseum’ in Alaska’s Denali National Park

-

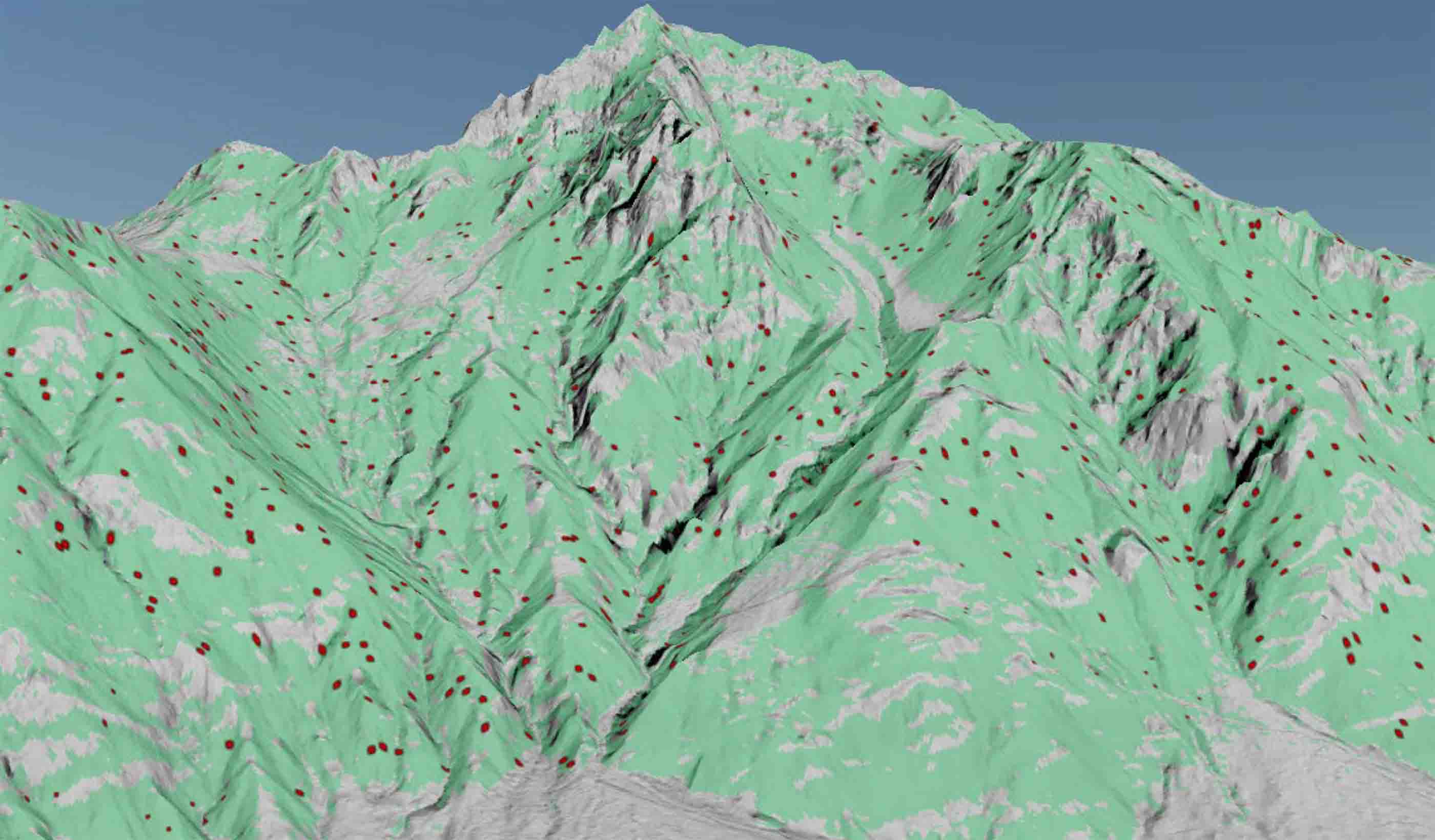

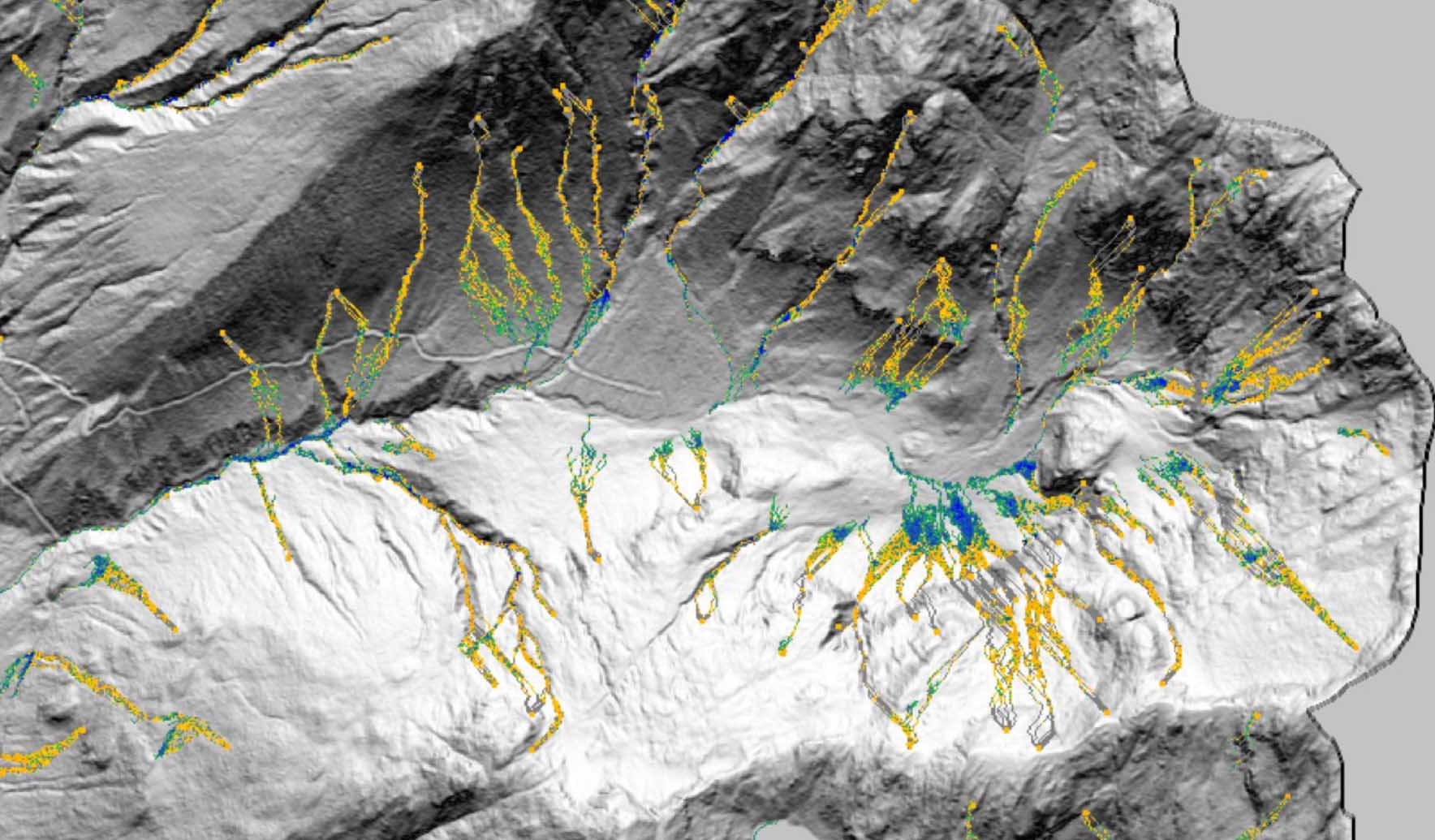

Technical Paper Post-wildfire debris flow and large woody debris transport modeling

-

Technical Paper Advancing debris flow hazard and risk assessments with modeling and rainfall intensity data

-

Published Article Communicating the difference between hazard and risk

-

Technical Paper What does landslide triggering rainfall mean?

-

Webinar Recording Mineral processing through a sustainability lens

-

Technical Paper Debris flow hazard mapping along linear infrastructure: An agent based mode and GIS approach

-

Podcast Stantec.io Podcast: Extreme Weather and Digital Solutions

-

Published Article Floating offshore wind power could be the key to reaching decarbonization targets

-

Blog Post ESG programs that focus on health help build climate resilience, create financial benefits

-

Webinar Recording New US federal incentives will drive wastewater renewables

-

Video Helping create the most sustainable tree nursery business park in Europe

-

Blog Post How do you create thriving, connected places? 5 ways to expand transit-oriented planning

-

Video The costs behind PFAS treatment for drinking water

-

Blog Post The future of design and AI in architecture

-

Video Workplace Reboot: A workspace that reflects a commitment to sustainability, health, and well-being

-

Blog Post Robots in the workplace: This one scans for indoor air quality and more

-

Webinar Recording Optimizing blended water sources to prevent metal corrosion

-

Published Article Nature-based solutions: Crucial innovations for a resilient, sustainable future

-

Blog Post Solving for H: 4 challenges hydrogen experts are working to resolve

-

Published Article Addressing climate change

-

Design Quarterly Issue 19 | Decarbonization

-

Published Article Destination Maintenance

-

Published Article Developments in custody transfer metering of natural gas

-

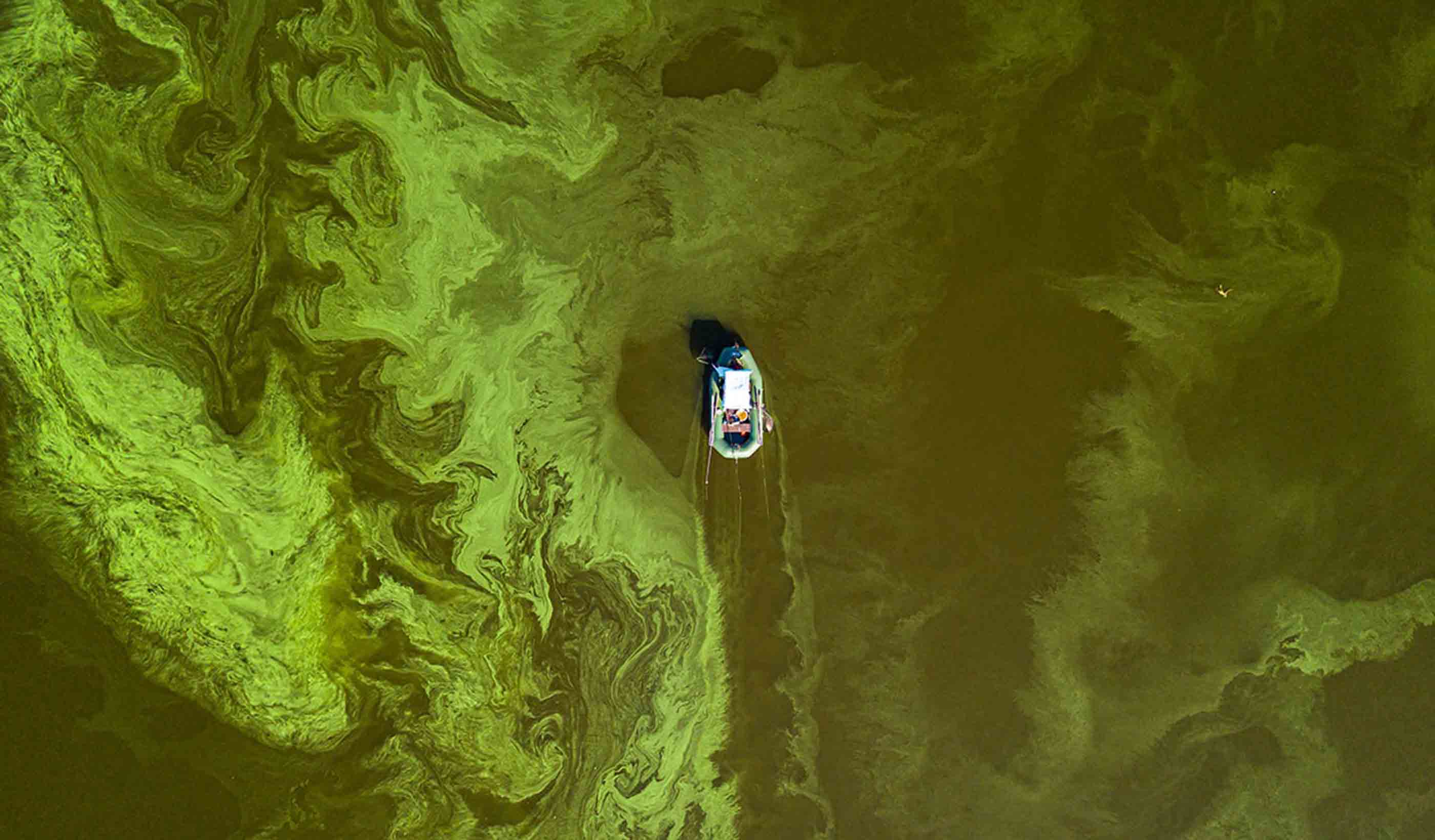

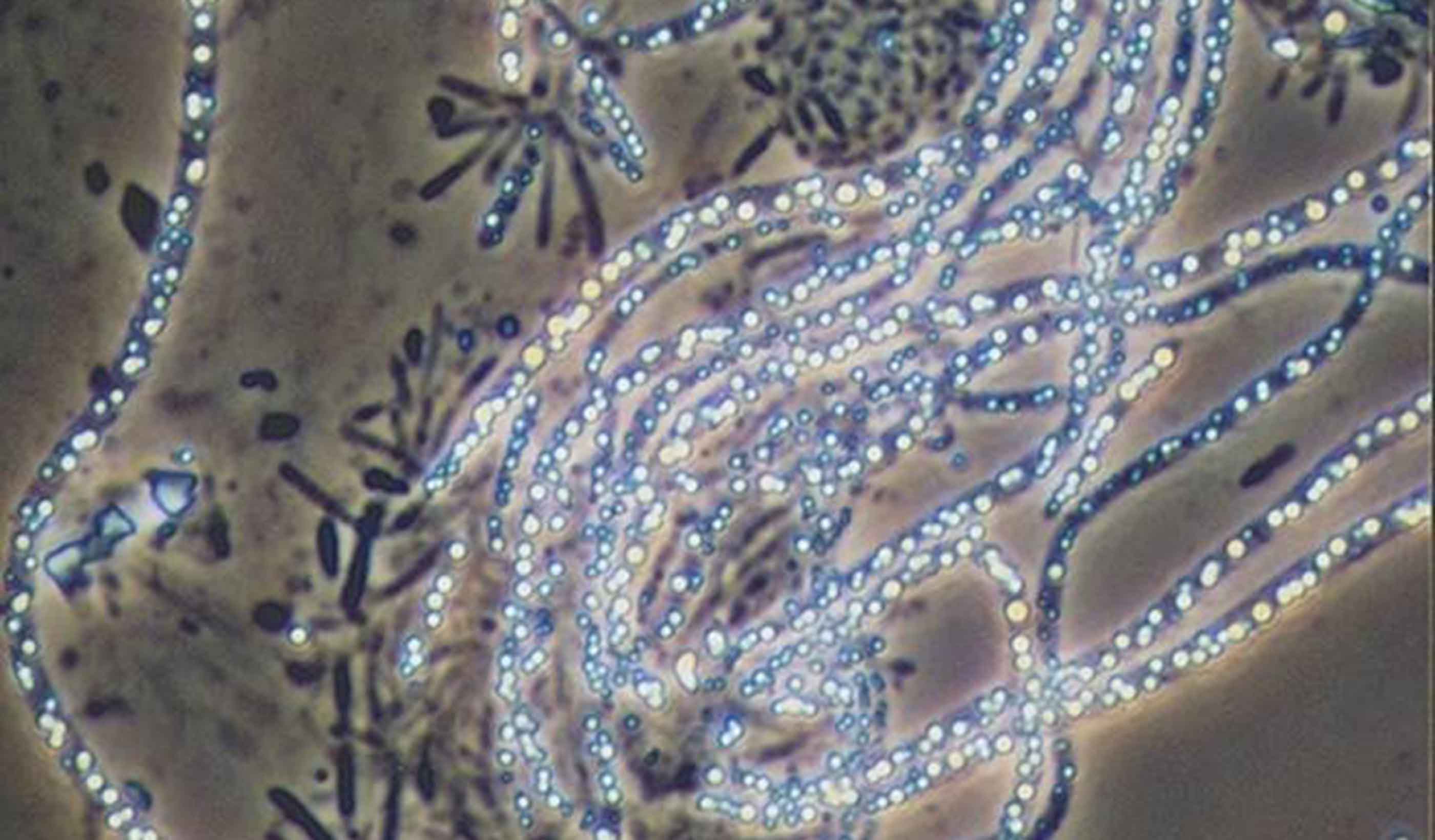

Technical Paper Blind spots in CyanoHAB monitoring

-

Podcast How Stantec is using XR to propel innovation

-

Video Mitigating flooding impacts through stream restoration

-

Video A better way to move people and goods at airports? Autonomous vehicles

-

Video How can design better prepare future healthcare professionals?

-

Blog Post PFAS in drinking water: What utilities need to know about contaminants in water

-

Blog Post Advanced manufacturing facilities are key to a sustainable future

-

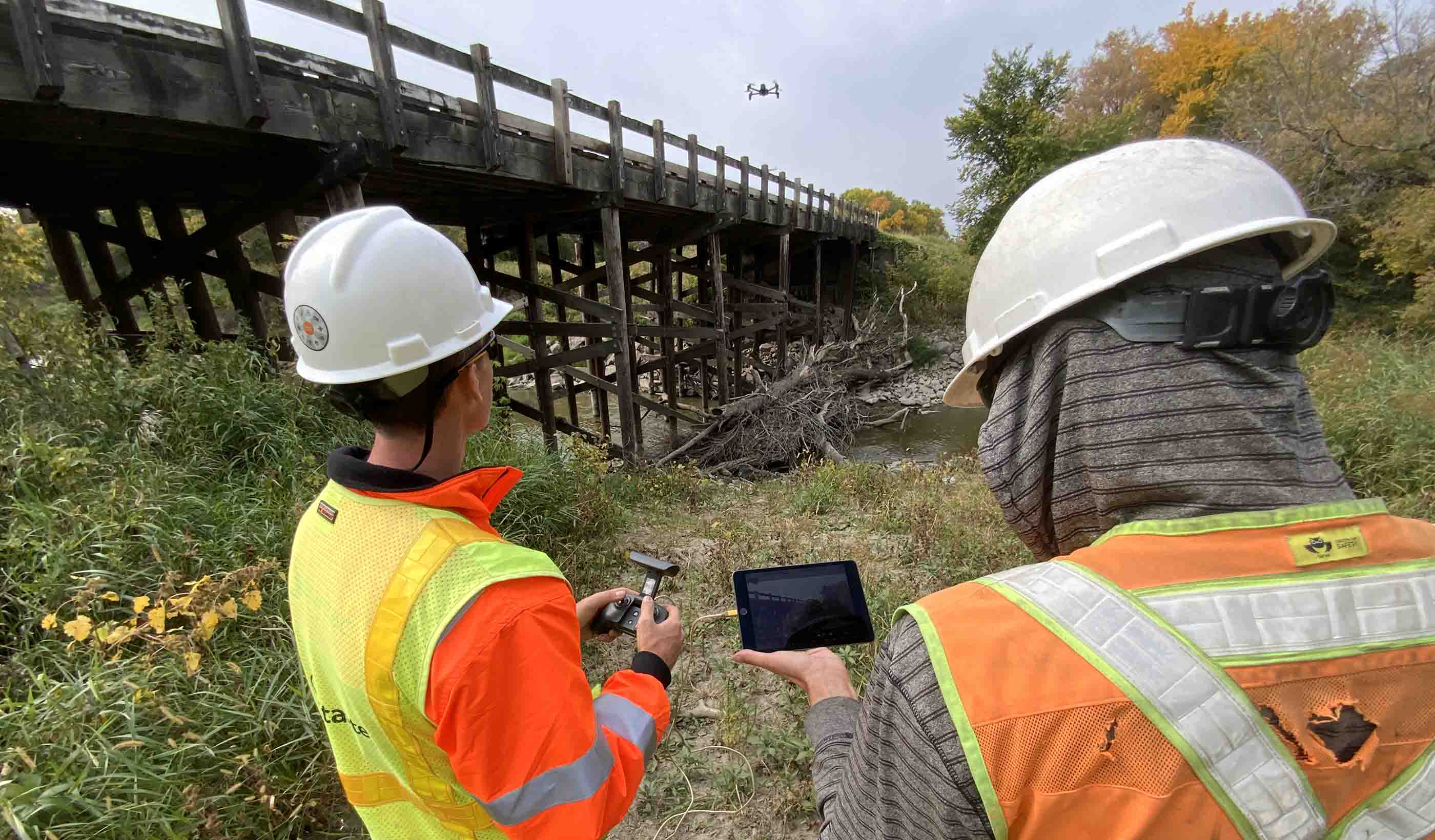

Video How drones are making bridge inspections safer and more efficient

-

Podcast Stantec.io Podcast: Stantec Beacon™

-

Published Article Canadian funding to advance mining’s ESG projects

-

Blog Post Stream restoration is critical for mine reclamation

-

Webinar Recording Potable reuse: Achieving water sustainability in a changing climate

-

Webinar Recording Be prepared for the unexpected

-

Published Article Turning tailings waste into value

-

Blog Post Inclusive student housing: The needs, numbers, and nuances

-

Whitepaper Rationalizing the SEC’s Enhanced Climate and ESG Disclosures

-

Blog Post Trauma-informed care: Indigenous healthcare designed for empathy, wellness, and safety

-

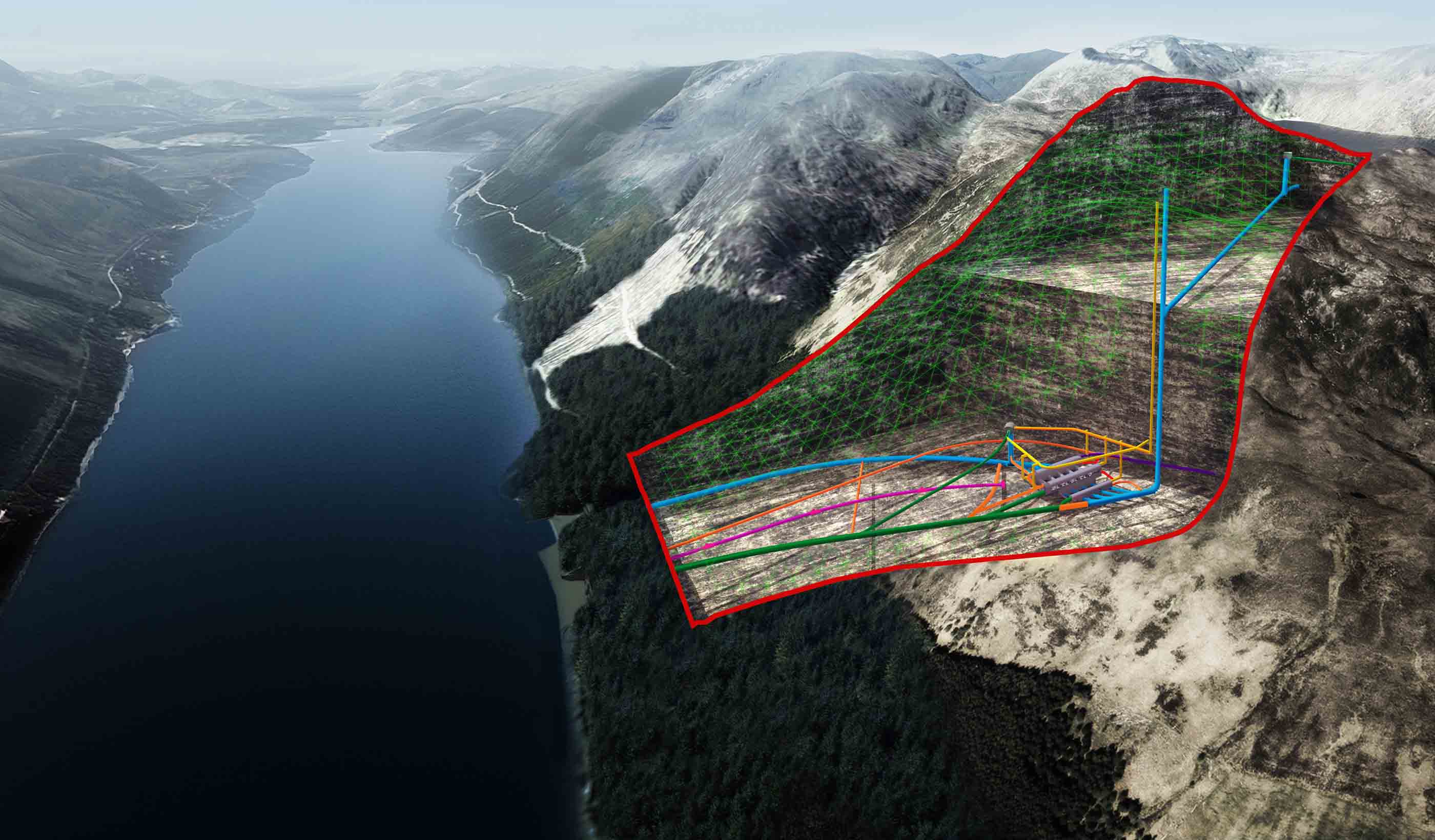

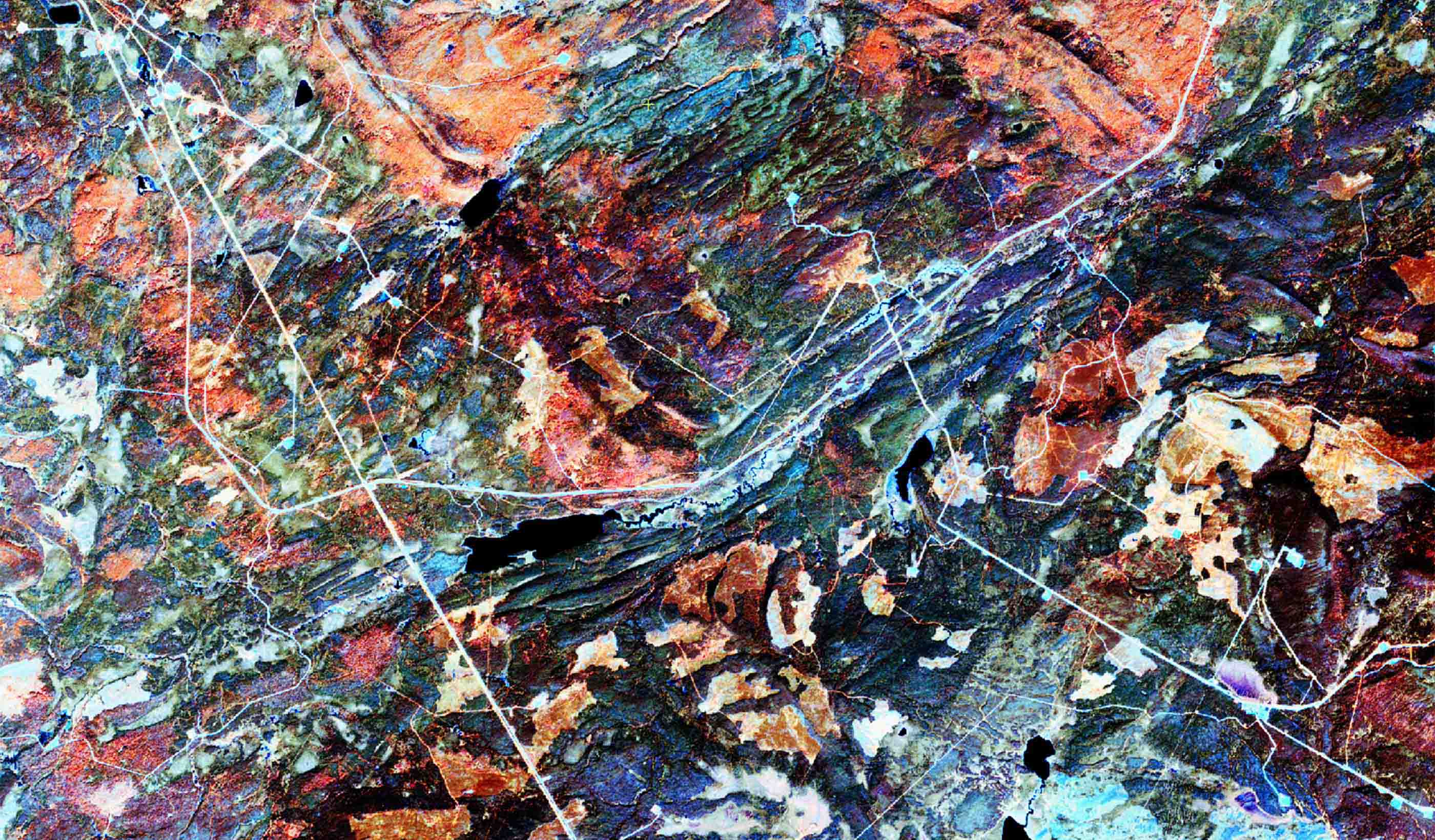

Published Article Integrated digital approach to managing geohazard risk

-

Published Article Analysis: Complexity and controversy cloud US permitting reform

-

Blog Post 5 key steps to put community at the center of transit and development

-

Published Article Detecting leaks using remote sensing pipeline monitoring

-

Published Article Master planning in an age of turbulent change

-

Blog Post Bats in buildings: How airborne eDNA can help you identify bat species at risk

-

Video Stantec Beacon™ and Travers (the largest solar facility in Canada)

-

Published Article Multi-pronged trenchless rehab approach

-

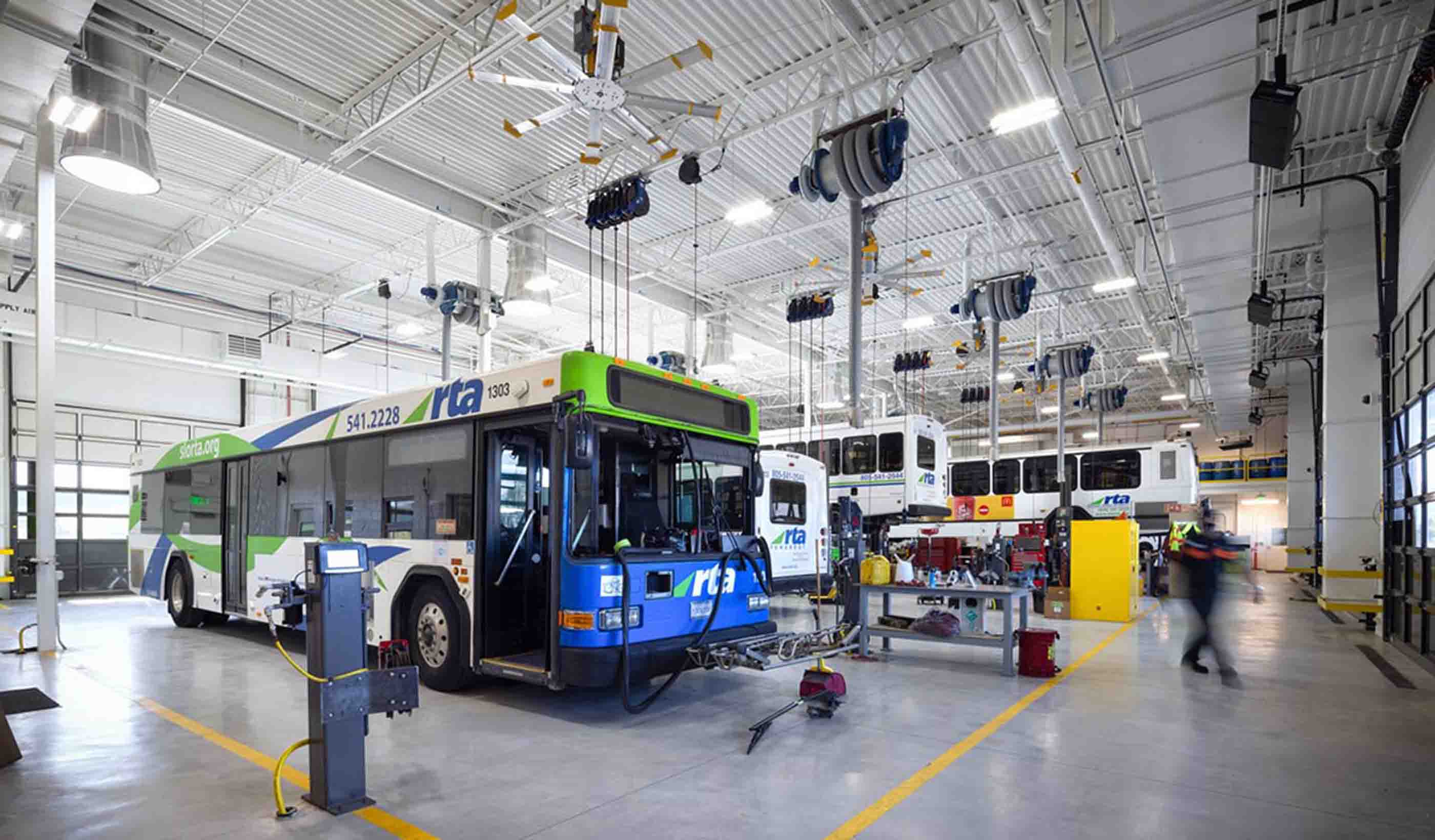

Blog Post Zero emissions buses and the energy transition: How do transit agencies adjust facilities?

-

Published Article How design in education is shaping workplaces of the future

-

Video Implementing AV Applications at Airports

-

Webinar Recording Get ahead of FEMA’s Future of Flood Risk Data Initiative

-

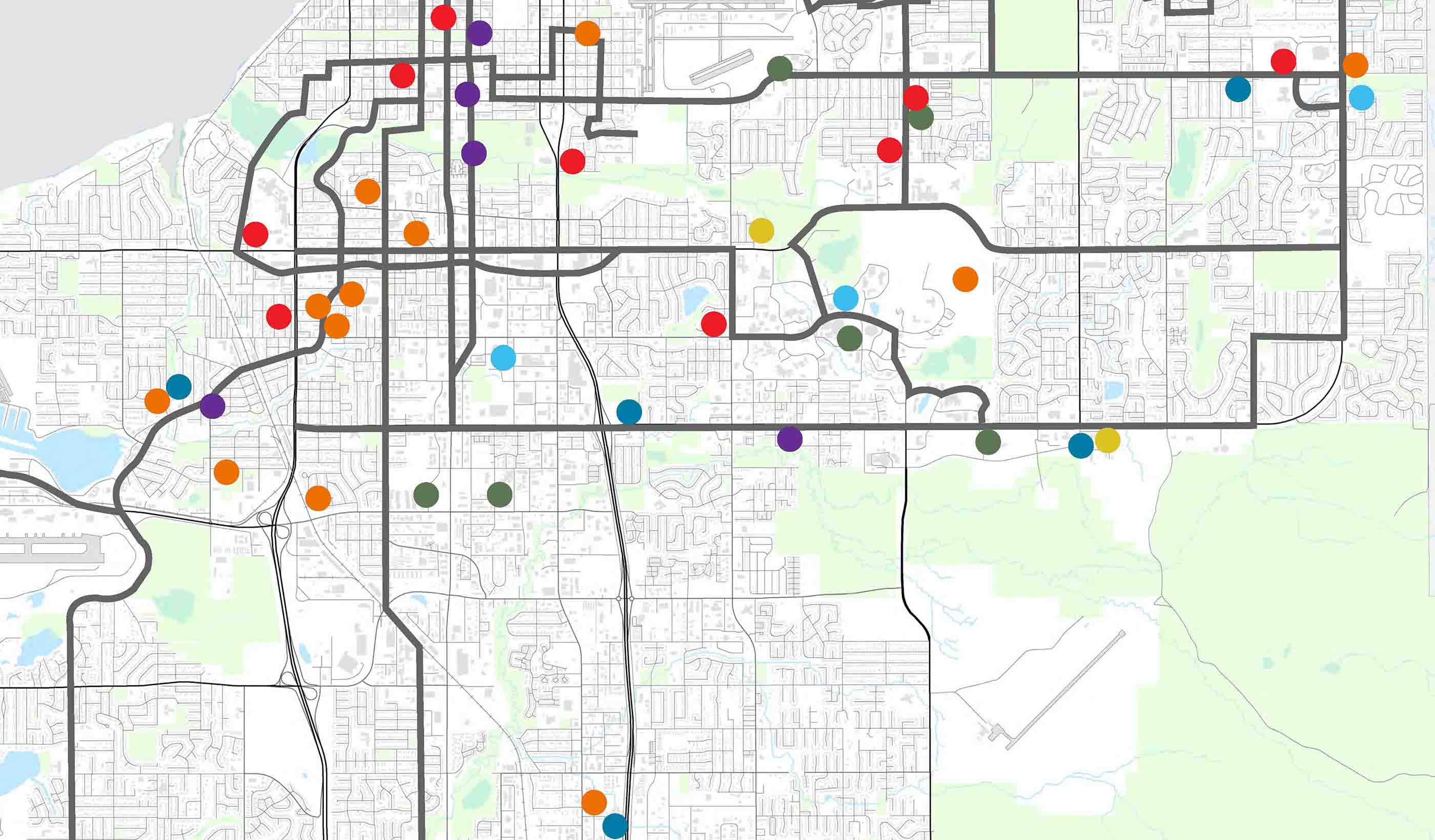

Blog Post How water utilities can develop a digital twin and use GIS mapping to meet future needs

-

Published Article Time for transformative thinking in western water

-

Video How to repeatedly save your client time and money with Stantec Beacon

-

Published Article Elevating community mental health

-

Published Article From A to Gen Z: Designing better workspaces for everyone

-

Report Real-world Examples of Biological Monitoring with Environmental DNA (eDNA)

-

Published Article Consultant Q&A: Stantec's Firas Al-Tahan on Evolution of Mobility, Design-Build

-

Blog Post 8 challenges holding back vertical farming facilities

-

Published Article Net zero potash mining

-

Webinar Recording The 2023 proposed revisions to the steam electric ELGs are out – now what?

-

Published Article Crews cut and roughen Colorado dam for $531M raise project

-

Video Improving transit safety and efficiency with the innovative Yard Control System

-

Video Retrofitting Hammond, IN’s downtown core from car-centric to a walkable neighborhood

-

Blog Post Don’t let construction ground your airport: 5 ways to manage traffic disruptions

-

Blog Post From microchips to canola oil, manufacturers want to build factories in North America

-

Blog Post A modular construction solution to the mental healthcare crisis

-

Blog Post How to fast-track climate change actions by using nature to reduce greenhouse gases

-

Blog Post Can emerging alternative delivery processes result in better hospital design?

-

Published Article Assessing, remediating and performance monitoring abandoned mine sites

-

Blog Post 3 steps to fully accessible and barrier-free transit

-

Blog Post 3 ways municipalities can use agile financial planning to prepare for unexpected events

-

Blog Post How is California tackling water sustainability with data? What does it mean elsewhere?

-

Blog Post Healthy roots: How to create educational spaces for early developmental success

-

Video Workplace Reboot: 4 ways buildings and property owners can decrease potential legislation penalties

-

Whitepaper How do environmental, social, and governance (ESG) and climate factors enhance valuations?

-

Webinar Recording Impacts of the new PFAS Maximum Contaminant Levels (MCL) and what you can do

-

Video Keeping communities connected through a modern bridge replacement

-

Published Article Drought management lessons from around the globe

-

Video Our underwater archaeology team explores Canada’s past

-

Published Article Wareham Dam interim spillway repairs

-

Published Article Paging All Future Veterinarians

-

Blog Post Fish passage: Fixing culverts is key to better stream habitat for salmon, other species

-

Published Article Emerging trend: Wind turbines paired with energy storage

-

Webinar Recording Design and operation of chloraminated water systems

-

Publication Design Quarterly Issue 18 | The Future of Design

-

Podcast Stantec.io Podcast: The GlobeWATCH Episode

-

Video What’s so great about integrated design?

-

Published Article How the latest technology makes better buildings

-

Blog Post Proton therapy design (Part 2): Tracing the patient journey

-

Blog Post Water affordability: How can utilities help their most vulnerable customers?

-

Published Article Evolving roles of the workplace designer

-

Blog Post How design can support mental health through crisis stabilization centers

-

Blog Post VR and community outreach programs: Building social acceptance with immersive technology

-

Published Article How does innovation in mining accelerate ESG goals?

-

Blog Post New York City’s Local Law 97 is fighting climate change, focusing on sustainable buildings

-

Blog Post PFAS in Canadian provinces: Where are the regulations?

-

Published Article Required: Clean Mine Power Solutions

-

Blog Post 6 design approaches that humanize cancer care amid technology advances

-

Published Article How to curb skepticism around Nature-based Solutions

-

Blog Post Across ever-changing landscapes, technology keeps our pipelines safe from geohazards

-

Blog Post 3 key steps to successful utility design for the telecommunications industry

-

Published Article The right ingredients for tailings management

-

Blog Post Designing for neurodiversity: Creating spaces that are inclusive of all

-

Blog Post How can machine learning help water utilities find lead service lines?

-

Blog Post Finding funding: New opportunities abound for the mining industry

-

Webinar Learn strategies to guide our communities in unlocking new urban opportunities

-

Blog Post Successful Indigenous school design: Listening, understanding, and acting

-

Video Growing native plants for better ecosystem restoration projects

-

Video Workplace Reboot: Understand the penalties for not complying with emissions legislation

-

Blog Post How can schools build strong career and technical education programs? Partnerships

-

Video Collaborating with communities to create stormwater solutions

-

Podcast Stantec.io Podcast: The Soil Risk Map Episode

-

Blog Post What is an innovation center? A collaborative workspace that’s essential in today’s office

-

Published Article Is your healthcare facility resilient enough?

-

Blog Post How prioritizing environmental justice in project planning can reduce discrimination

-

Blog Post Developing wastewater’s circular economy: How do we create a market?

-

Blog Post Desalination: Leveraging the potential of seawater

-

Blog Post Electrifying ferries can help us leverage crucial waterways while reducing emissions

-

Blog Post How does a mine site in the desert find water?

-

Blog Post Water and energy: A symbiotic relationship

-

Blog Post Water supply in the West

-

Blog Post Harnessing water to meet Canada’s renewable energy goals

-

Publication Research + Benchmarking Issue 03 | Planting Seeds

-

Blog Post Consider environmental due diligence as part of project planning—it can save time, money

-

Video Testing emerging AV technologies at airports

-

Published Article Development, Environment, Community: A Q&A with Stantec President and CEO Gord Johnston

-

Published Article Navigating the Complexity of the Hybrid Workplace Design Process

-

Blog Post Savings without sacrifice: Value engineering can counter inflation on projects

-

Podcast Stantec.io Podcast: The Smart Cities Episode

-

Blog Post Why biodiversity matters to your business after COP15

-

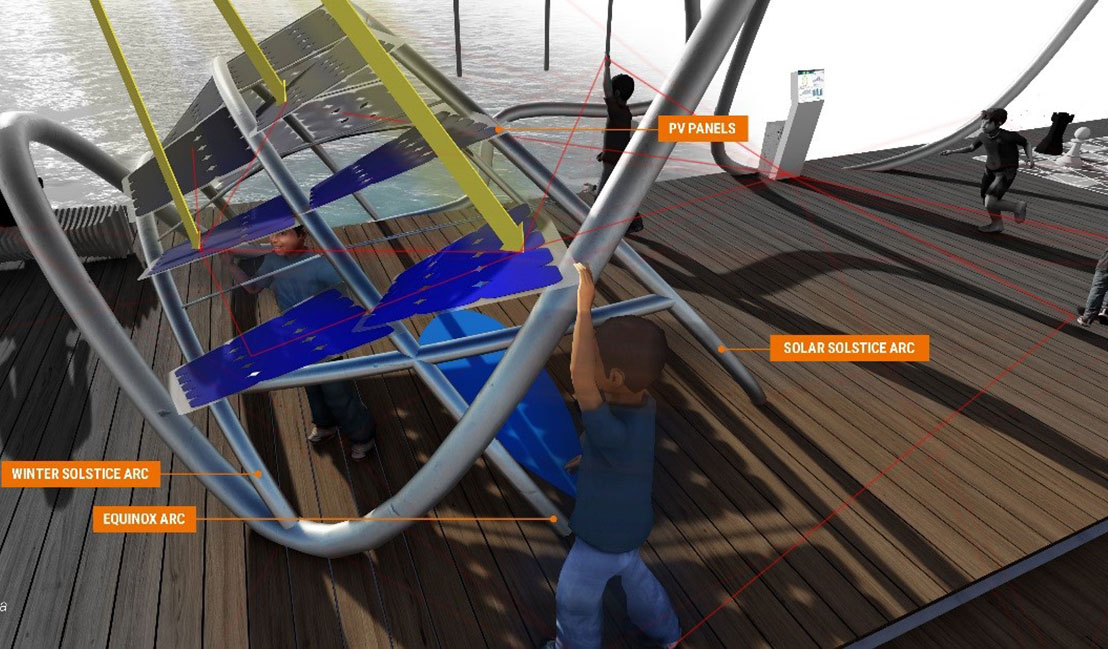

Published Article Net Positive Energy for People

-

Published Article Creating Better Spaces

-

Blog Post 5 trends from CES that will majorly impact the built environment

-

Report One Water, One Future: A promising water pricing model for equity and financial resilience

-

![[With Video] New city regulations are driving building retrofits](/content/dam/stantec/images/projects/0127/the-westory-181249.jpg)

Blog Post [With Video] New city regulations are driving building retrofits

-

Published Article The Mighty Bus: Transit Hero of Our Growing Community

-

Blog Post Debunking 7 myths and embracing a universal design mindset

-

Podcast Design Hive: Robyn Whitwham on designing for mental health

-

Blog Post Today’s parking lots are tomorrow’s mobility hubs. Why is it a better use of space?

-

Published Article Thinking Water: Challenges and opportunities of water management

-

Published Article A toilet in the middle of nowhere – with Derek Chinn

-

Published Article Expanding reservoirs to provide water security for communities

-

Published Article Net Zero: At the turning point

-

Published Article Tailings Management: A global concern

-

Published Article Challenges for the modernization of the Baygorria plant, Uruguay

-

Publication Design Quarterly Issue 17 | New Design Essentials

-

Published Article Mining EPCM Terms of Engagement

-

Blog Post Better than ever: 5 things becoming a parent taught me about pediatric design

-

Report Economic trends examined in Stantec Water’s new financial benchmarking report

-

Blog Post 7 ways we can design more resilient healthcare projects today

-

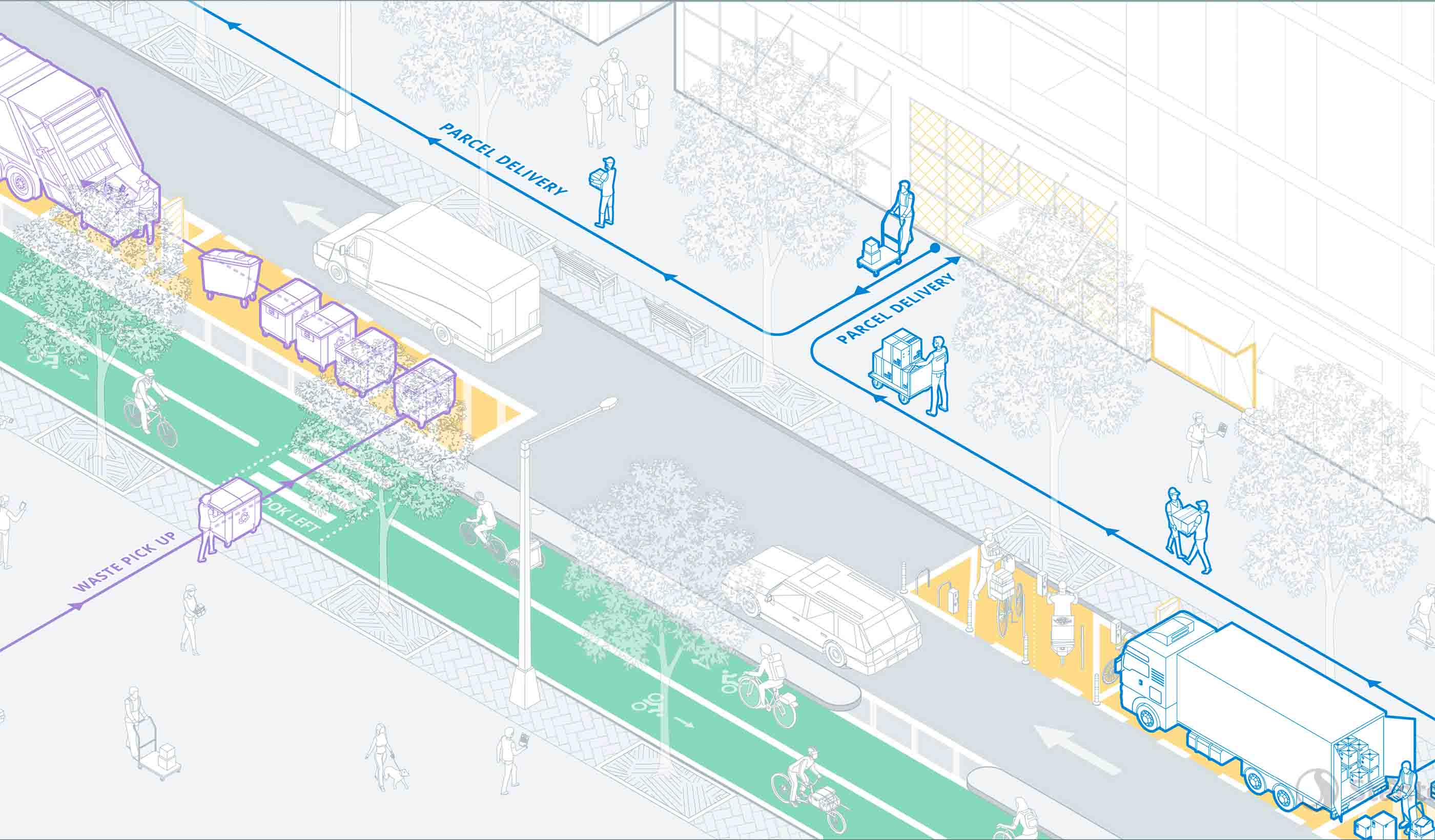

Report Delivering the Goods: Urban freight in the age of e-commerce

-

Podcast Stantec.io Podcast: The Digital Twin Episode

-

Published Article The challenges and opportunities of nature-based solutions

-

Blog Post Small modular reactors: How the next wave of nuclear power can fuel the energy transition

-

Video Workplace Reboot: How carbon impact legislation will affect building owners

-

Publication Inside SCOPE Issue 2: Week 2 at COP27

-

Blog Post When freshwater mussel surveys are needed, eDNA can show the way

-

Published Article Should interconnection participant funding be reformed or replaced?

-

Blog Post Bond programs 101: My school district needs money. Where do we start?

-

Blog Post Do you want functional lighting or fantastic lighting? The difference is commissioning

-

Publication Inside SCOPE Issue 1: Week 1 at COP27

-

Video Climate finance—leading change on an international scale

-

Report How data-informed mobility solutions create more equitable communities

-

Blog Post One module at a time: The future of affordable housing

-

Published Article Why healthcare masterplanning needs engineering masterminds

-

Blog Post 3 reasons why dam owners should prioritize adequate instrumentation and data

-

Published Article Global Hydropower Day: Stantec

-

Published Article Water in a Warming World

-

Published Article Nondestructive Testing: The key to bridge longevity

-

Blog Post Envisioning the future of your healthcare network: How to develop a campus master plan

-

Blog Post Adapting our green spaces for a changing climate

-

Podcast Stantec.io Podcast: The Community Engage Episode

-

Podcast Stantec.io Podcast: The Digital Strategy Episode

-

Blog Post Using well-being, student input, and stewardship to design a WELL school

-

Video What is COP?

-

Published Article Pumped storage advocates see bright future due to new tax credits, reliability needs

-

Published Article Why mine operators should conduct energy audits

-

Webinar Recording Sustainable mine closure and rehabilitation, responsibly caring for land

-

Published Article Small scale hydroelectric power plants used to possibly solve California's energy crisis

-

Published Article Nature-based protections against storm surges

-

Blog Post Family’s cancer journey helps designer turn fragile moments into better projects

-

Published Article The Kenaitze Indian Tribe welcomes a new education center in Alaska

-

Podcast Digital frontiers, reimagining science, engineering, and design

-

Published Article The other shoe is dropping: Engineering solutions to over-sized carbon footprints

-

Published Article Up for the Challenge

-

Blog Post The rise of process intensification: Big gains with small footprints

-

Video Long Island Rail Road Third Track: expanding the busiest commuter line in the US

-

Published Article Pumped storage hydropower acts as a “water battery” that can sustainably power communities

-

Published Article US Offshore Wind Outlook

-

Video Cascading Climate Events and Predictions: Virtual Weather and Debris Flows

-

Video Building Decarbonization: The Urgency and Value

-

Video When is Solar Energy Green Enough

-

Video Cleaning Up the Mess: Quantifying Carbon Offsets with NbCS

-

Video How Remote Sensing Helps Call Out Climate Change Impacts Before Impact

-

Video It’s a Numbers Game: Using New Sensor Data to Achieve Climate Change Goals

-

Video Chasing Butterflies with our Eyes in the Sky

-

Video PipeWATCH: The Pipeline Protector From Outer Space

-

Video A Clear Lifesaver: Safeguarding water resources with Stantec’s WaterWATCH

-

Video Managing our Largest and Most Valuable Public Asset: Our Roadways

-

Video Measurements With Merit: Evolving Data Collection to Enable Integrated Decision-making

-

Video Optimizing a Zero Cost Energy Future for the Water Industry

-

Video Operations of the Future: Using Artificial Intelligence to Run a Water Treatment Facility

-

Video The Benefits of Getting Your Head in the Cloud with FAMS

-

Video How We’re Using Machine Learning to Deliver Rapid Probabilistic Flood Predictions

-

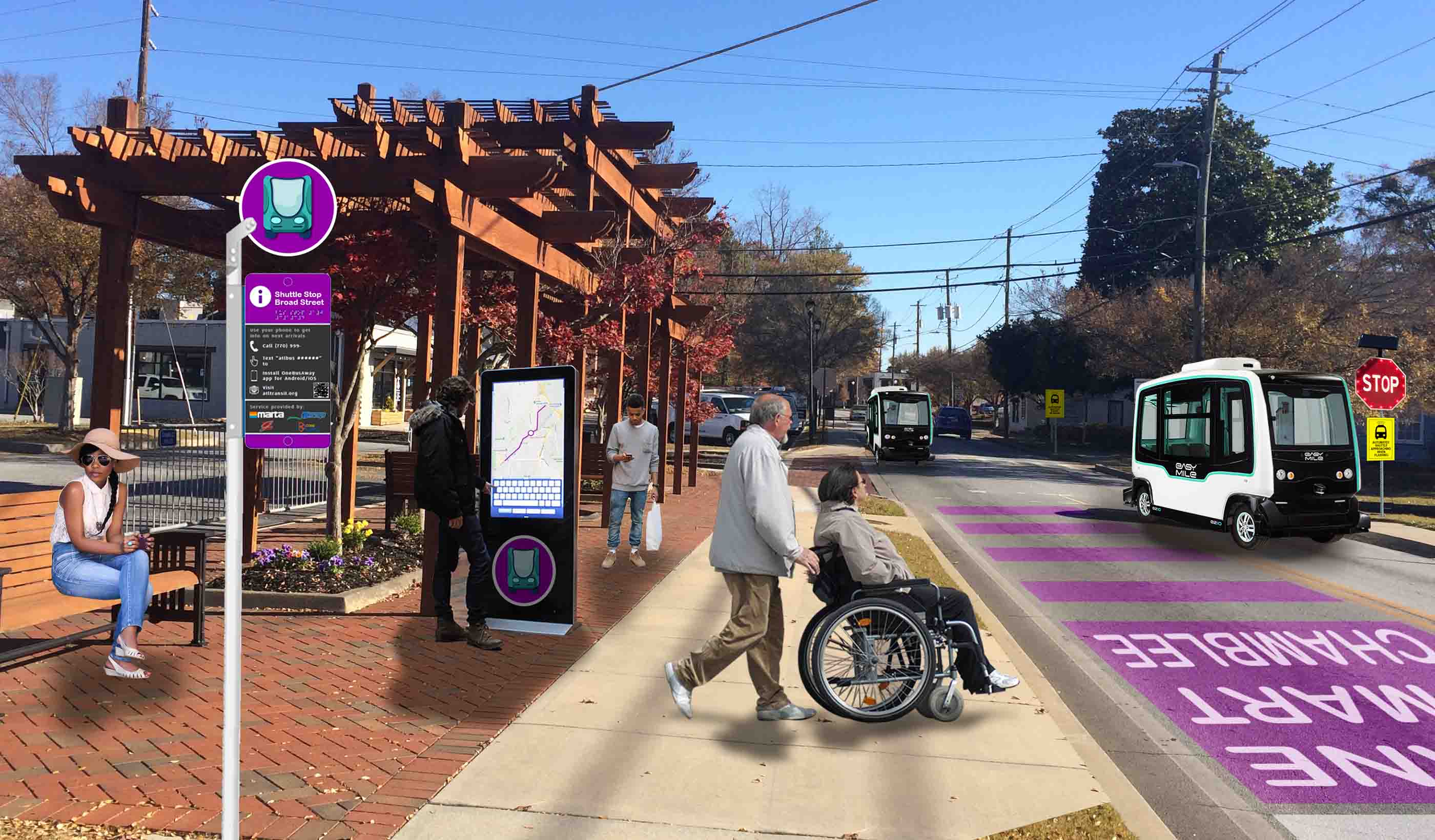

Video Smart(ER) Mobility Respects the Past with Future Thinking

-

Video The Robots Are Coming. Are you ready?

-

Video Mapping the Route for a Zero-Emission Future

-

Video Buildings that Evolve: Design for Future-readiness

-

Video Digital Cities - Fire Flow

-

Video Planning a Smart City or Infrastructure? Don’t Start with Technology

- Blog Post Bonn or bust: How can we get the most from limited climate finance?
- Article Our guide to COP27
- Published Article How mega events can be catalysts for change
- Blog Post Designing for a client’s nature-driven mission
- Report Equity in Stormwater Investments
- Published Article Regulation Roundup: How to manage excess soil through “the pause” in Ontario
- Blog Post 6 things wastewater treatment plant owners need to know about PFAS
- Blog Post It takes a village: Managing water in a time of climate change
- Blog Post How can utilities prepare for the next big storm?
- Blog Post All about energy resiliency: How different countries are adapting to extreme weather
- Blog Post Supply and demand: Energy security in the UK
- Published Article Mitigating the social impacts of mining projects
- Blog Post 7 big ideas for revitalizing the urban realm
- Podcast Design Hive: Daryl Fonslow on adaptive reuse to net zero
- Podcast Stantec.io Podcast: The FAMS Episode
- Blog Post The mining energy crisis: Are the industry's net zero targets too ambitious?
- Blog Post How can technology protect against extreme weather events?
- Video Coming together to restore a Colorado treasure – the Alamosa River
- Published Article Plugging offshore wind power into our energy grid
- Blog Post Eastern promise: The compelling case for green hydrogen in Atlantic Canada
- Video Workplace Reboot: Building consensus - a key (and fun!) aspect of workplace strategy
- Blog Post Why Florida’s great untapped opportunity lies in its suburbs
- Blog Post Measuring trees and tracking carbon sequestration from the sky
- Video Stantec’s Buildings Team celebrates 10 years of being at the forefront of design
- Published Article From necessity to amenity: changing the perception of storm water management
- Podcast The SCOPE Episode 2: Sustainable Streets
- Blog Post Energy efficiency: The first step in decarbonizing a mine
- Blog Post Developing wastewater’s circular economy: How do we gain public acceptance?
- Cleaner energy from waste
- Blog Post Putting the people back in operations and maintenance facilities
- Publication Design Quarterly Issue 16 | Climate Risk
- Published Article UT Dallas Sciences Building Uses Zinc to Put Science on Display
- Blog Post Gen Z water engineer: Climate change is the space race of my generation
- Blog Post 5 steps companies can follow to change unused spaces into eye-popping pollinator habitat
- Podcast Sustainability, Innovation and Failure to Launch
- Published Article Woodlawn Cemetery could help solve mystery of Tampa’s erased Black cemeteries
- Blog Post Rethinking air pressure in operating rooms could save lives
- Technical Paper Simulation of some debris flows in Klanawa watershed in Vancouver, British Columbia
- Video Workplace Reboot: A glass concourse transformed creates an urban, big city vibe
- Published Article Brick-and-Mortar Retail Design: Reimagining the Way We Shop
- Technical Paper A comparison of two runout programs for debris flow assessment at the Solalex-Anzeindaz region of Switzerland
- Published Article Air Control
- Blog Post Even popular retail malls are reevaluating and refocusing to amplify experiences
- Blog Post The future of the Cahill Expressway
- Published Article Increasing capacity and funding for rural communities
- Published Article Building an electric vehicle program: Where should cities start?
- Our approach: The Climate Solutions Wheel
- Blog Post [With Video] 7 approaches to creating affordable housing for artists
- Published Article How high-performance buildings attract high-performing people
- Podcast Stantec.io Podcast: The CommunityHQ Episode
- Technical Paper Improving Environmental DNA Sensitivity for Dreissenid Mussels
- Technical Paper Detecting bat environmental DNA from water-filled road-ruts in upland forests

-

Published Article Texas Stages Development of Statewide Flood Plan

-

Blog Post Designing for gender inclusivity in industrial facilities

-

Technical Paper Evaluating environmental DNA metabarcoding as a survey tool for unionid mussel assessments

-

Video Constructing a new park and transportation corridor on Manhattan’s shore

-

Published Article Riding the Risks and Opportunities of ESG in Mining

-

Presentation Saltair study guides development in areas that may be subjected to natural hazards

-

Blog Post Artificial intelligence and machine learning can defuse water’s ticking time bomb

-

Blog Post Rethinking resuscitation rooms: A new approach for a vital healthcare space

-

Technical Paper Lessons learned from the local calibration of a debris flow model and importance to a geohazard assessment

-

Technical Paper Informing zoning ordinance decision-making with the aid of probabilistic debris flow modeling

-

Blog Post How can businesses and policy makers achieve climate justice?

-

Podcast Design Hive: Dr. Rick Huijbregts on smart cities

-

Blog Post Avoiding the wrecking ball: Restoring purpose in aging commercial properties

-

Video A new spin on an old bridge type for safer travel across Kentucky’s Green River

-

Blog Post 4 challenges to overcome when transmitting offshore wind power

-

Published Article Sustainability by design

-

Published Article Stronger Together: Indigenous Peoples and Mining

-

Blog Post Freshwater mussels 101: Explaining the “aquatic archaeology” behind mussel relocation

-

Blog Post 5 reasons why your company should address greenhouse gas emissions

-

Published Article Automated vehicles: Plan now, switch on later

-

Blog Post 7 approaches to building reuse to help existing properties reach net zero

-

Video Workplace Reboot: The challenge – modernize, relocate, expand, sustain

-

Podcast Stantec.io Podcast: The EnviroExplore Episode

-

Video Home is Where the Art is

-

Published Article A new era of downtown opportunity

-

Blog Post Proton therapy design (Part 1): Putting the patient experience first

-

Blog Post 4 ways to make sure your connected-infrastructure technology program is … well, connected

-

Published Article Modeling Post-Wildfire Debris Flow Erosion for Hazard Assessment

-

Published Article Retail, Restaurant Industries Embrace Post-Pandemic Design Shifts

-

Technical Paper Environmental genomics applications for environmental management activities

-

Published Article Using Environmental DNA (eDNA) to Improve Biological Assessments

-

Blog Post Buildings that care: 6 things to know about WELL certification in hospitals

-

Published Article A trip through British Columbia in the life of a shipping container

-

Blog Post Data + Design = Decision

-

Blog Post Are we finally about to achieve sustainable fusion energy?

-

Blog Post Can gaming influence human behavior when it comes to combating climate change?

-

Hydro batteries: Making renewables dispatchable

-

Blog Post What if simply restoring a closed mine site isn’t the best option?

-

Blog Post Can data improve dam safety?

-

How can solar canopies help us electrify our industry?

-

Published Article Treatment Trends

-

Blog Post What if the energy industry and environmentalists worked better together?

-

Blog Post For your next urban playground or revitalization, consider nature-based solutions

-

Blog Post Mapping your culture: How to help communities identify their heritage landmarks online

-

Blog Post A different West Side Story: How a boulevard changed Manhattan

-

Blog Post Breathe easier: Mitigating welding hazards with ventilation and exhaust

-

Blog Post Making smart asset choices from imperfect asset data

-

Blog Post Surveying Hawaii’s Kalaupapa Peninsula: Connecting a rich past to an exciting future

-

Blog Post Reflecting on Frederick Law Olmsted’s legacy for World Landscape Architecture Month

-

Podcast Stantec.io Podcast: The Route Selector Episode

-

Video Workplace Reboot: What’s so great about Beauty and Awe?

-

Blog Post The 4 pillars of ecosystem restoration work together to create better communities

-

![[With Video] 4 reasons we’re excited about our new automated design tool](/content/dam/stantec/images/ideas/blogs/021/audet-design-automation-graphic.jpg)

Blog Post [With Video] 4 reasons we’re excited about our new automated design tool

-

Published Article Shrink Your Carbon Footprint

-

Blog Post How two scientists collaborated to develop a tool for better stream restoration design

-

Blog Post Developing wastewater’s circular economy: How do we pay for it?

-

More Energy Independence in Indigenous Canada

-

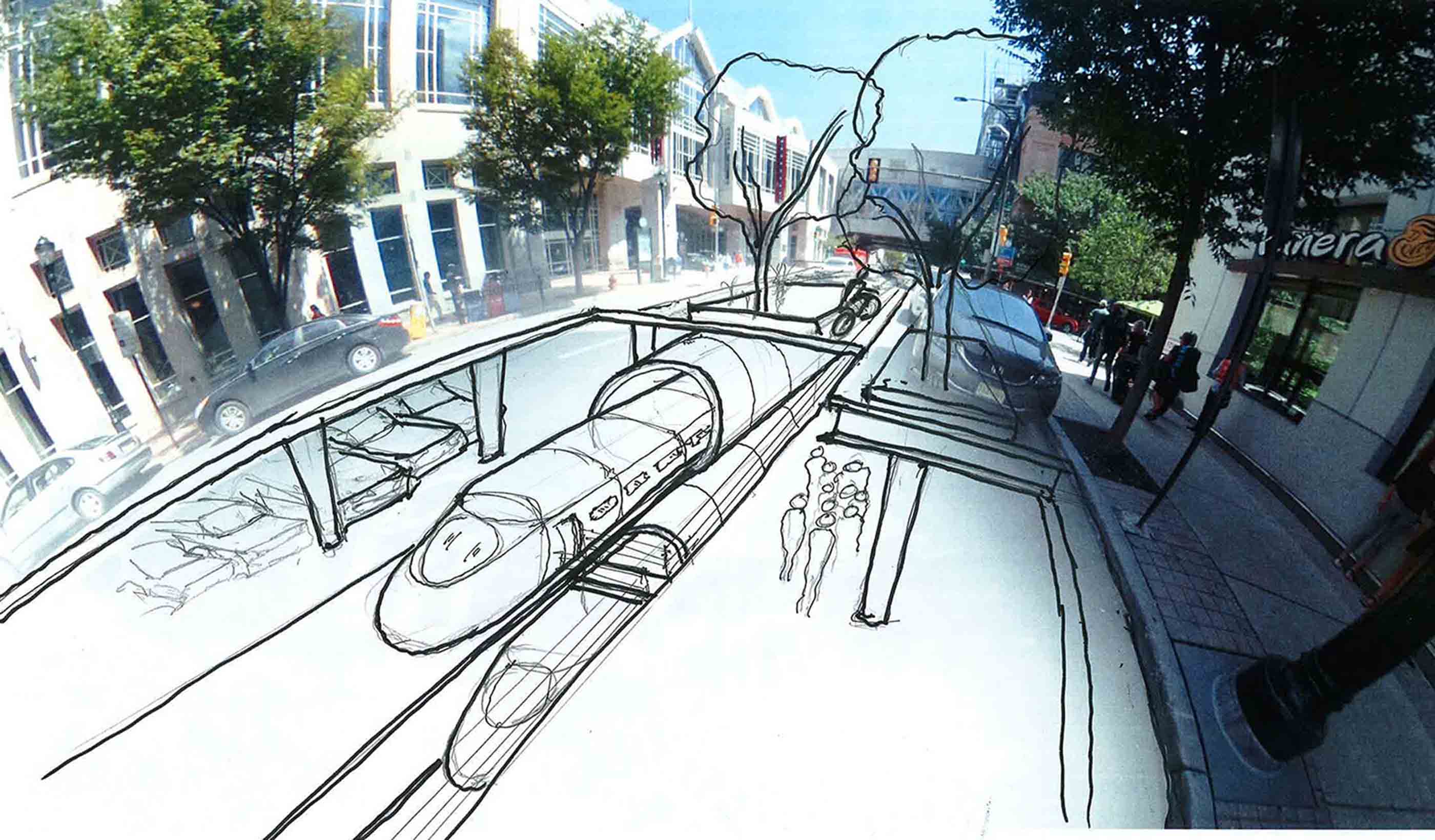

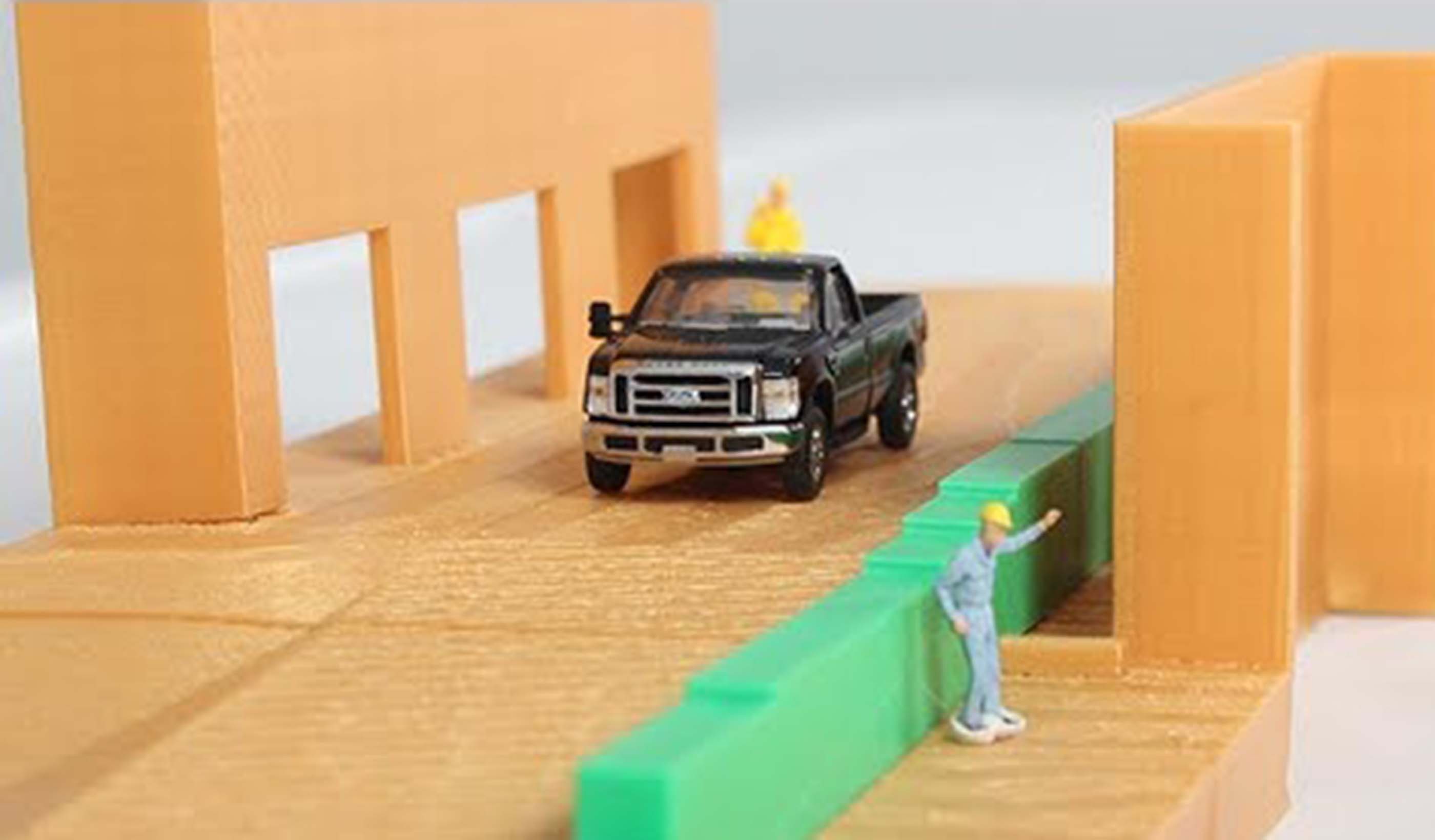

Blog Post Urban highway removal: 4 ways to reknit a city’s fabric

-

Blog Post A new tool to protect marine environments from wastewater nutrients

-

Publication Design Quarterly Issue 15 | Reuse and Revitalization

-

Blog Post Designing living systems to adapt to a changing climate

-

Video A channel runs through it: How we addressed the challenges of urban creek restoration

-

Blog Post Reinventing the Thompson Center: A research project into a modular, mixed-use destination

-

Published Article Deeper mines are tantalizing but costly

-

Blog Post Arc flash hazards: How engineering and standards can make for safer electricity use

-

Blog Post 3 design solutions to sort out the carbon impact of logistics

-

Blog Post Groundwater plays a critical role in climate change adaptation

-

Video Workplace Reboot: A reimagined office building becomes a magnet for both employers and employees

-

Blog Post Creating memories in a supportive home away from home

-

Blog Post Why we all need to care about mining

-

Blog Post Is your infrastructure facing climate risks? Here’s a tool to assess that

-

Podcast Stantec.io Podcast: The Fire Flow Episode

-

Podcast What does the future hold for autonomous vehicle policy and funding?

-

Blog Post Museum lighting case study: How a single exhibit embodies an impactful mission

-

Blog Post Smart resilience planning and design includes triple bottom line benefits

-

Published Article The future is now

-

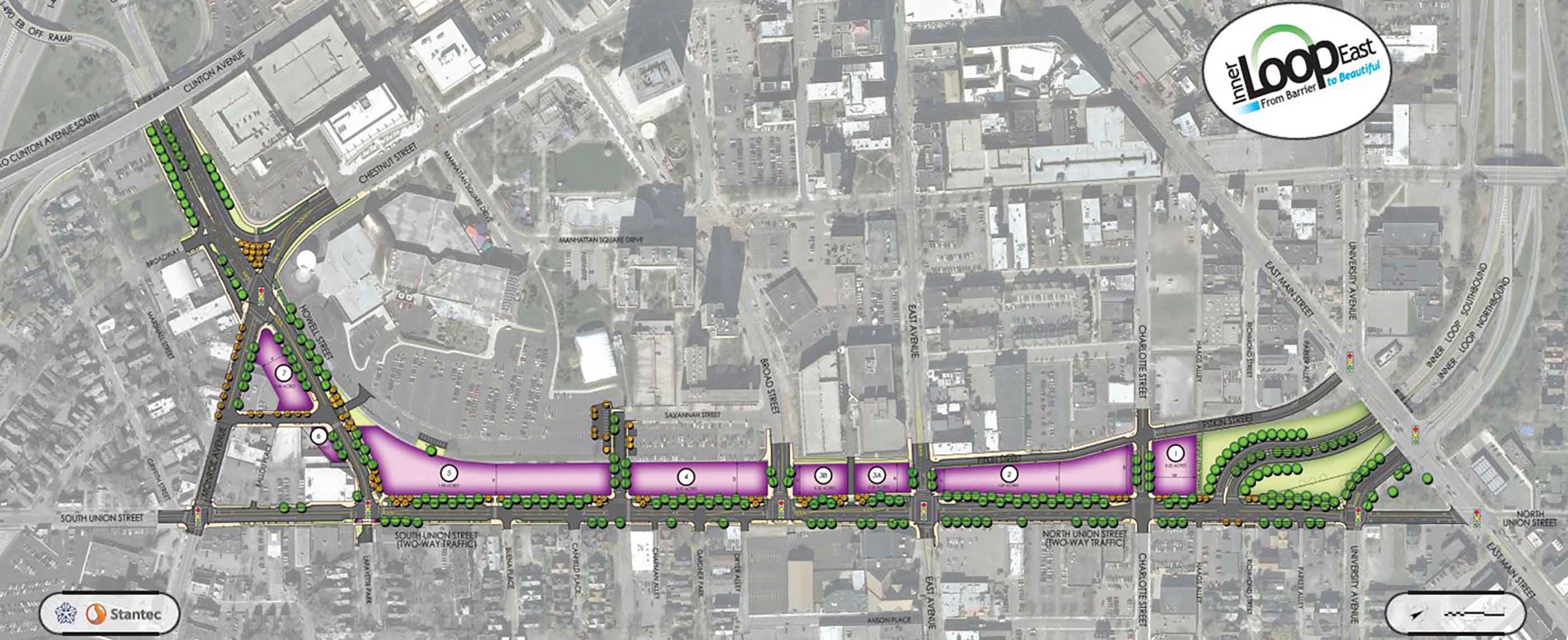

Published Article Urban highway removal projects are on the rise across America

-

Published Article Santiago Airport becomes the most modern in South America

-

Blog Post Breaking the bias: How two women are making spaces and places more inclusive

-

Published Article How to Plan Office Space When Temporary Becomes Permanent

-

Published Article 4 HVAC Strategies to Still Protect Against COVID-19

-

Published Article A Guide to Workplace Metrics, Data, and Research

-

Blog Post Water reuse and river flows: What can we learn from the LA River?

-

Technical Paper Pathways between climate, fish, fisheries, and management

-

Video Planning for the future of healthcare in Ontario

-

Blog Post The (remote) doctor is in: 5 ways telehealth is changing design

-

Podcast Mining Stock Daily: AME’s Roundup Day 3 Recap

-

Blog Post Design through stories: Experience-based design in pediatric healthcare

-

Video Workplace Reboot: There's more to lighting design than meets the eye

-

Published Article Combining Generic/Flexible Labs with Highly Specialized Research Space

-

Article Stantec’s letter to the US Department of Transportation on its 2022-26 strategic plan

-

Blog Post Resilience checklist: 10 steps to improving your community’s resilience

-

Publication Research + Benchmarking Issue 02 | Connected

-

Webinar Recording Climate Solutions Webinar Series: Climate solutions that work

-

Published Article Water analytics data helping the rise of smart utilities

-

Blog Post The new low-carbon suburb: Retrofitting communities for success in the post-COVID era

-

Podcast Stantec.io Podcast: The Connect Episode

-

Published Article It takes two: How architects and engineers blend their talents

-

Blog Post What is design automation, and how can you optimize it for your next project?

-

Webinar Recording Climate Solutions Webinar Series: Navigating climate change terminology

-

Video Workplace Reboot: Law firm takes the hybrid approach

-

Blog Post Railroad crossings: Involving locomotive engineers to better understand crash risks

-

Webinar Recording Climate Solutions Webinar Series: Why now, and why us?

-

An energized future

-

Published Article 2021 Trenchless Q&A: A Look at Trenchless Engineering in Canada

-

Published Article Smarter care at Vaughan’s first hospital

-

Published Article St. Petersburg, Florida: Coastal Resiliency and Community Sustainability

-

Technical Paper Assessing the use of a SWA for treating oil spills in Canada’s freshwater environments

-

Report Quick and creative street projects

-

Video From airside to landside: Diversifying the airport program

-

Blog Post How do we shift to a circular economy? It starts with dumping the end-of-life concept

-

Published Article The importance of the author-verifier relationship in project management

-

Podcast Design Hive: Brenda Bush-Moline on health equity

-

Technical Paper New research quantifies risk to bats at commercial wind facilities

-

Published Article Tailings Management, Meet Science Fiction

-

Published Article Screening and Framework Considerations for a PEL Approach

-

Webinar Recording Lateral structure jacking - how to install a railroad tunnel in a weekend

-

Report The Infrastructure Investment and Jobs Act: How Agencies Can Position for Funding

-

Blog Post We can build healthier streets by prioritizing the human experience

-

Webinar Recording What’s blowing your way? Proposed new odor guideline and monetary environmental penalties.

-

Blog Post 4 keys to keeping mechanical systems running during a hospital renovation

-

Published Article UV-C Radiation to combat healthcare associated infections

-

Blog Post How to use funding trends to promote healthier lifestyles and better public space

-

Blog Post Combating the zebra mussel: What you need to know

-

Blog Post 4 ways smart utilities improve water infrastructure

-

Video GlobeWATCH: World Leading Remote Sensing Technology

-

Webinar Recording Ontario’s New Soil Regulation - Panel Discussion

-

Published Article Model builders

-

Blog Post Children’s play area design: How landscape architects set the stage for fun and games

-

Published Article Community-minded Design

-

Video For our US Audience: If We Built it Today – The Hoover Dam

-

Blog Post First Nations school design case study: Listen, learn, then design

-

Published Article Delivering pumped hydro storage in the UK after a three-decade interlude

-

Publication Inside SCOPE Issue 2: Week 2 at COP26

-

Blog Post EV is the bridge to transit’s AV revolution—and now is the time to start building it

-

Blog Post Managing the future: Why you should consider climate change risks on your next project

-

Blog Post Investigating PFAS: Key concerns for sampling programs

-

Technical Paper Aggradation, degradation in gravel-bed rivers mediated by sediment storage and morphologic

-

Publication Inside SCOPE Issue 1: Week 1 at COP26

-

Published Article No aspect of a mine will remain untouched

-

Blog Post A Smart(ER) approach to mobility: Prioritizing equity and resilience

-

Publication Design Quarterly Issue 14 | Tools and Data

-

Blog Post Student housing success: How P3 helped UC Davis meet its goals

-

Blog Post Brownfields, synchronized: Pros and cons of aligning development goals with remediation

-

Blog Post How is transit responding to reopening?

-

Published Article Hydropower: A Cost-Effective Source of Energy for Hydrogen Production

-

Blog Post Certainty in uncertainty: Machine learning can improve engineering decisions

-

Video Design inspiration for creating spaces to inspire your students, researchers, and staff

-

Published Article Deliver smart buildings using CSI Division 25, commissioning

-

Blog Post Does your company understand the risks of not transitioning to a low-carbon economy?

-

Blog Post Best places to produce hydrogen? Look at a topographic map

-

Blog Post Reconsidering waste in mining

-

Blog Post Clean transport: Reducing carbon emissions on our roads

-

How do we power rural communities? By providing off-grid solutions

-

Blog Post Combating climate change across Australia

-

Blog Post Climate emergency: How governments around the globe are tackling the crisis

-

Published Article Vent tech shortage hits amid automation push

-

Webinar Recording Asset Integrity: Key considerations for blending hydrogen in your pipeline

-

Blog Post Mechanical engineering and industrial buildings: Why we need to go above and beyond

-

Blog Post Microtunneling 101: Good things come in small packages

-

Webinar Recording Demystifying Hydrogen Fueling for Transit Fleets

-

Blog Post Microtunneling: The next big thing

-

Whitepaper The nature of wind-forced upwelling and downwelling in Mackenzie Canyon, Beaufort Sea

-

Published Article Mining’s ESG stewards

-

Video For our US Audience: Deadly Engineering - Power Plant Catastrophes

-

Blog Post What will the future campus look like?

-

Blog Post 3 emerging trends that put the “community” in community rec center design

-

Published Article Water Power

-

Blog Post Holistic heroes: How lighting designers use efficiency and modeling to boost performance

-

Blog Post Capturing carbon: How nature-based solutions help achieve net zero goals

-

Blog Post Tackling climate change: Identifying global opportunities in response to the IPCC AR6

-

Webinar Recording ESG, Net Zero Emissions and Climate Change Resilience for Manufacturing

-

Blog Post Protecting Florida homes from invasive plant species

-

Blog Post How to overcome the 3 most common obstacles in long-term community development

-

Blog Post Continuous, holistic, and equitable health communities

-

Published Article Hydroelectric Dam Conversion with Diaphragm Walls, Retention Systems

-

Published Article Energy storage using conventional hydropower facilities

-

Webinar Recording What to consider when converting office or commercial space to science use

-

Published Article Giants of Design on Corporate Trends

-

Blog Post To incentivise or to tax, or both? How we can reduce carbon in our infrastructure schemes

-

Blog Post Fully aware: How to track air pollutants and GHG emissions with near real-time data

-

Blog Post Elevating mobility infrastructure into a destination of its own

-

Blog Post A call for a global carbon offset banding system

-

Published Article Top 100 Green Design Firms

-

Blog Post 5 things to know before starting an ITS deployment

-

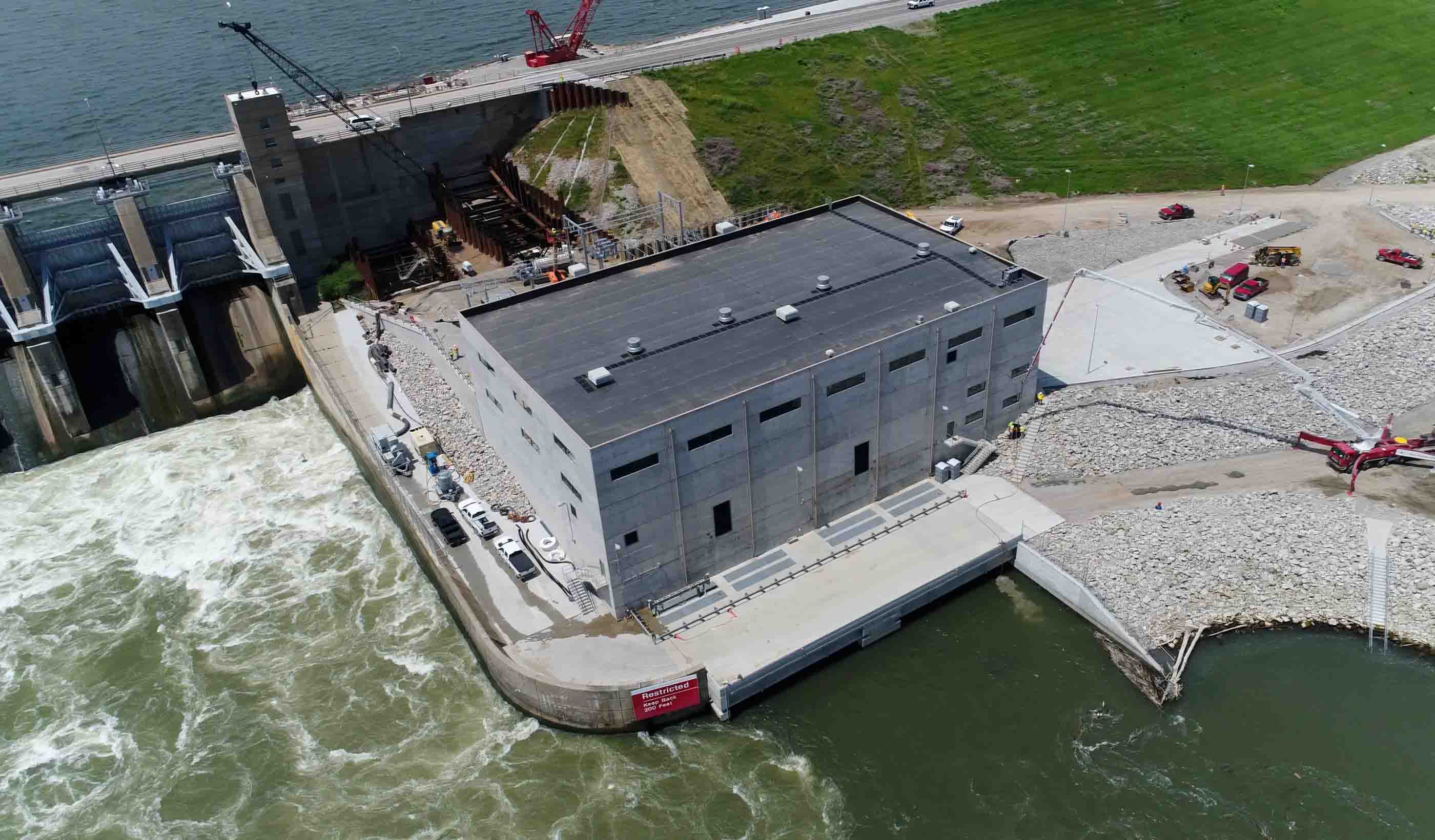

Published Article Red Rock Hydroelectric Project: Successfully Generating New Power from a Pre-Existing Dam

-

Webinar Recording All Hands on Deck: Building Strong Public Partnerships for Your Next Water Infrastructure Project

-

Published Article Storage tunnel will improve Narragansett Bay water quality

-

Published Article New bridge inspection techniques increase speed, efficiency, and safety

-

Blog Post 6 factors when planning a school sports facility

-

Published Article Back in Business: Minimizing Spread of Disease Remains Priority

-

Blog Post How wastewater’s circular economy can help fight climate change

-

Published Article Adapting to climate change in New York City

-

Blog Post Helping mines leverage the “S” in ESG

-

Blog Post The pandemic helped some utilities boost resiliency and delivery—while reducing risk

-

Published Article Everything’s bigger in Texas: How a P3 mega roadway project came to life

-

Published Article Environmental Technologies to Treat Selenium Pollution: Principles and Engineering

-

Blog Post Passports to a net zero carbon future

-

Published Article Life Extension and Savings through Field Testing and Engineering Analysis of a Draft Tube

-

Published Article Waste-to-energy tech could slash carbon emissions, but its promise remains underdeveloped

-

Published Article UAE Plans to Burn Mountains of Trash After China Stops Importing Waste

-

Published Article How to step into the net zero zone

-

Blog Post How New Orleans’ response to Hurricane Katrina improved public safety and economic growth

-

Blog Post Listening to the land to design an urban Indigenous healthcare facility

-

Blog Post Innovative technology in the water sector: It’s time to focus on needs over preferences

-

Podcast Design Hive: Rebel Roberts and Paul Sereno on the Niger Heritage project

-

Published Article Planning ahead - the importance of waste planning within developments

-

Blog Post Saving bats and generating more power: Acoustic data is the key

-

Report COVID-19 Recovery Strategies for Downtowns

-

Published Article Big Ideas

-

Blog Post Following the flames: Preparing for landslides in wildfire country

-

Published Article Clean Slate

-

Blog Post Highlighting the hidden: Integrating operational infrastructure into the community

-

Published Article Graeme Masterton talks commuter systems, working from home

-

Blog Post What to consider when converting commercial or office space to life-science use

-

Published Article How Creative Designs Can Further a Vision of Sustainability and Resilience

-

Published Article Chimney Hollow Reservoir Construction Risk Management

-

Webinar Recording Improving Dam Safety with Risk Informed Decision Making

-

Blog Post Elevating low-rise development—it’s a SWELL idea

-

Published Article Wellness in the Workplace: Burnout from the blurred lines of work and home

-

Blog Post Untapped potential: Expanding healthcare space, capabilities within a limited footprint

-

Published Article Airborne Electromagnetic Surveys: One More Tool in California’s Journey to Sustainable Groundwater Management

-

Published Article Can alternative tailings disposal become the norm in mining?

-

Publication Design Quarterly Issue 13 | After the Reset

-

Webinar Recording Adapting to 21st Century Water Resource Challenges

-

Webinar Recording Sustainable mining: The future is now

-

Blog Post Harmonizing the seen and unseen when designing for neurodiversity

-

Published Article Building a new paradigm: commissioning in the 21st century

-

Blog Post Don’t just offset carbon, remove it

-

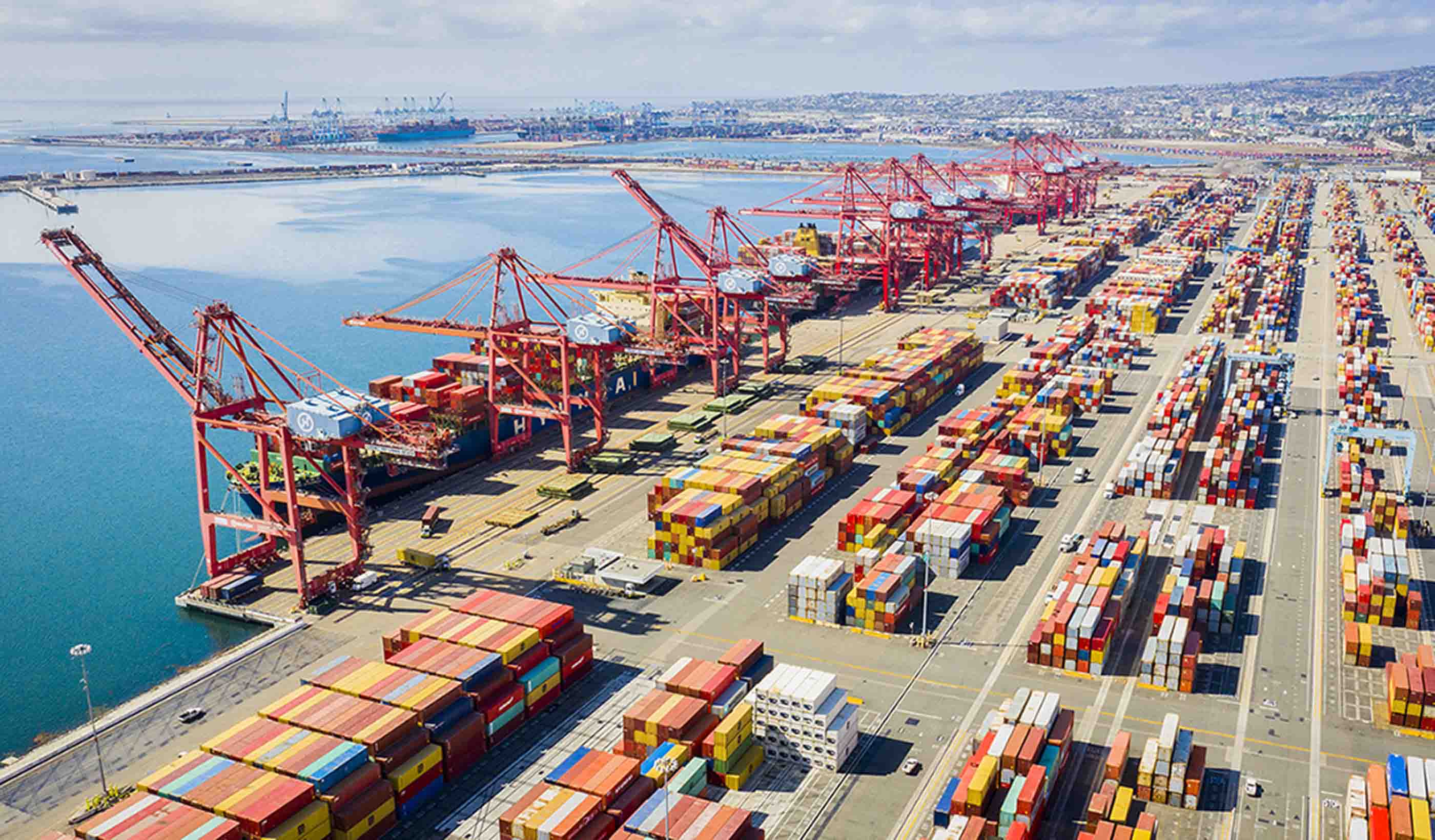

Blog Post The port of the future: Secure, sustainable, and welcoming

-

Video Water policy institute pioneers solutions for sustainable water future

-

Blog Post Recycle your building: 8 reasons to consider adaptive reuse and retrofitting

-

Webinar Recording Designing with equity in mind: Rethinking how we approach projects

-

Blog Post Charged up: Key considerations around electric vehicle use on mine sites

-

Blog Post The potential of biogas in the energy transition

-

How can offshore wind power a more sustainable energy infrastructure?

-

What role should the energy industry play in a sustainable future?

-

Blog Post How can we reduce the carbon footprint of our oil and gas infrastructure?

-

Building partnerships for sustainable and resilient communities

-

Webinar Recording PFAS Investigations at Bulk Fuel Terminals and Refineries

-

Published Article 4 questions transit agencies should be asking about AVs right now

-

Presentation Designing for Impact: Hubs and Corridors

-

Blog Post The water industry is feeling the climate change squeeze

-

Published Article Top Trends in Tailings Closure

-

Article Micromobility Q&A: Are we on the road to a future of connected, Complete Streets?

-

Webinar Recording The State of the Art in Selenium Management and Regulation

-

Published Article 3,300-foot lifelines: Remote runway construction takes grit, group effort

-

Published Article From no natural light to low ceilings, interior designers share 15 clever fixes to design

-

Webinar Recording How to spend COVID relief funding to improve K12 environments

-

Published Article Not all carbon offsets are created equal

-

Blog Post Wastewater case study: 10 years. 4 procurement methods. 1 cutting-edge treatment project.

-

Video Providing Transportation Lifelines for Central Hawke’s Bay

-

Blog Post Community spirit can strengthen collective resilience—and you can design for it

-

Blog Post The eCommerce era of four-hour delivery

-

Blog Post More than just another bus route: Can Bus Rapid Transit spur compact urban development?

-

Video Creating a memorable passenger experience through art

-

Report What you need to know about the Coronavirus State and Local Fiscal Recovery Funds

-

Blog Post Plan B: Reasons to change your mining method from the obvious choice

-

Video Everyday essential resources

-

Blog Post As we return to the workplace, it’s important to ask: How safe is your building’s water?

-

Report Planning a resilient future for defense communities

-

Published Article The rise of water risk

-

Blog Post Can we learn to share? How the sharing economy can help launch the mobility revolution

-

Webinar Recording A New Way – Applying Asset Management to a Stormwater Program

-

Gull Bay First Nation Microgrid - Opportunities & Challenges

-

Webinar Recording Climate Change & Resiliency Planning

-

Blog Post Stantec Institute for Applied Science, Technology: Fueling innovation for utility systems

-

Webinar Recording Integrating Affordability Into Capital and Financial Planning

-

Blog Post Establishing clean water delivery is just the start: How to maintain investments long-term

-

Webinar Recording Lead in Drinking Water. Is Plumbosolvency an issue in New Zealand?

-

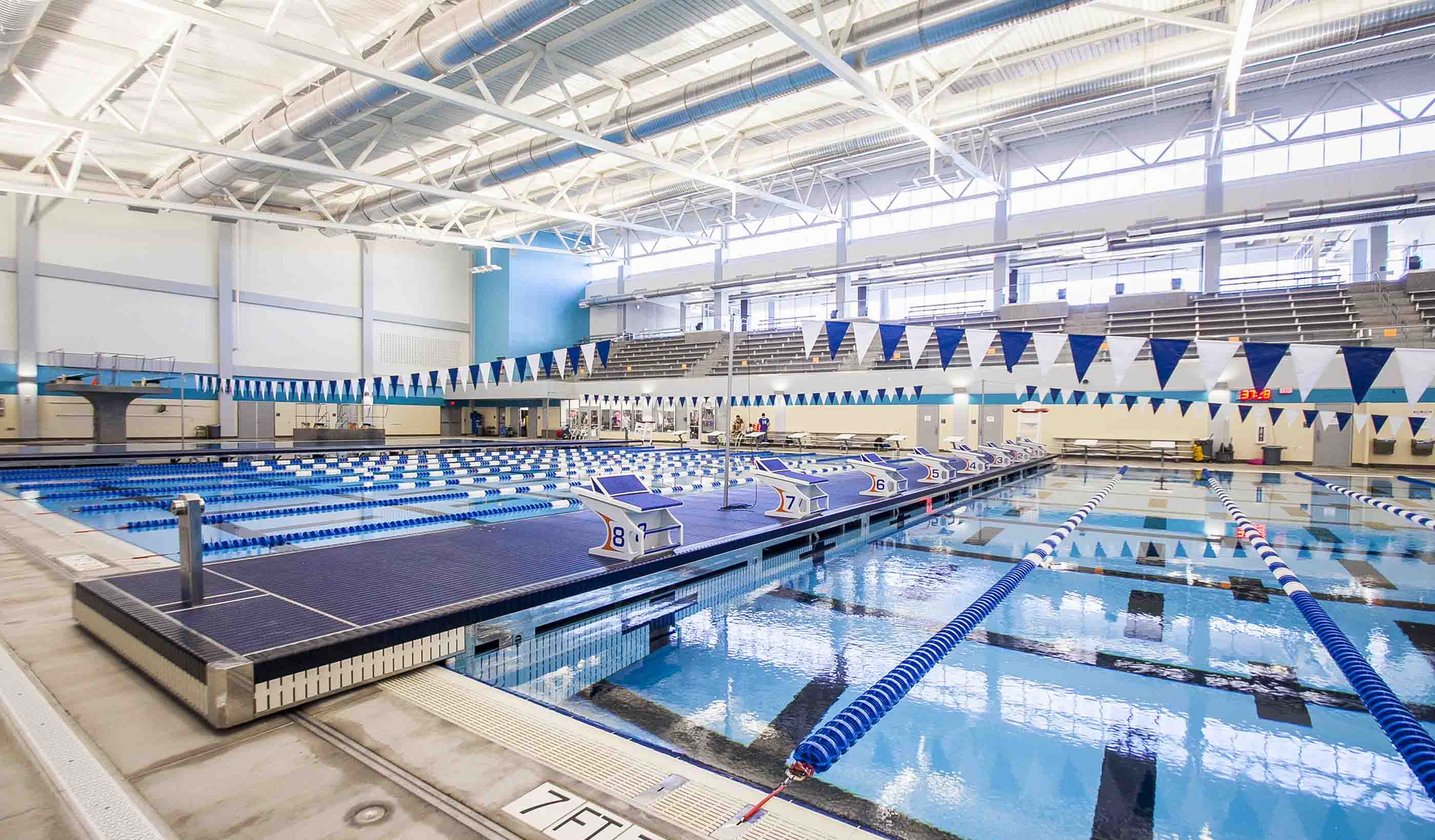

Published Article Emerging Trends in Sports Facility Design

-

Blog Post From zero to hero: Kick-starting the rebirth of North America’s malls

-

Blog Post We can think differently to create more equitable outcomes in city building

-

Blog Post Supporting equity with an inclusive neighborhood—it’s urban planning for all

-

Webinar Recording Using Customer Insight to Improve Operations and Asset Performance

-

Published Article Elevators and Fire Sprinklers: Fire Safety Moves On Up

-

Blog Post Designing Complete Streets for towns and suburbs, not just for big cities

-

Video Ottawa CSST: A life on the water and a personal project

-

Blog Post Celebrating Niger’s rich heritage with design that instills national pride

-

Blog Post Early education case study: Using design to nurture diverse learners

-

Report What do employees want in the office of the future? See what they told us.

-

Brochure Planning for a New Workplace Paradigm

-

Presentation Producing Hydrogen using Hydropower in the US

-

Webinar Recording Net Zero Emissions and Climate Change Resilience for Mining

-

Video Exploring the possibilities with 3D printing

-

Blog Post 6 steps for effective modularization and preassembly planning

-

Blog Post How satellite image fusion and machine-learning can help us monitor large water bodies

-

Technical Paper A probabilistic model for assessing debris flow propagation at regional scale: a case study in Campania region, Italy

-

Publication Design Quarterly Issue 12 | Equity and Inclusion

-

Whitepaper What business are airports really in?

-

Published Article Living with Water in New Orleans

-

Published Article Why smart buildings are core to business continuity

-

Blog Post COVID-19 has accelerated the need for flexible labs and research environments

-

Article Taking design to new heights through market insights and innovation

-

Technical Paper DebrisFlow Predictor: an agent-based runout program for shallow landslides

-

Podcast The Road to Autonomy

-

Published Article Office Design in a Post-Covid World

-

Blog Post 8 design strategies to enhance access to urban mental healthcare

-

Blog Post Clean energy case study: A conversation about powering Indigenous and remote communities

-

Video Rolling out new mobility pilots

-

Video Making mobility work for people

-

Video Planning for the future of mobility

-

Blog Post The American Rescue Plan Act: 4 questions to get thinking about infrastructure projects

-

Blog Post Finding success for downtown office space after COVID-19

-

Webinar Recording PFAS and the Energy Industry

-

Blog Post A new digital tool can help predict landslides, protecting people and infrastructure

-

Blog Post World Water Day: Valuing a finite, irreplaceable resource

-

Blog Post Beyond the terminals: How airports are helping strengthen communities and promote culture

-

Published Article Examining alternative project delivery

-

Blog Post Is digitization driving a new economic growth paradigm fueled by the circular economy?

-

Published Article Don’t damn ageing dams

-

Published Article Iowa Dam Adds Hydroelectric Power Generation

-

Published Article Double-edged sword for industrial sector

-

Podcast We need an EV charging renaissance

-

Published Article Engineers use hydropower from Red Rock Dam to generate clean and reliable electricity

-

Blog Post Completing the picture: The future of hydraulic modeling is two dimensional

-

Published Article Chicago’s Historic Post Office Houses an Expansive Office for Walgreens

-

Blog Post 4 reasons why 3D modeling and BIM are the future of infrastructure design

-

Published Article Making Richmond Hill future-ready: Interview with David Dixon

-

Video Stantec kicks off the United Nations’ Decade on Ecosystem Restoration RIGHT!

-

Blog Post We can build better communities through landscape-led cities that focus on people not cars

-

Published Article Improving Children’s Wellbeing with Nature in Mind

-

Published Article Certification is Serious (but don’t just take my word for it)

-

Published Article How can strategic water resource options deliver best value for all?

-

Blog Post Keeping retail banks relevant (Part 2): Understanding generational preferences

-

Webinar Recording Integration of Company Culture and the Role of Engineering-of-Record

-

Blog Post Climate emergency: How communities are tackling the crisis

-

What role do buildings play in the energy transition?

-

Microgrids: A critical key to the energy transition

-

Blog Post How can hydrogen fuel a more sustainable energy future?

-

Blog Post The benefits of big hydro in Ethiopia

-

Video Global Leaders in Applied eDNA

-

Blog Post The Lily Pad Network: A natural analogy to advance resiliency

-

Blog Post Creating adaptable AV policy to match AV technology

-

Published Article College Libraries Turn to Transformational Tech

-

Published Article Supplemental Grouting at Neelum-Jhelum Dam

-

Webinar Recording The Future of Resource Recovery

-

Blog Post Keeping retail banks relevant (Part 1): Becoming a post-pandemic “third place”

-

Published Article Think Tank: Stanley A. Milner Library Renewal

-

Blog Post Building water reliability: New Colorado dam, complex tunnel strengthens water supply

-

Blog Post Maintaining momentum: 3 ways to keep a large development project moving forward

-

Published Article Lights, candles, action: How St. John's can find more bright spots in the dead of winter

-

7 trends and techs—and 3 obstacles—shaping the big picture for an energy transition

-

Blog Post 3 public engagement practices for holistic urban planning

-

Publication Research + Benchmarking Issue 01 | How Buildings Shape Us

-

Webinar Recording Smart Utilities – Where is the World Heading?

-

Blog Post 3 important questions to ask when making a mining method change

-

Article A Stantec review of global AV research

-

Published Article The Nature Conservancy’s New Space by Stantec is Designed with A Colorado Nature Motif

-

Blog Post Accessibility isn’t just the law, it’s good business

-

Blog Post Wood and timber in student housing design

-

Published Article The State of the Global Dam Safety Practice

-

Published Article The Record Breakers!

-

Published Article Airports: Ensuring financial resilience through diversification

-

Blog Post Developing a new open space in your downtown community? Here are 5 key ideas to consider

-

Published Article Integrating a BAS into design

-

Blog Post 5 steps toward efficient building performance—from LEDs to heat pumps and more

-

Presentation Innovative design solutions for the future of micromobility

-

Webinar Recording Coagulation 101

-

Publication Design Quarterly Issue 11 | Empowering Design

-

Blog Post Sea change: 5 smart technologies to help us design better shipping gateways

-

Blog Post Building a legacy: Designing sports facilities that serve communities for decades

-

Webinar Recording PFAS: Regulations and Treatment

-

Whitepaper The Future of Mobility: Remaking Buffalo for the 21st Century

-

Published Article Deer Grove East: Restoring an historic forest preserve

-

Blog Post 3 challenges—and innovative solutions—when powering urban communities

-

Whitepaper Is your mine using remote sensing?

-

Webinar Recording Do more with Data - Digital Innovation for Integrity

-

Blog Post How to stave off the wintry chills and embrace the outdoors this COVID-19 winter

-

Published Article Hydroelectric Generators: The forgotten mechanical component

-

Published Article Advancing Cancer Care

-

Published Article 5 recommendations to cultivate digital-forward innovation in the built environment

-

Webinar Recording The Blue-Green Corridors Project in New Orleans

-

Published Article Innovative ideas to clear the air

-

Video Improving New Zealand housing

-

Webinar Recording Winter City Design

-

Blog Post 6 sustainable solutions for reducing GHG emissions at pipeline facilities

-

Webinar Recording Excess Soil – October 2020 Webinar

-

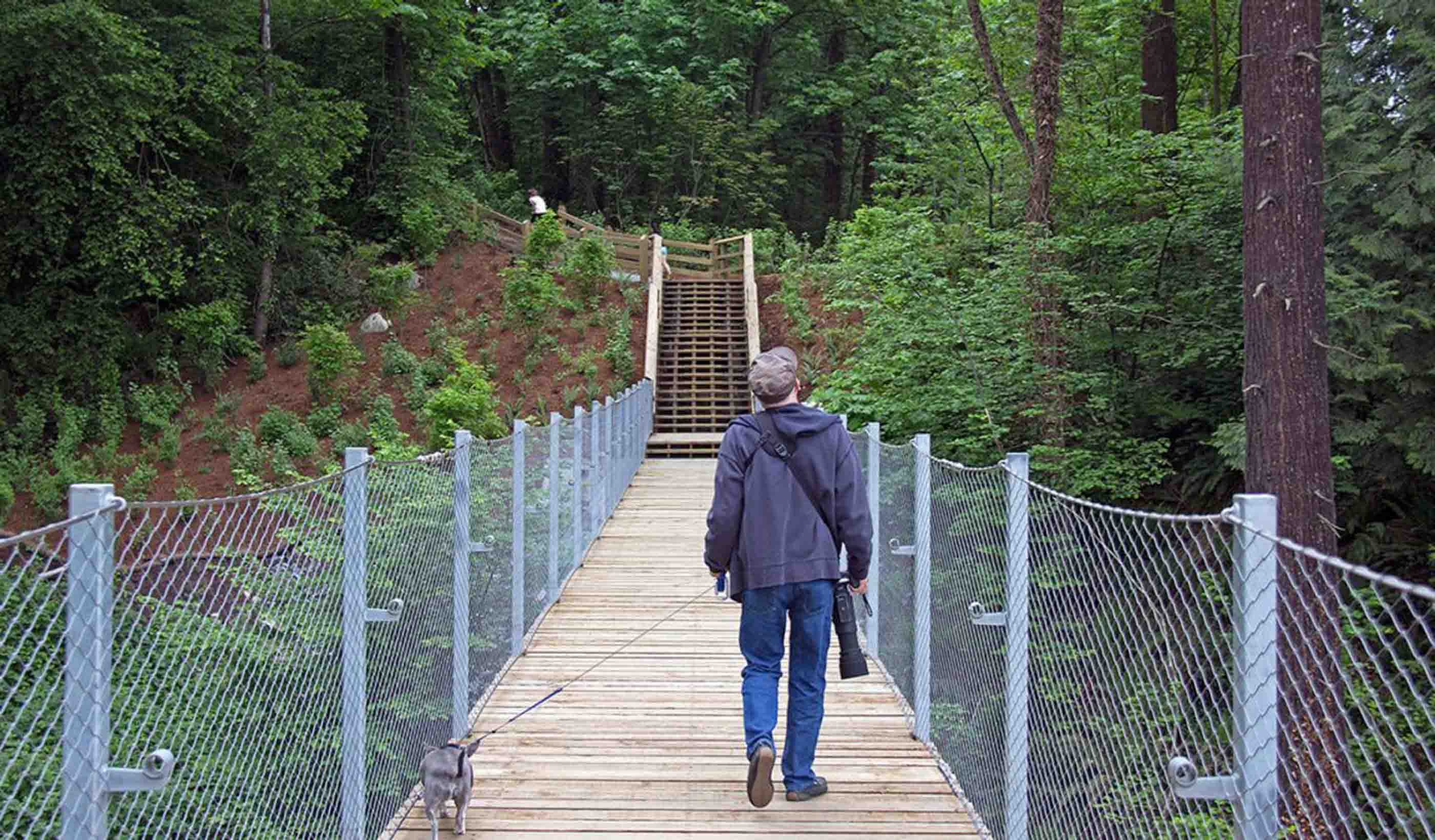

Blog Post Trail building 101: Exploring design details you may not have noticed on your local trail

-

Blog Post Hidden in history: Creating invisible mechanical systems in heritage buildings

-

Published Article Delivery with a difference

-

Blog Post Building industry diversity: 5 ways to empower disadvantaged business enterprise firms

-

Webinar Recording Assessing Nutrient Loading into Waterbodies from Reclaimed Water Practices

-

Blog Post Harnessing the power of mobile GIS apps to simplify field work and save time, money

-

Video Balancing impacts to wetlands with the need for critical infrastructure expansion

-

Blog Post What you need to know about Canada’s regulatory framework for GHG emissions

-

Blog Post Dinner in a “snow globe”—one designer’s plan to help restaurants during a COVID-19 winter

-

Blog Post How to turn shopping malls into a physical and virtual retail world

-

Webinar Recording Global Panel on COVID-19 Responses in Vulnerable Urban Contexts

-

Publication Water Futures +1 – Water Energy and Agriculture to 2035

-

Published Article How virtual design changes the way we work

-

Electricity as a clean source of energy: Quebec’s example

-

Published Article Students, tech, COVID drive higher ed HVAC design

-

Published Article The Indigenous Hub in Toronto Promises a Brighter Future

-

Blog Post Working 9 to 5 has been a double climate change whammy

-

Webinar Recording Strategic Assessment on Climate Change

-

Published Article 5 reasons the Denver Metro area will be a post-pandemic “winner”

-

Published Article Something for Everyone at Riverlife Park

-

Blog Post [With Video] Untapped energy: Transforming existing dams into a source of hydropower

-

Blog Post Carbon: A common language for change—now is the time to act

-

Published Article Mesocosm comparison of laboratory‐based and on‐site eDNA solutions

-

Blog Post Using digital solutions to capture, curate, protect, and analyze vital data

-

Webinar Recording Operations and Hazard Considerations in the Design of Treatment Plants

-

Published Article Pandemic Response Lessons From Singapore

-

Blog Post Warm welcome: What to consider when designing a modern mental health space

-

Blog Post How can digital data collection lead you and your project to success?

-

Published Article Future-proofing the industry

-

Webinar Recording Cerro Verde Mine Tailings Storage Facility

-

Published Article Renewal Rooms for Nurses

-

Published Article Taking down the walls around mental health care

-

Published Article Is this the beginning of a micromobility revolution?

-

Published Article The largest public charging station in the US showcases the challenges facing developers

-

Whitepaper How can Urban Agriculture Play a Role in the City of the Future?

-

Video Bridging communities while improving multimodal transportation

-

Blog Post Satellite technology provides a new way to assess historic pipeline releases

-

Published Article Tailoring Mental Health Design To Young Adult Patients

-

Webinar Recording Impacts of the Updated Steam Electric Power Generation ELGs

-

Blog Post [With Video] Why VR matters in healthcare design

-

Article The how and why of measuring your water footprint

-

Published Article Going viral: How the coronavirus pandemic could change the built environment

-

Published Article Core Considerations for Early Childhood Classroom Design

-

Webinar Recording Atlanta’s Regional Zero Waste Energy Recovery Program

-

Blog Post The pandemic’s lessons in loneliness and aging in place

-

Published Article Award-Winning Hydropower Project Helps Electrify Ethiopia

-

Blog Post Winter city design: 3 ways to save our small businesses this COVID-19 winter

-

Blog Post 5 critical factors to meeting deadlines for America’s Water Infrastructure Act of 2018

-

Published Article A global, standard approach to tailings, finally

-

Published Article Signaling a Safe Return

-

Published Article Basics of industrial, manufacturing facility design

-

Blog Post [With Video] Beyond the monument: Honoring our heroes through contextual design

-

Blog Post Making it easier to protect streams

-

Publication Design Quarterly Issue 10 | The Carbon Issue

-

Blog Post Continuing advances in wastewater-to-energy technology

-

Blog Post Creating a sustainable port for Canada’s Department of National Defence

-

Blog Post Remaining hands-on while distanced: How to take design-build collaboration virtual

-

Pipeline Threat Assessment for Safety Assurance

-

Published Article How This Veterans Research Building Evolved Amidst Design Changes

-

Blog Post The South Surrey Interceptor: A microtunneling milestone in North America

-

Blog Post What is Finite Element Analysis and how is it done?

-

Published Article A hybrid learning approach could redefine higher education

-

Published Article City of Somerville launches wastewater testing program to get ahead of COVID-19 spikes

-

Published Article How to make the most of COVID winter

-

Blog Post [With Video] How a young team shaped a new home for a mental health center

-

Blog Post 5 ways effective program management supports project owners

-

Blog Post Awaiting departure, 6 feet away: How will airport holdrooms adjust?

-

Published Article Acute Condition

-

Blog Post What does designing with the heart of healthcare mean for nurses?

-

Webinar Recording Mall Remix: breathing new life into old malls

-

Blog Post Did COVID-19 finally push us to completely paperless projects?

-

Published Article Stantec helps Ethiopia move towards ambitious power goals

-

Published Article Reconstruction could be COVID-19’s silver lining

-

Blog Post Beyond Complete Streets: Could COVID-19 help transform our roads into places for people?

-

Published Article Pandemic control in high-performance buildings

-

Blog Post How to focus on health and wellness in existing buildings

-

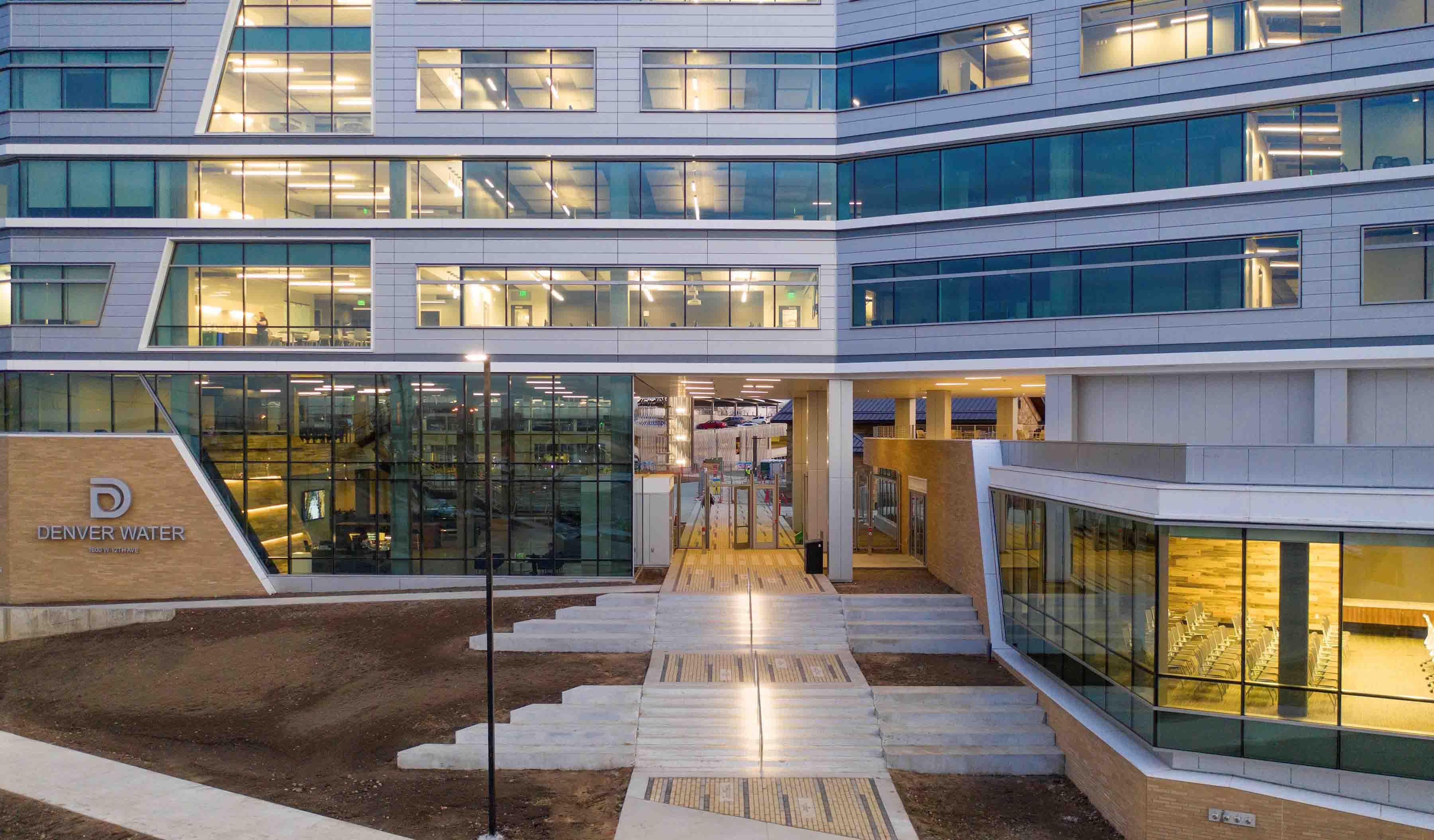

Published Article Denver Water: A Holistic Design For First-Of-Its-Kind Water Reuse Initiative By Stantec

-

Webinar Recording Just Suburbs: Creating Equitable Opportunities in Suburban Development

-

Blog Post Digital data collection for wildlife surveys leads to better—and faster—information

-

Published Article Room to Grow

-

Video Stantec welcomes you to the Decade on Ecosystem Restoration

-

Blog Post UV lighting in building infection control: Can passive light safely kill coronavirus?

-

Blog Post How has shopping changed over the past 100 years? A look at the evolution of retail

-

Blog Post 8 ways to accelerate your pipeline project

-

Published Article A glimpse of the future – opportunities to manage surface water

-

Brochure Getting Back to Productivity: Responding to COVID-19

-

Blog Post Deploying data to monitor aging pipelines

-

Whitepaper Data Management and Pipeline Integrity

-

Blog Post 5 strategies for creating safer, healthier hotel experiences

-

Published Article Going beyond climate change awareness

-

Blog Post How parametric design helps in the creation of custom interior elements

-

Blog Post Retail as a stage: Today’s extension of a centuries-old philosophy

-

Published Article Port of the Future

-

Blog Post From Stantec ERA: Updating and refurbishing our hydropower stations will pay off long-term

-

Blog Post Collaboration, cost savings, and quality control: The benefits of CM/GC project delivery

-

Blog Post Scanning smarter: Using reality capture on renovations

-

Blog Post Changes are coming to Oregon’s greenhouse gas reporting program—are you ready?

-

Blog Post Engineering our return to the workplace after COVID-19

-

Article Digital Impact Assessment: A Primer for Embracing Innovation and Digital Working

-

Blog Post Exploring the airborne transmission of the coronavirus and strategies for mitigating risk

-

Blog Post From Stantec ERA: Monitoring pipelines from space

-

Published Article Long Island Rail Road Expansion is a medley of moving parts

-